Posts: 1635 · Following: 138 · Followers: 881

I'm currently working on my second novel, which is complete but in the editing stage. I wrote my first novel over 20 years ago but then didn't write much until now.

I post about #Coding, #Flutter, #Writing, #Movies and #TV. I'll also talk about #Technology, #Gadgets, #MachineLearning, #DeepLearning and a few other things as the fancy strikes.

Lived in: 🇱🇰🇸🇦🇺🇸🇳🇿🇸🇬🇲🇾🇦🇪🇫🇷🇪🇸🇵🇹🇶🇦🇨🇦

Fahim Farook

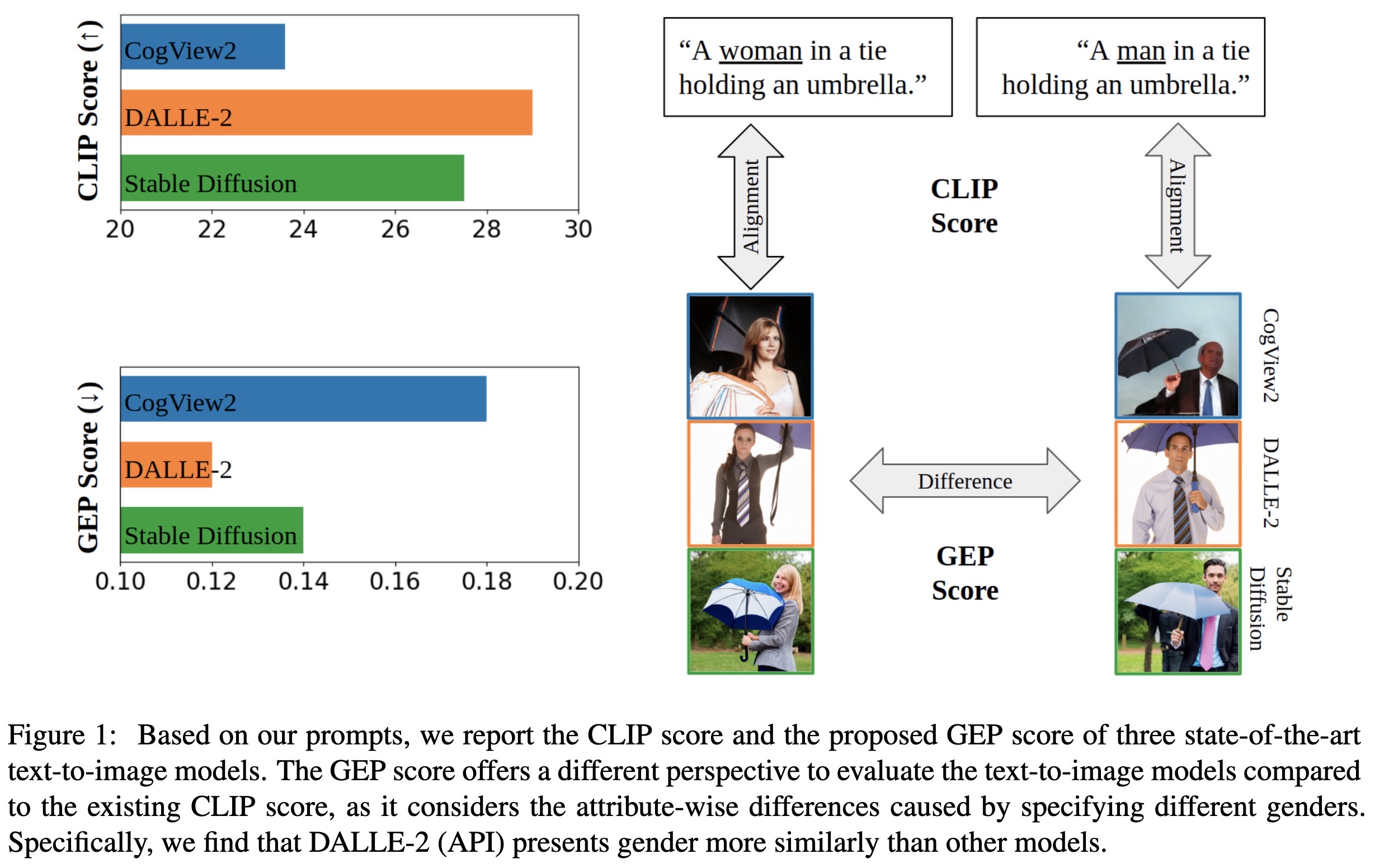

"Auditing Gender Presentation Differences in Text-to-Image Models. (arXiv:2302.03675v1 [cs.CV])" — A method using fine-grained self-presentation attributes to study how gender is presented differently in text-to-image models to quantify the frequency differences of presentation-centric attributes (e.g., "a shirt" and "a dress").

Paper: http://arxiv.org/abs/2302.03675

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Based on our prompts, we report…
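A minimal sketch of the counting step, assuming each generated image has already been reduced to a short text description (detecting attributes in the images themselves is not shown, and the function names are hypothetical). It measures how often each presentation attribute appears for one gender prompt versus the other and reports the difference.

```python
from typing import Dict, List


def attribute_frequency(descriptions: List[str], attribute: str) -> float:
    """Fraction of descriptions that mention `attribute` (case-insensitive substring match)."""
    if not descriptions:
        return 0.0
    hits = sum(attribute.lower() in d.lower() for d in descriptions)
    return hits / len(descriptions)


def frequency_gaps(group_a: List[str], group_b: List[str],
                   attributes: List[str]) -> Dict[str, float]:
    """Per-attribute frequency in group A minus frequency in group B (positive = more common in A)."""
    return {attr: attribute_frequency(group_a, attr) - attribute_frequency(group_b, attr)
            for attr in attributes}


if __name__ == "__main__":
    # Hypothetical descriptions of images generated from "man" vs "woman" prompts.
    man_images = ["a man wearing a shirt", "a man in a shirt and tie", "a man with a beard"]
    woman_images = ["a woman wearing a dress", "a woman in a shirt", "a woman in a red dress"]
    print(frequency_gaps(man_images, woman_images, ["a shirt", "a dress"]))
```

Substring matching is deliberately naive: "a red dress" does not count as "a dress" here, which is one reason a real audit would need a more careful attribute detector.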

Fahim Farook

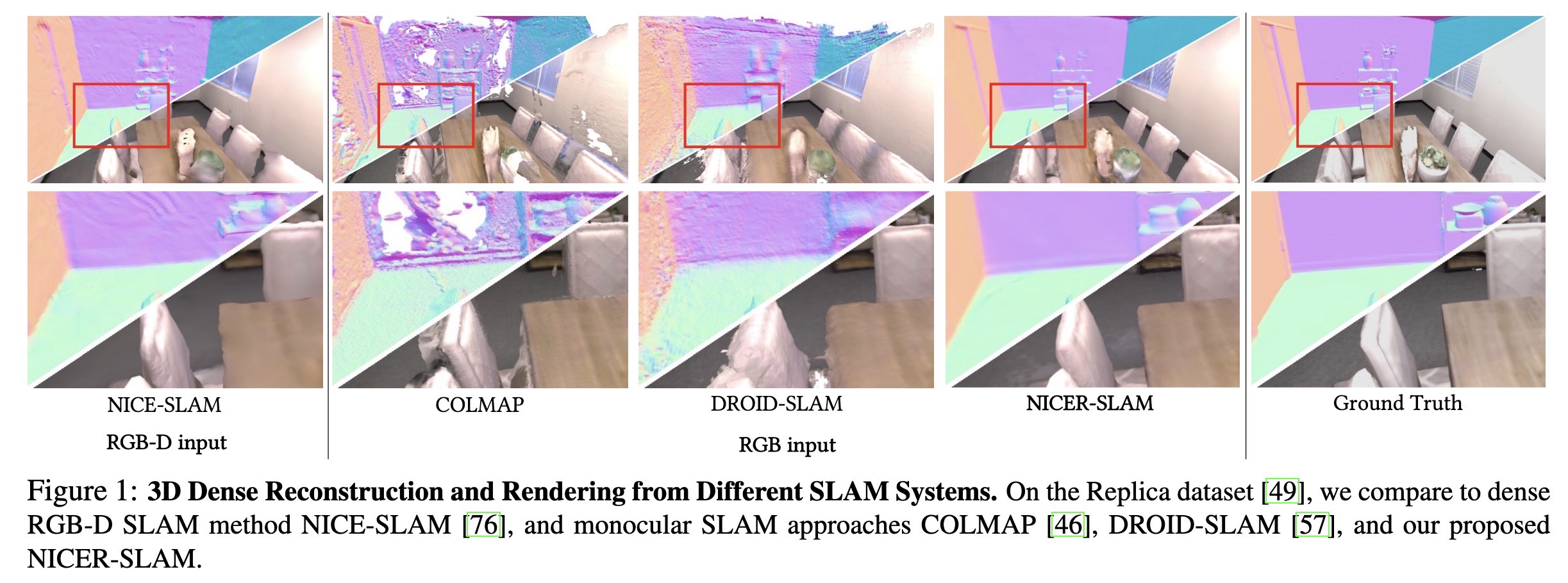

"NICER-SLAM: Neural Implicit Scene Encoding for RGB SLAM. (arXiv:2302.03594v1 [cs.CV])" — A dense RGB Simultaneous Localization And Mapping (SLAM) system that simultaneously optimizes for camera poses and a hierarchical neural implicit map representation, which also allows for high-quality novel view synthesis.

Paper: http://arxiv.org/abs/2302.03594

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

3D Dense Reconstruction and Ren…

Fahim Farook

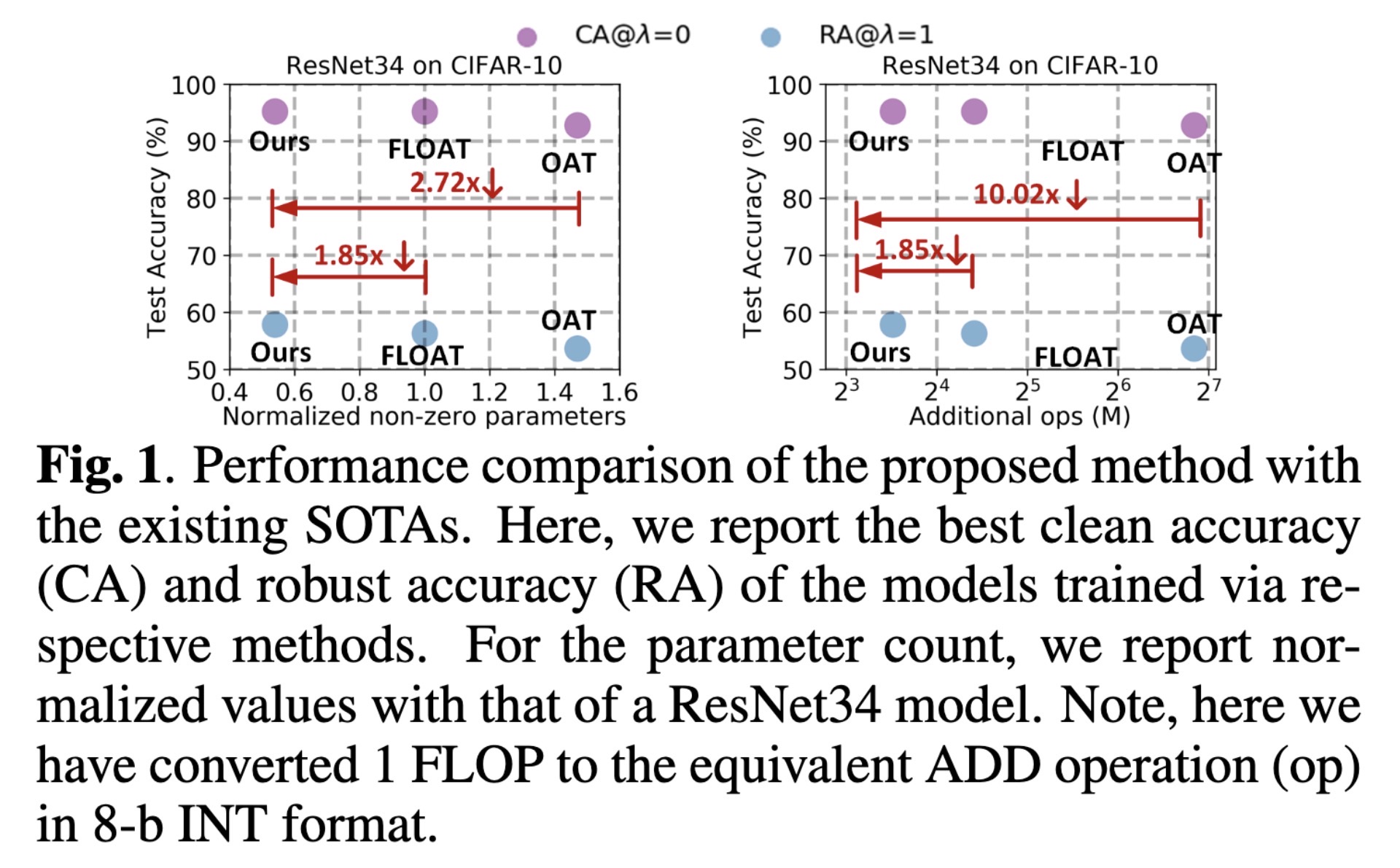

"Sparse Mixture Once-for-all Adversarial Training for Efficient In-Situ Trade-Off Between Accuracy and Robustness of DNNs. (arXiv:2302.03523v1 [cs.CV])" — A method that allows a Deep Neural Network (DNN) to train once and then in-situ trade-off between accuracy and robustness, that too at a reduced compute and parameter overhead.

Paper: http://arxiv.org/abs/2302.03523

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Performance comparison of the p…
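The trade-off can be illustrated with the λ-weighted objective behind once-for-all adversarial training: a single scalar λ in [0, 1] blends the loss on clean inputs with the loss on adversarial inputs. This is a minimal sketch on a logistic-regression model with a one-step FGSM attack, both assumptions chosen for illustration. It does not reproduce the paper's sparse mixture layers, and in once-for-all schemes the network is typically also conditioned on λ so that one set of weights can serve any trade-off at inference time; that conditioning is omitted here.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce(p, y, eps=1e-12):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    p = np.clip(p, eps, 1 - eps)
    return float(np.mean(-(y * np.log(p) + (1 - y) * np.log(1 - p))))


def fgsm(x, y, w, b, epsilon):
    """One-step L-inf attack on logistic regression: x + epsilon * sign(dLoss/dx)."""
    p = sigmoid(x @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]   # dBCE/dz = p - y and dz/dx = w
    return x + epsilon * np.sign(grad_x)


def lambda_weighted_loss(x, y, w, b, lam, epsilon=0.1):
    """(1 - lam) * clean loss + lam * adversarial loss; lam picks the accuracy/robustness point."""
    clean = bce(sigmoid(x @ w + b), y)
    adv = bce(sigmoid(fgsm(x, y, w, b, epsilon) @ w + b), y)
    return (1 - lam) * clean + lam * adv, clean, adv


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(32, 4))
    y = (rng.random(32) > 0.5).astype(float)
    w, b = rng.normal(size=4), 0.1
    for lam in (0.0, 0.5, 1.0):
        total, _, _ = lambda_weighted_loss(x, y, w, b, lam)
        print(f"lambda={lam:.1f}  loss={total:.4f}")
```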

Fahim Farook

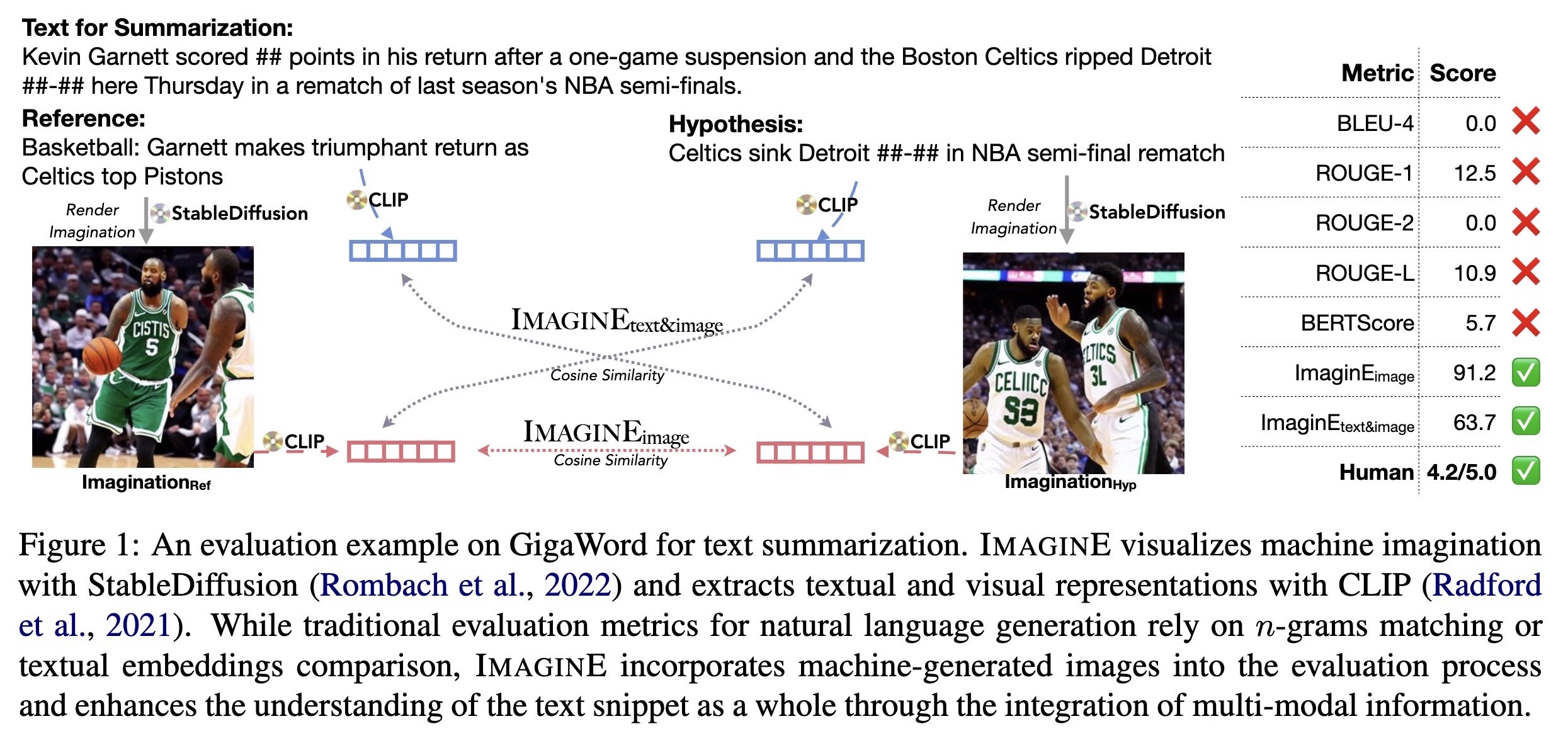

"ImaginE: An Imagination-Based Automatic Evaluation Metric for Natural Language Generation. (arXiv:2106.05970v2 [cs.CL] UPDATED)" — An imagination-based automatic evaluation metric for natural language generation, which with the help of StableDiffusion, automatically generates an image as the embodied imagination for a text snippet and computes the imagination similarity using contextual embeddings.

Paper: http://arxiv.org/abs/2106.05970

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

An evaluation example on GigaWo…
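The final scoring step can be sketched as a cosine similarity between embeddings, assuming the "imagined" images for the candidate text and the reference text have already been generated and encoded by some image encoder. The encoder, and any combination with text-based scores, are not shown, and the function names are hypothetical.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return float(a @ b / denom)


def imagination_similarity(candidate_image_emb, reference_image_emb):
    """Score a generated text by how close its imagined image is to the reference text's imagined image."""
    return cosine_similarity(candidate_image_emb, reference_image_emb)


if __name__ == "__main__":
    # Placeholder vectors standing in for real image-encoder outputs.
    print(imagination_similarity([0.2, 0.9, 0.1], [0.25, 0.8, 0.0]))
```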

Fahim Farook

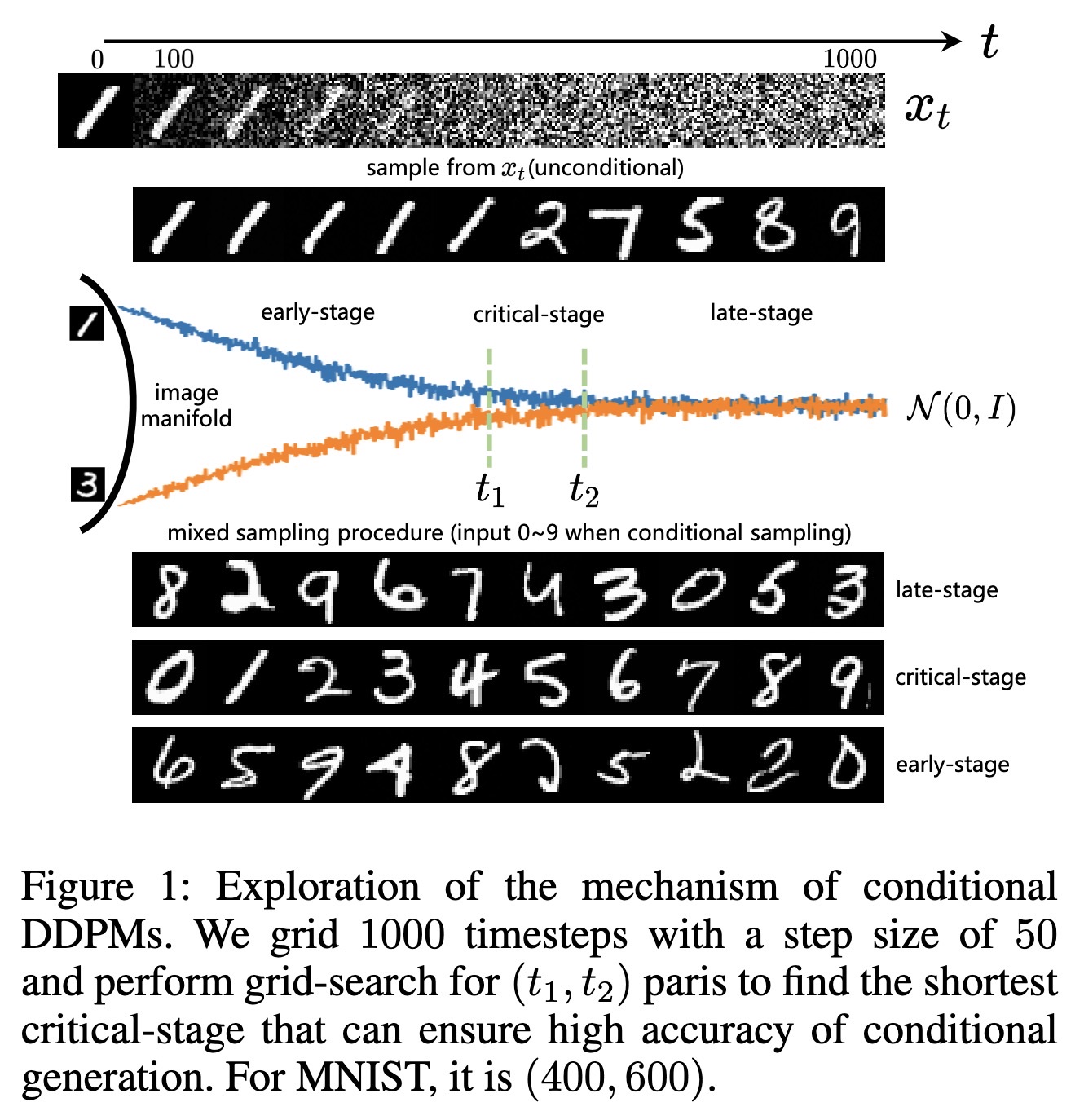

"ShiftDDPMs: Exploring Conditional Diffusion Models by Shifting Diffusion Trajectories. (arXiv:2302.02373v1 [cs.CV])" — A novel and flexible conditional diffusion model by introducing conditions into the forward process. The process utilizes extra latent space to allocate an exclusive diffusion trajectory for each condition based on some shifting rules, which will disperse condition modeling to all timesteps and improve the learning capacity of model.

Paper: http://arxiv.org/abs/2302.02373

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Exploration of the mechanism of…
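A minimal sketch of a shifted forward process, assuming a Gaussian forward step whose mean is moved by a condition-dependent term: x_t = sqrt(ᾱ_t) x_0 + k_t E(c) + sqrt(1 − ᾱ_t) ε. The schedule k_t = 1 − sqrt(ᾱ_t) (so that x_T ends up centred on E(c)) and the fixed number standing in for a learned condition encoder E(c) are assumptions for illustration; the paper studies its own shifting rules.

```python
import numpy as np


def linear_alpha_bar(T=1000, beta_start=1e-4, beta_end=0.02):
    """abar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)


def shifted_forward_sample(x0, cond_shift, t, alpha_bar, rng):
    """Sample x_t = sqrt(abar_t) x0 + k_t * E(c) + sqrt(1 - abar_t) * eps.

    cond_shift plays the role of E(c); k_t = 1 - sqrt(abar_t) is an assumed schedule
    that moves the trajectory's end point from 0 to E(c).
    """
    a = alpha_bar[t]
    k_t = 1.0 - np.sqrt(a)
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(a) * x0 + k_t * cond_shift + np.sqrt(1.0 - a) * eps


if __name__ == "__main__":
    ab = linear_alpha_bar()
    rng = np.random.default_rng(0)
    x0 = np.zeros(10_000)
    for name, shift in [("condition A", -3.0), ("condition B", 3.0)]:
        xT = shifted_forward_sample(x0, shift, len(ab) - 1, ab, rng)
        print(f"{name}: mean of x_T = {xT.mean():.2f}")
```

Two different conditions drive the same x_0 toward two different end points, which is the sense in which each condition gets its own trajectory.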

Fahim Farook

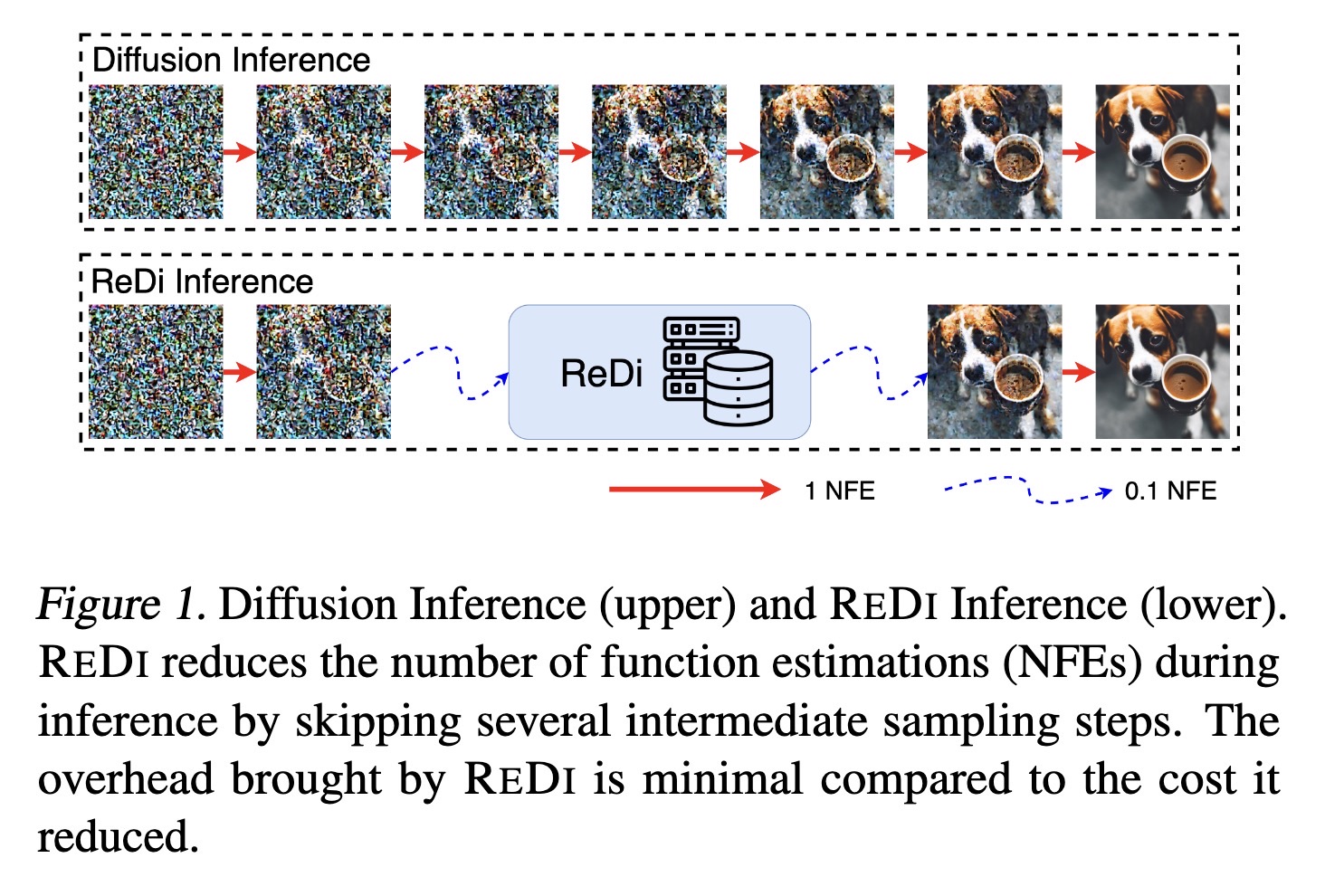

"ReDi: Efficient Learning-Free Diffusion Inference via Trajectory Retrieval. (arXiv:2302.02285v1 [cs.CV])" — A simple yet learning-free Retrieval-based Diffusion sampling framework capable of fast inference, which retrieves a trajectory similar to the partially generated trajectory from a precomputed knowledge base at an early stage of generation, skips a large portion of intermediate steps, and continues sampling from a later step in the retrieved trajectory.

Paper: http://arxiv.org/abs/2302.02285

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Diffusion Inference (upper) and…
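A minimal sketch of the retrieval step, assuming the knowledge base is simply an array of stored sampling trajectories and that similarity is plain Euclidean distance at one early "key" step. The paper's exact key construction and any blending of several neighbours are not shown, and the function names are hypothetical. The returned state is where a sampler would resume, skipping every step in between.

```python
import numpy as np


def build_knowledge_base(trajectories, key_step):
    """trajectories: (N, T, D) array of precomputed sampling trajectories.

    Returns the per-trajectory states at key_step (used as retrieval keys) and the trajectories.
    """
    keys = trajectories[:, key_step, :]
    return keys, trajectories


def retrieve_and_skip(x_key, keys, trajectories, jump_step):
    """Find the stored trajectory closest to x_key at the key step; return its index and its state at jump_step."""
    dists = np.linalg.norm(keys - x_key[None, :], axis=1)
    idx = int(np.argmin(dists))
    return idx, trajectories[idx, jump_step, :]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    stored = rng.normal(size=(50, 20, 8))        # toy stand-in for real sampling trajectories
    keys, kb = build_knowledge_base(stored, key_step=3)
    partial = stored[17, 3] + 0.01 * rng.normal(size=8)
    idx, resume_from = retrieve_and_skip(partial, keys, kb, jump_step=15)
    print("retrieved trajectory", idx, "- resume sampling at step 15")
```

In a real sampler, the query would come from running the first few denoising steps, and the steps after `jump_step` would be run normally.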

Fahim Farook

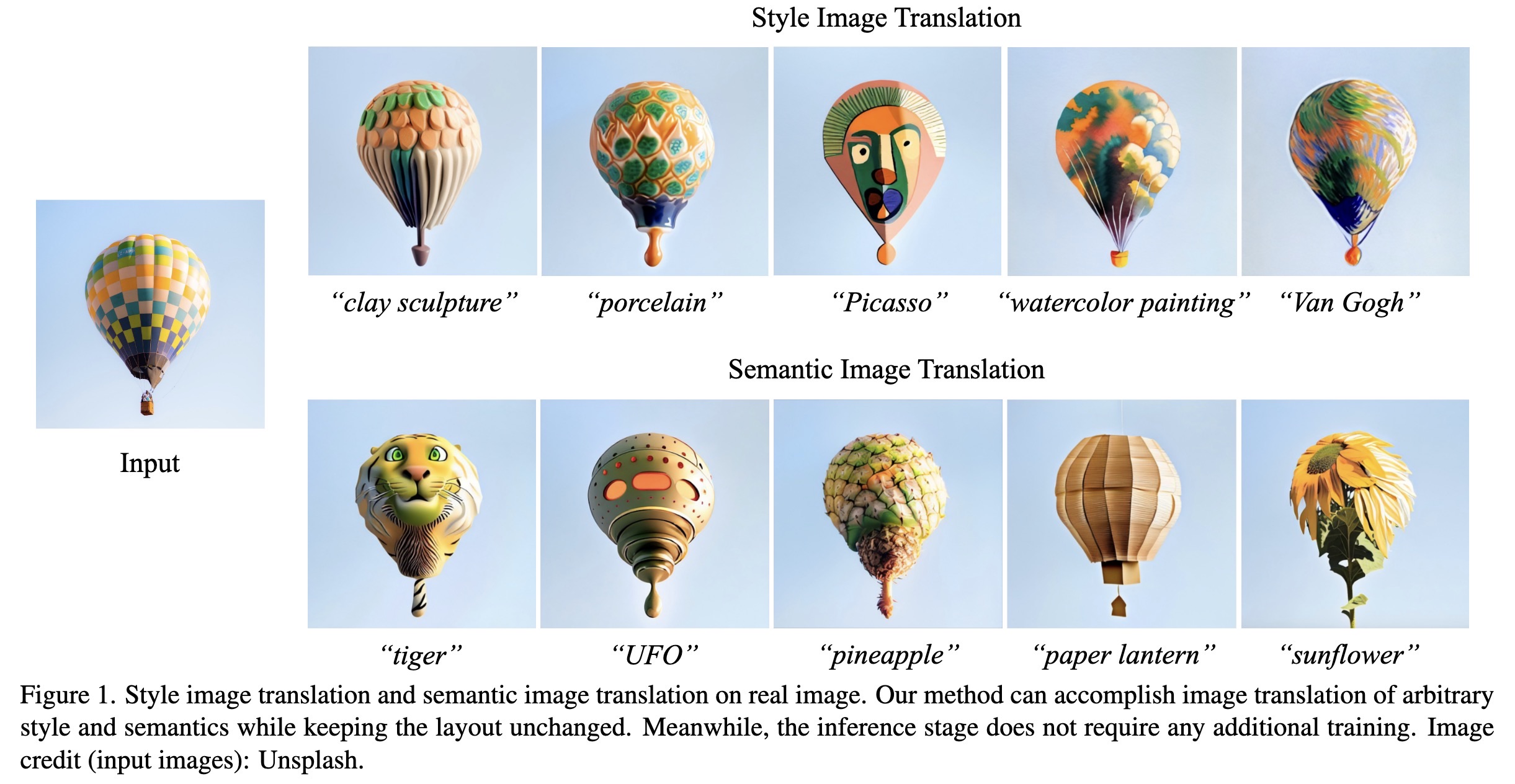

"Design Booster: A Text-Guided Diffusion Model for Image Translation with Spatial Layout Preservation. (arXiv:2302.02284v1 [cs.CV])" — A new approach for flexible image translation by learning a layout-aware image condition together with a text condition, which co-encodes images and text into a new domain during the training phase.

Paper: http://arxiv.org/abs/2302.02284

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Style image translation and sem…

Fahim Farook

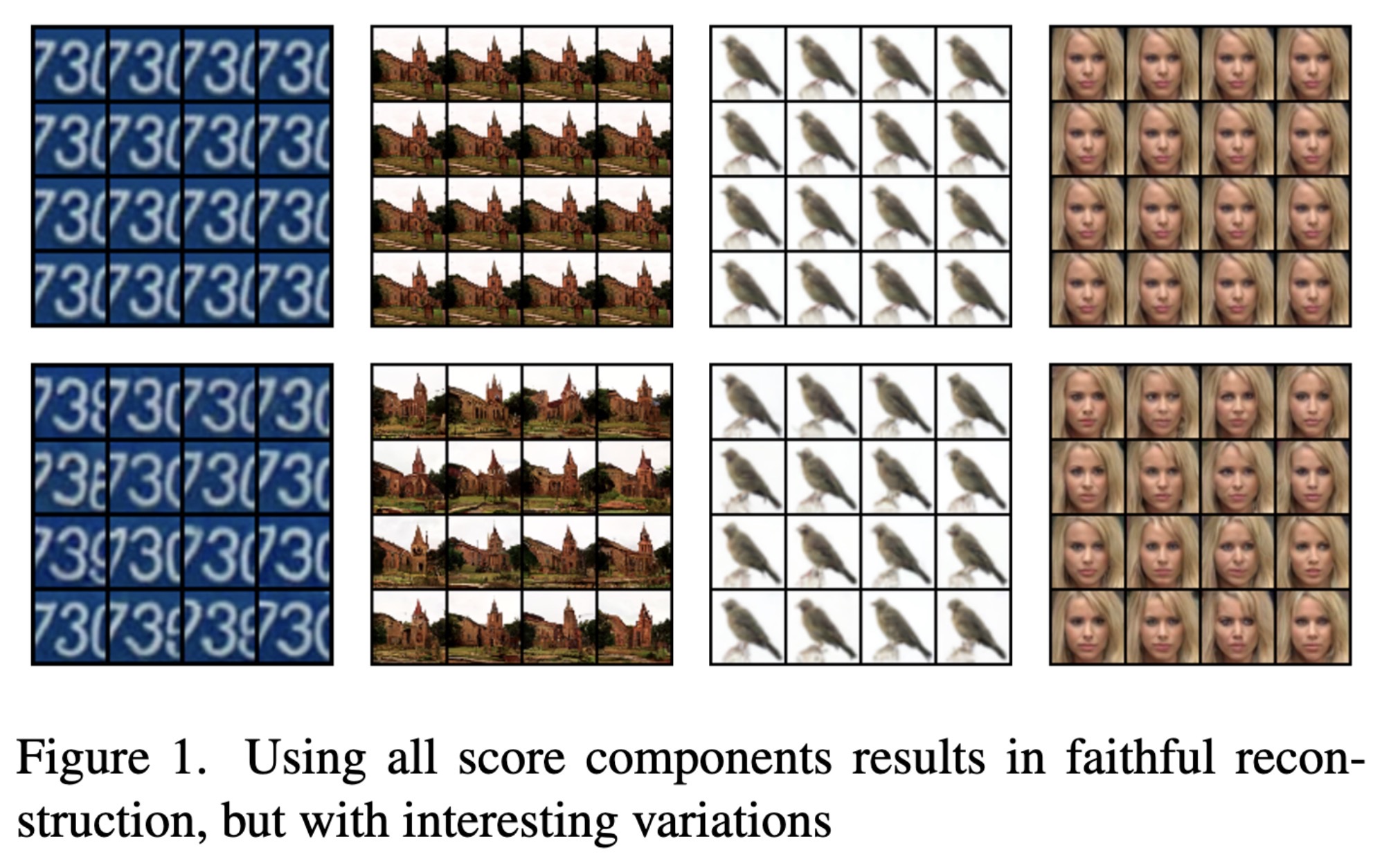

"Divide and Compose with Score Based Generative Models. (arXiv:2302.02272v1 [cs.CV])" — Learning image components in an unsupervised manner in order to compose those components to generate and manipulate images in an informed manner.

Paper: http://arxiv.org/abs/2302.02272

Code: https://github.com/sandeshgh/Score-based-disentanglement

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Using all score components resu…

Fahim Farook

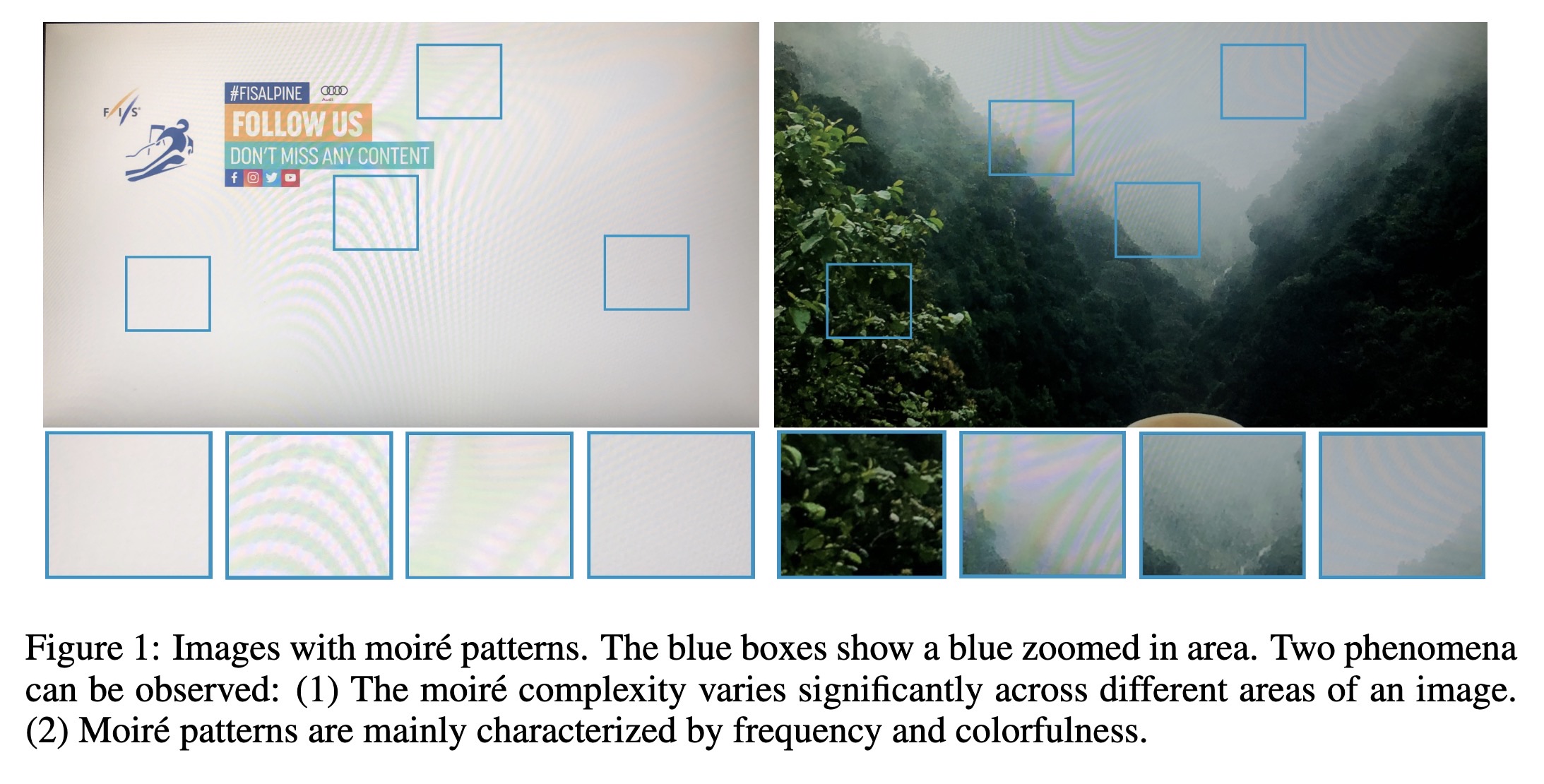

"Real-Time Image Demoireing on Mobile Devices. (arXiv:2302.02184v1 [cs.CV])" — A study on accelerating demoireing networks and a dynamic demoireing acceleration method (DDA) capable of real-time deployment on mobile devices.

Paper: http://arxiv.org/abs/2302.02184

Code: https://github.com/zyxxmu/DDA

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Images with moiré patterns. Th…

Fahim Farook

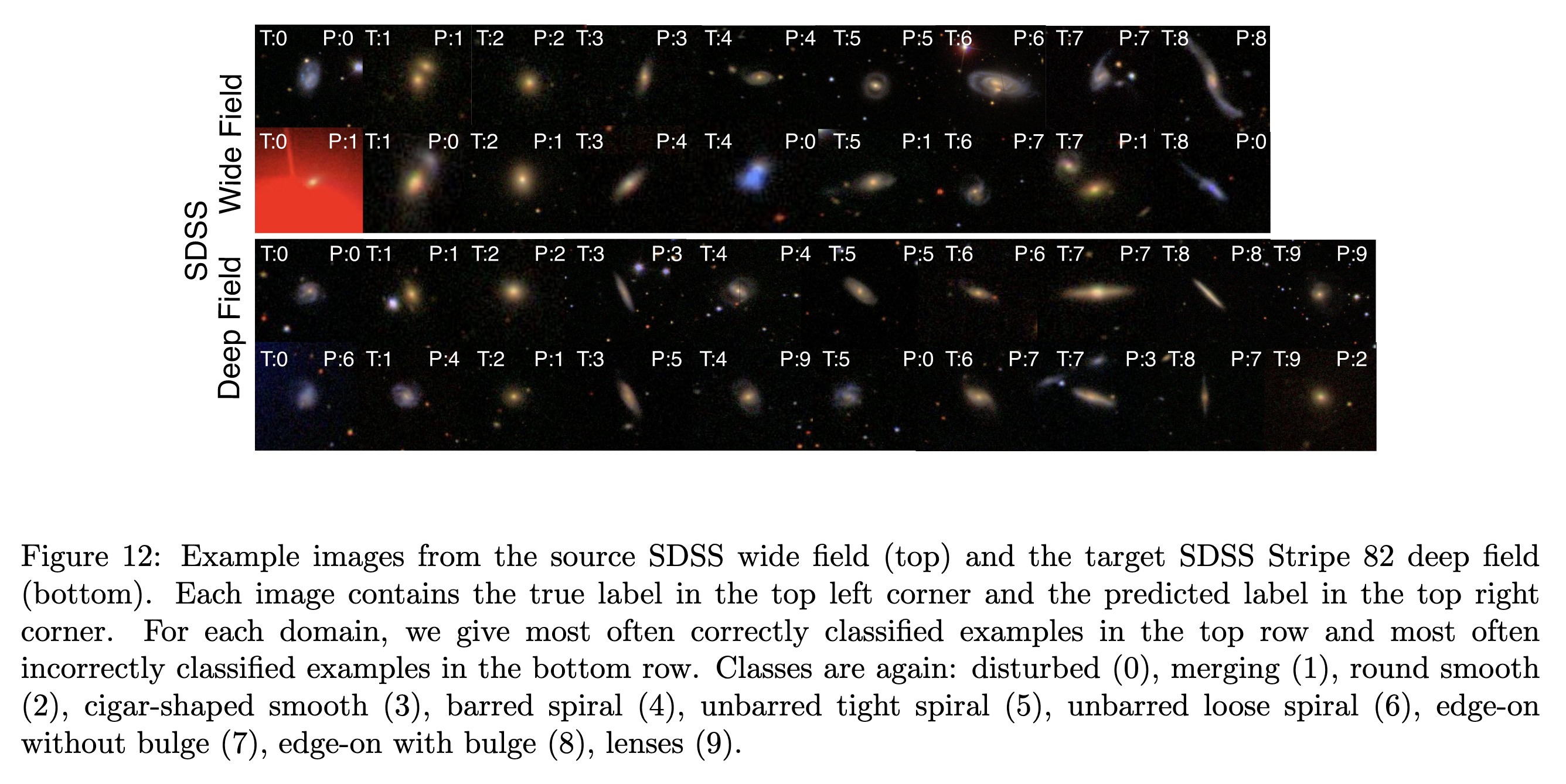

"DeepAstroUDA: Semi-Supervised Universal Domain Adaptation for Cross-Survey Galaxy Morphology Classification and Anomaly Detection. (arXiv:2302.02005v1 [astro-ph.GA])" — A universal domain adaptation method to overcome the challenge of artificial intelligence methods extracting dataset-specific, non-robust features.

Paper: http://arxiv.org/abs/2302.02005

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Example images from the source …

Fahim Farook

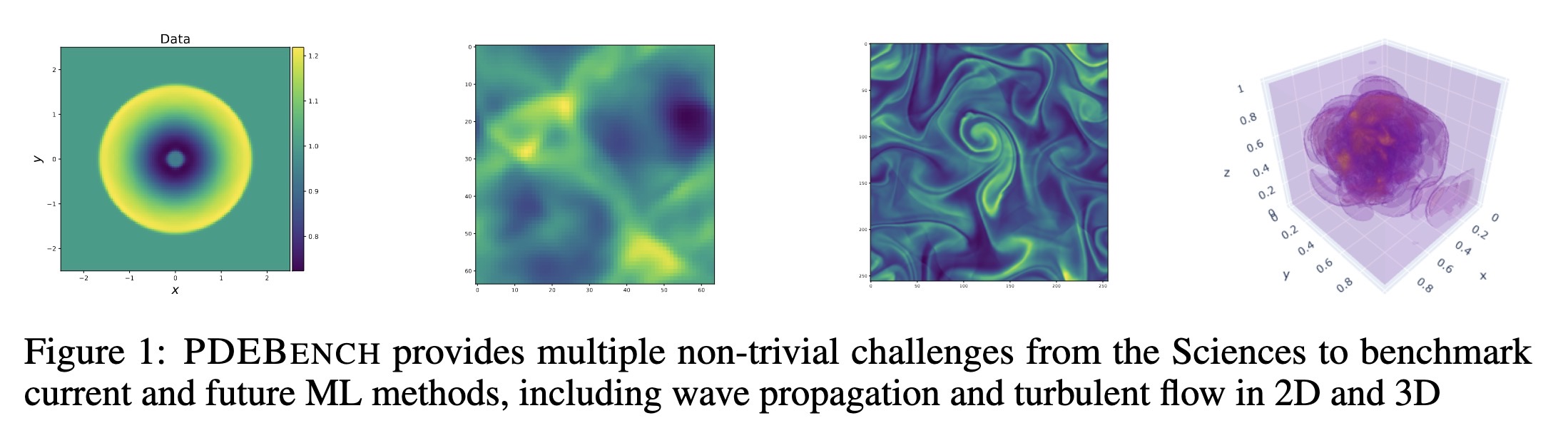

"PDEBENCH: An Extensive Benchmark for Scientific Machine Learning. (arXiv:2210.07182v4 [cs.LG] UPDATED)" — A benchmark suite of time-dependent simulation tasks based on Partial Differential Equations (PDEs), which comprises both code and data to benchmark the performance of novel machine learning models against both classical numerical simulations and machine learning baselines.

Paper: http://arxiv.org/abs/2210.07182

Code: https://github.com/pdebench/PDEBench

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

PDEBENCH provides multiple non-…
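To make "classical numerical simulation" baselines concrete, here is a minimal sketch of one: a first-order upwind solver for 1D linear advection, u_t + β u_x = 0 on a periodic domain, scored against the exact solution with a normalized RMSE. This is not PDEBench code; the grid size, CFL number and initial condition are arbitrary illustrative choices, and PDEBench's own data generators and metric definitions may differ.

```python
import numpy as np


def upwind_advection(u0, beta, dx, dt, n_steps):
    """First-order upwind scheme for u_t + beta * u_x = 0 (beta > 0) on a periodic grid."""
    if beta <= 0:
        raise ValueError("this sketch only handles beta > 0")
    c = beta * dt / dx                     # CFL number; the scheme is stable for c <= 1
    if c > 1:
        raise ValueError(f"CFL number {c:.3f} > 1; reduce dt")
    u = u0.copy()
    for _ in range(n_steps):
        u = u - c * (u - np.roll(u, 1))    # np.roll(u, 1)[i] == u[i - 1], periodic wrap-around
    return u


def nrmse(pred, target):
    """Normalized RMSE: ||pred - target|| / ||target||."""
    return float(np.linalg.norm(pred - target) / np.linalg.norm(target))


if __name__ == "__main__":
    n, beta = 256, 1.0
    x = np.arange(n) / n
    dx = 1.0 / n
    dt = 0.5 * dx / beta                   # CFL number 0.5
    n_steps = int(round(0.5 / dt))         # integrate to t = 0.5
    u0 = np.sin(2 * np.pi * x)
    u_num = upwind_advection(u0, beta, dx, dt, n_steps)
    u_exact = np.sin(2 * np.pi * (x - beta * n_steps * dt))
    print(f"nRMSE of upwind baseline: {nrmse(u_num, u_exact):.4f}")
```

The residual error here comes mostly from the upwind scheme's numerical diffusion, which slightly damps the wave; that kind of systematic error is what learned surrogates are compared against.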

Fahim Farook

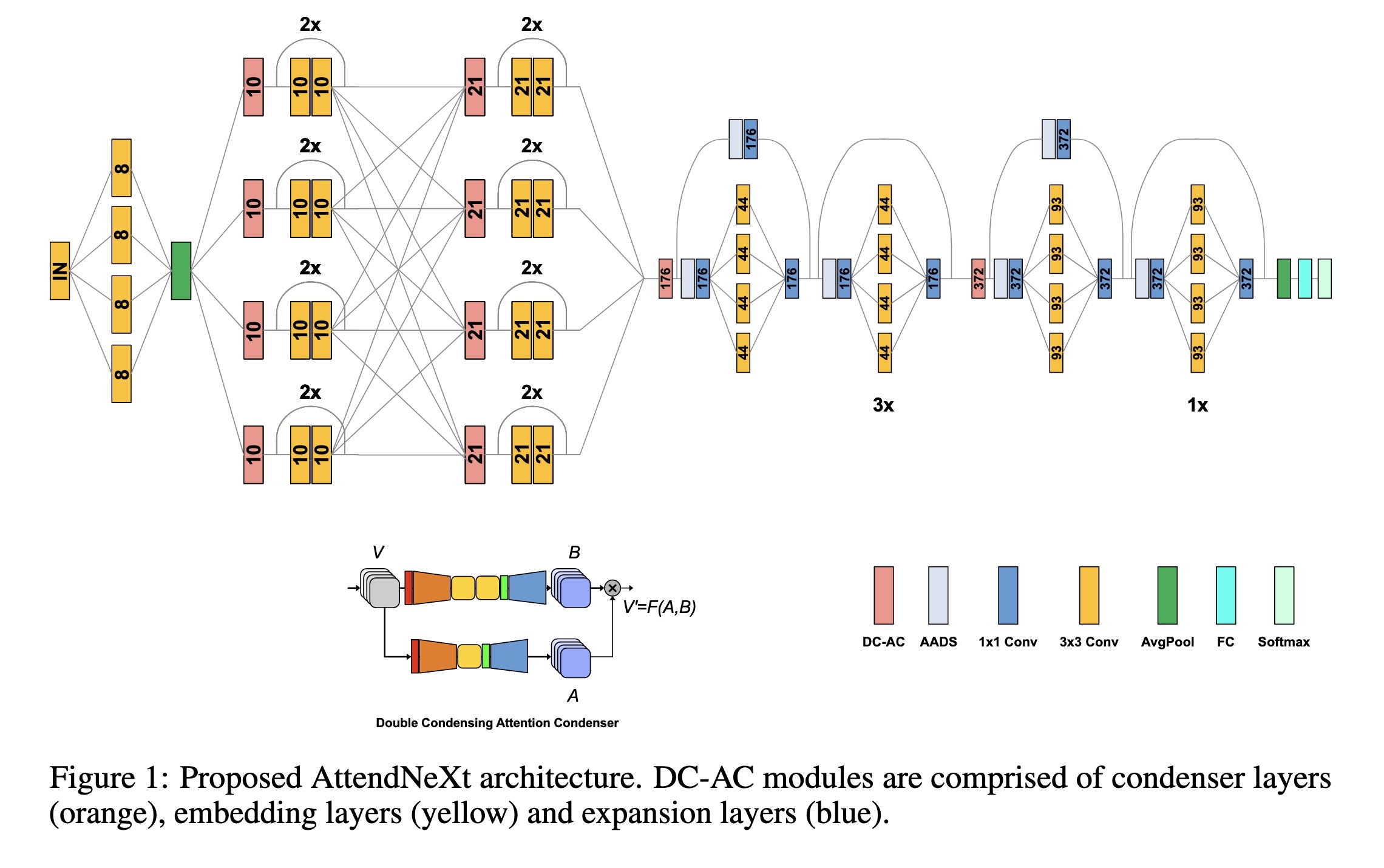

"Faster Attention Is What You Need: A Fast Self-Attention Neural Network Backbone Architecture for the Edge via Double-Condensing Attention Condensers. (arXiv:2208.06980v3 [cs.CV] UPDATED)" — A faster attention condenser design called double-condensing attention condensers that allow for highly condensed feature embeddings.

Paper: http://arxiv.org/abs/2208.06980

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Proposed AttendNeXt architectur…

Fahim Farook

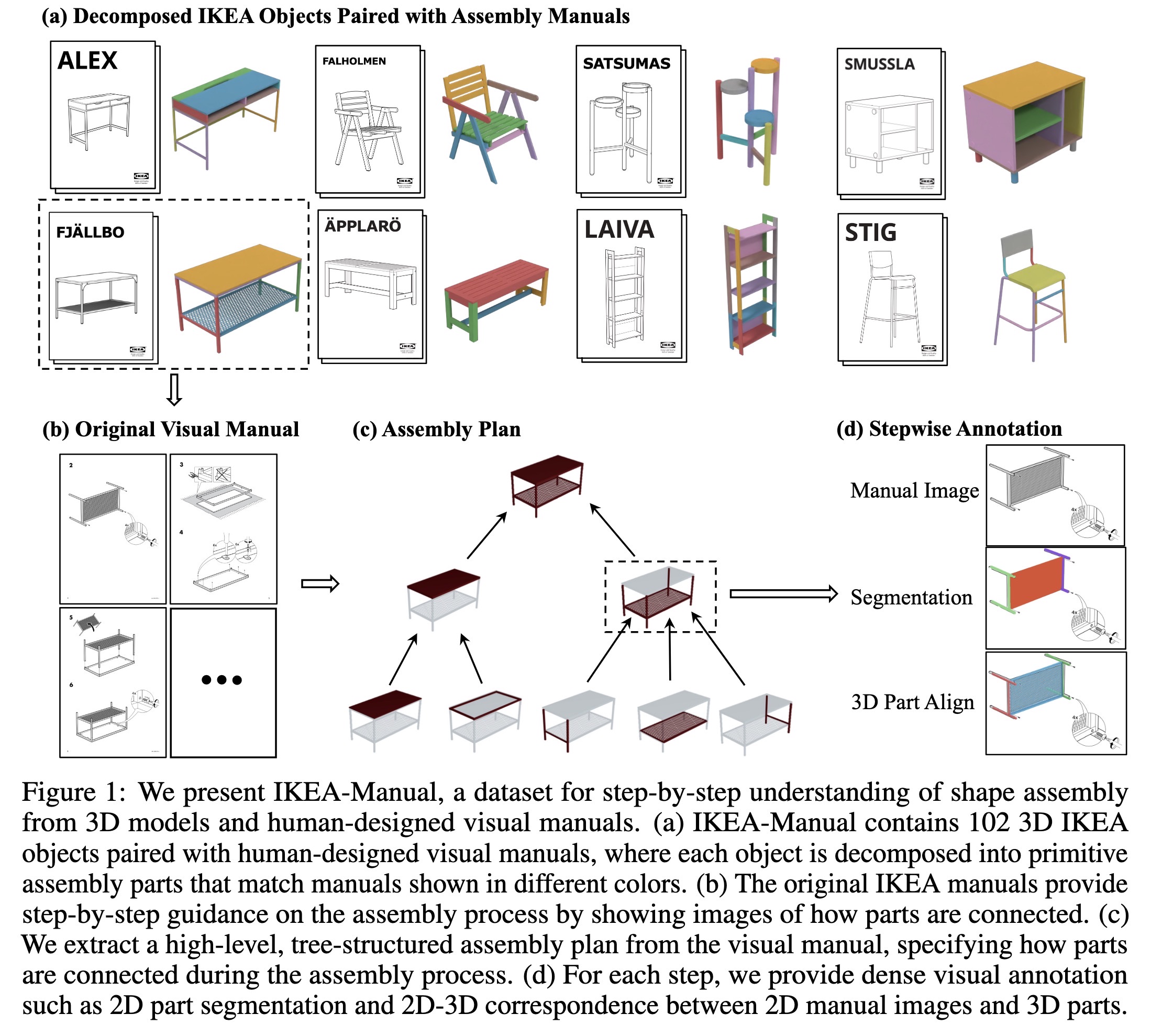

"IKEA-Manual: Seeing Shape Assembly Step by Step. (arXiv:2302.01881v1 [cs.CV])" — A dataset consisting of 102 IKEA objects paired with assembly manuals to help improve/test shape assembly activities since the manuals provide step-by-step guidance on how we should move and connect different parts in a convenient and physically-realizable way.

Paper: http://arxiv.org/abs/2302.01881

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

IKEA-Manual, a dataset for step…

Fahim Farook

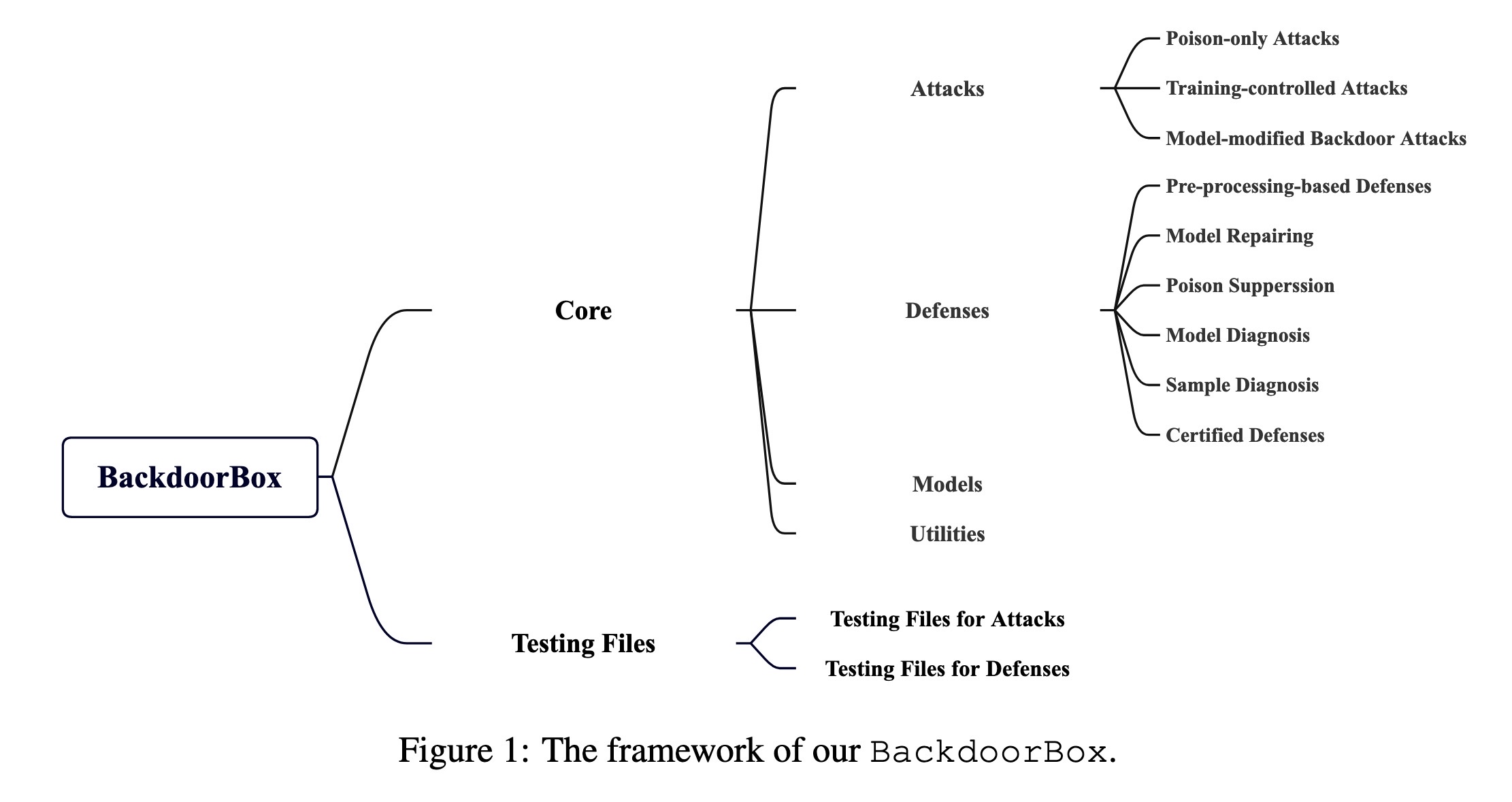

"BackdoorBox: A Python Toolbox for Backdoor Learning. (arXiv:2302.01762v1 [cs.CR])" — An open-sourced Python toolbox that implements representative and advanced backdoor attacks and defenses under a unified and flexible framework to help detect and possibly defend against backdoor attacks against deep neural networks (DNNs).

Paper: http://arxiv.org/abs/2302.01762

Code: https://github.com/THUYimingLi/BackdoorBox

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

The framework of BackdoorBox
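For readers new to backdoor attacks, here is a minimal sketch of classic BadNets-style data poisoning, the kind of attack such toolboxes implement: stamp a small trigger patch on a fraction of the training images and relabel them to an attacker-chosen class. This uses plain NumPy and is not BackdoorBox's API; the patch location, size and poisoning rate are illustrative assumptions.

```python
import numpy as np


def add_trigger(images, patch_value=1.0, size=3):
    """Stamp a square trigger in the bottom-right corner of each (N, H, W) image."""
    poisoned = images.copy()
    poisoned[:, -size:, -size:] = patch_value
    return poisoned


def poison_dataset(images, labels, target_label, rate, rng):
    """Copy the dataset; a `rate` fraction of samples get the trigger and are relabelled to target_label."""
    n = len(images)
    n_poison = int(round(rate * n))
    idx = rng.choice(n, size=n_poison, replace=False)
    x, y = images.copy(), labels.copy()
    x[idx] = add_trigger(images[idx])
    y[idx] = target_label
    return x, y, idx


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    imgs = rng.random((100, 8, 8))
    labs = np.arange(100) % 10
    x, y, idx = poison_dataset(imgs, labs, target_label=0, rate=0.1, rng=rng)
    print(f"poisoned {len(idx)} of {len(imgs)} samples")
```

A model trained on `(x, y)` can learn to associate the patch with class 0 while behaving normally on clean inputs, which is exactly what backdoor defenses try to detect.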

Fahim Farook

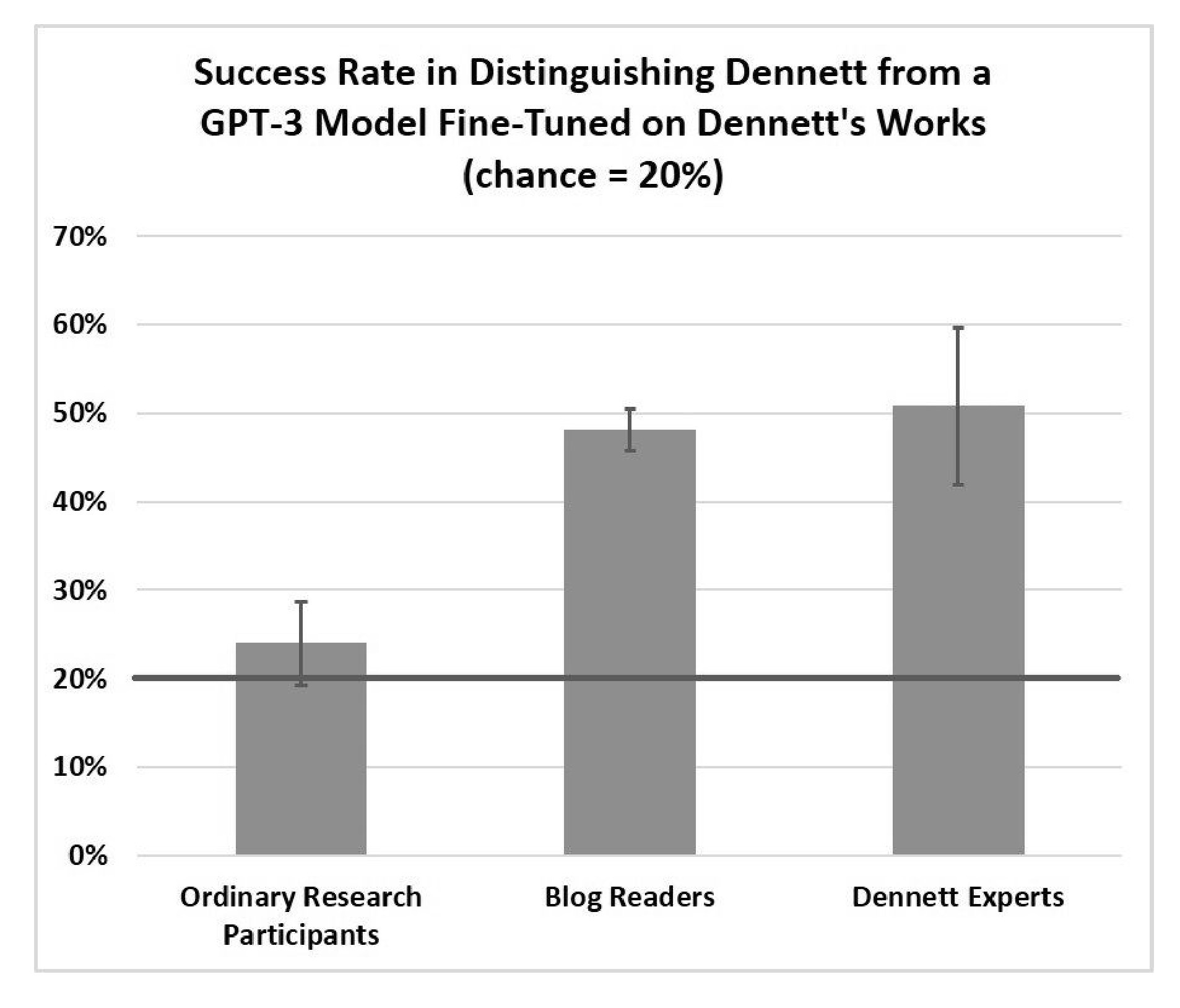

“Creating a Large Language Model of a Philosopher” — Tries to answer the question: “Can large language models be trained to produce philosophical texts that are difficult to distinguish from texts produced by human philosophers?” by fine-tuning OpenAI's GPT-3 with the works of philosopher Daniel C. Dennett.

Paper: https://arxiv.org/abs/2302.01339

#AI #CL #NewPaper #DeepLearning #MachineLearning #Language

<<Find this useful? Please boost so that others can benefit too 🙂>>

A graph showing the success rat…

Fahim Farook

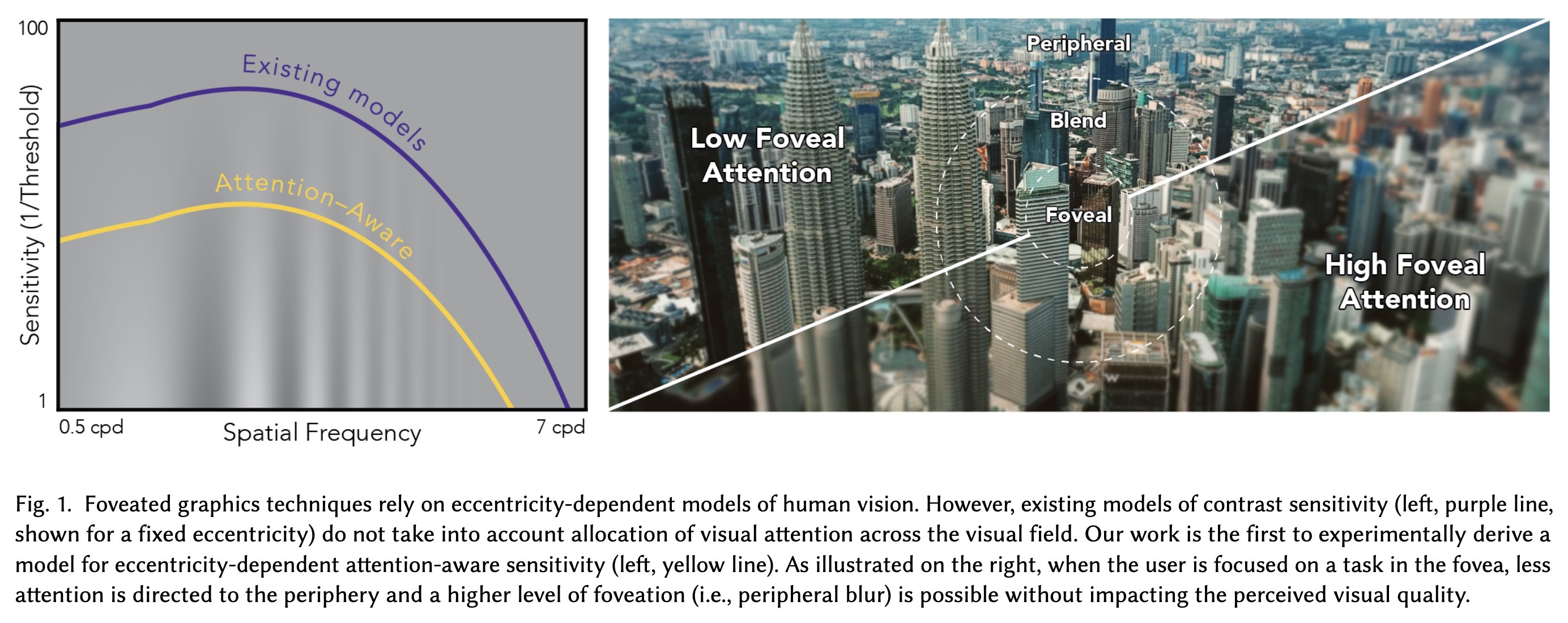

“Towards Attention-aware Rendering for Virtual and Augmented Reality” — An attention-aware model of contrast sensitivity, based on measurements of contrast sensitivity under different attention distributions, which show that sensitivity in the periphery drops significantly when the user has to allocate attention to the fovea.

Paper: https://arxiv.org/abs/2302.01368

#NewPaper #HumanComputerInteraction #Graphics #VR #AR #ImageProcessing #VideoProcessing

<<Find this useful? Please boost so that others can benefit too 🙂>>

Foveated graphics techniques re…

Fahim Farook

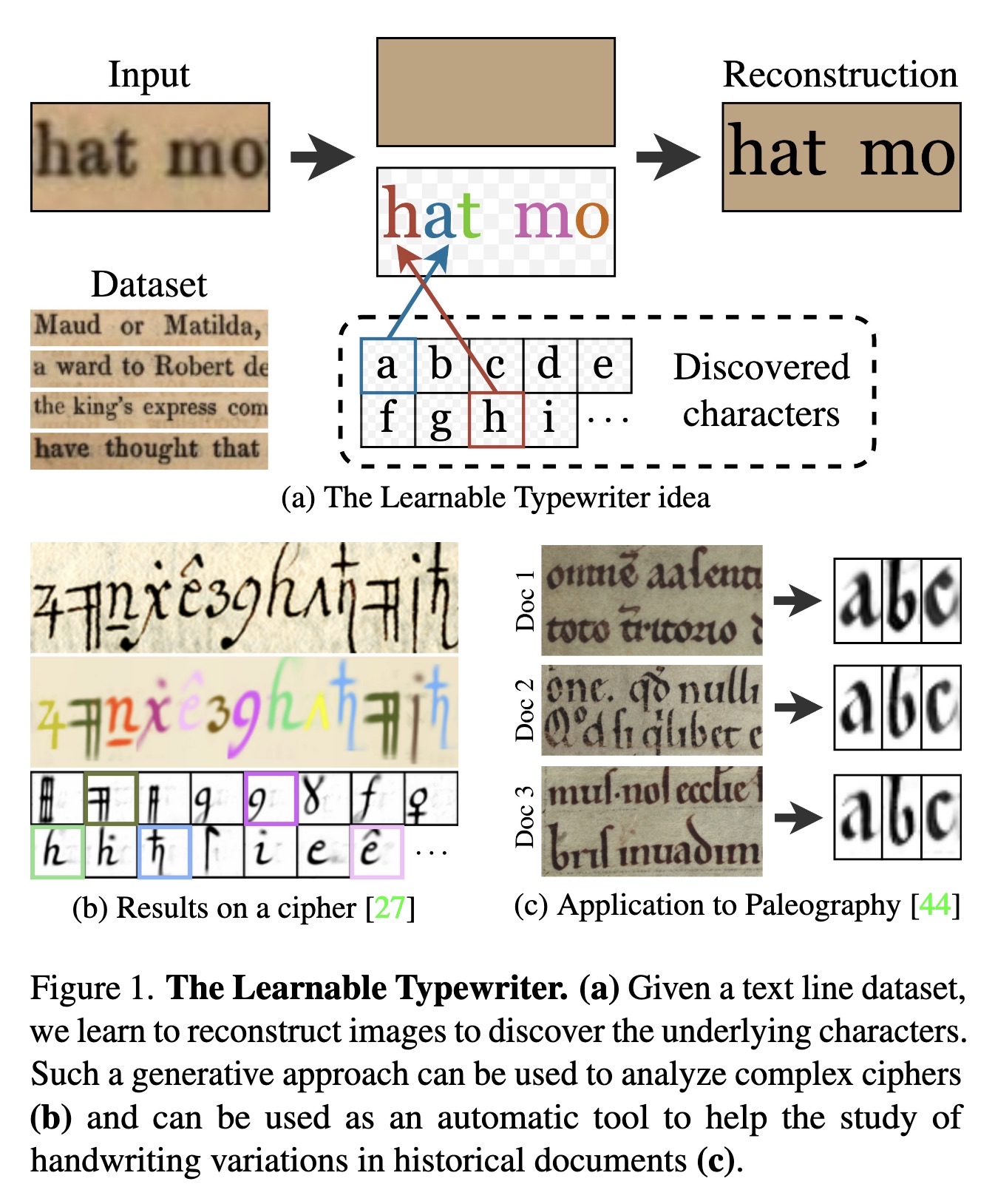

"The Learnable Typewriter: A Generative Approach to Text Line Analysis. (arXiv:2302.01660v1 [cs.CV])" — A generative document-specific approach to character analysis and recognition in text lines which builds on unsupervised multi-object segmentation methods, and in particular, those that reconstruct images based on a limited amount of visual elements, called sprites. This approach can learn a large number of different characters and leverage line-level annotations when available.

Paper: http://arxiv.org/abs/2302.01660

Code: https://github.com/ysig/learnable-typewriter

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

The Learnable Typewriter. (a) G…
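The sprite idea can be illustrated with a minimal compositing sketch, assuming each sprite is a grayscale alpha mask with a single colour and that a text line is rendered by alpha-blending sprites at given horizontal offsets. In an approach like this, the sprites and their placements are learned so that the rendered line reconstructs the input; here they are hand-made, and the function and data layout are hypothetical.

```python
import numpy as np


def composite_line(height, width, sprites, placements, background=0.0):
    """Paint sprites left to right onto a blank line image using per-pixel alpha blending.

    sprites: dict name -> (alpha, colour), where alpha is a (height, w) array in [0, 1]
    placements: list of (name, x_offset) pairs, e.g. one per character in the line
    """
    canvas = np.full((height, width), background, dtype=float)
    for name, x in placements:
        alpha, colour = sprites[name]
        w = alpha.shape[1]
        region = canvas[:, x:x + w]
        a = alpha[:, :region.shape[1]]     # clip sprites that run past the right edge
        canvas[:, x:x + w] = a * colour + (1 - a) * region
    return canvas


if __name__ == "__main__":
    H = 4
    sprites = {"i": (np.ones((H, 1)), 1.0), "o": (np.full((H, 2), 0.5), 1.0)}
    line = composite_line(H, 6, sprites, [("i", 0), ("o", 2), ("o", 5)])
    print(line)
```

Reading a line then amounts to inverting this renderer: choosing which sprites, at which offsets, best reproduce the observed pixels.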

Fahim Farook

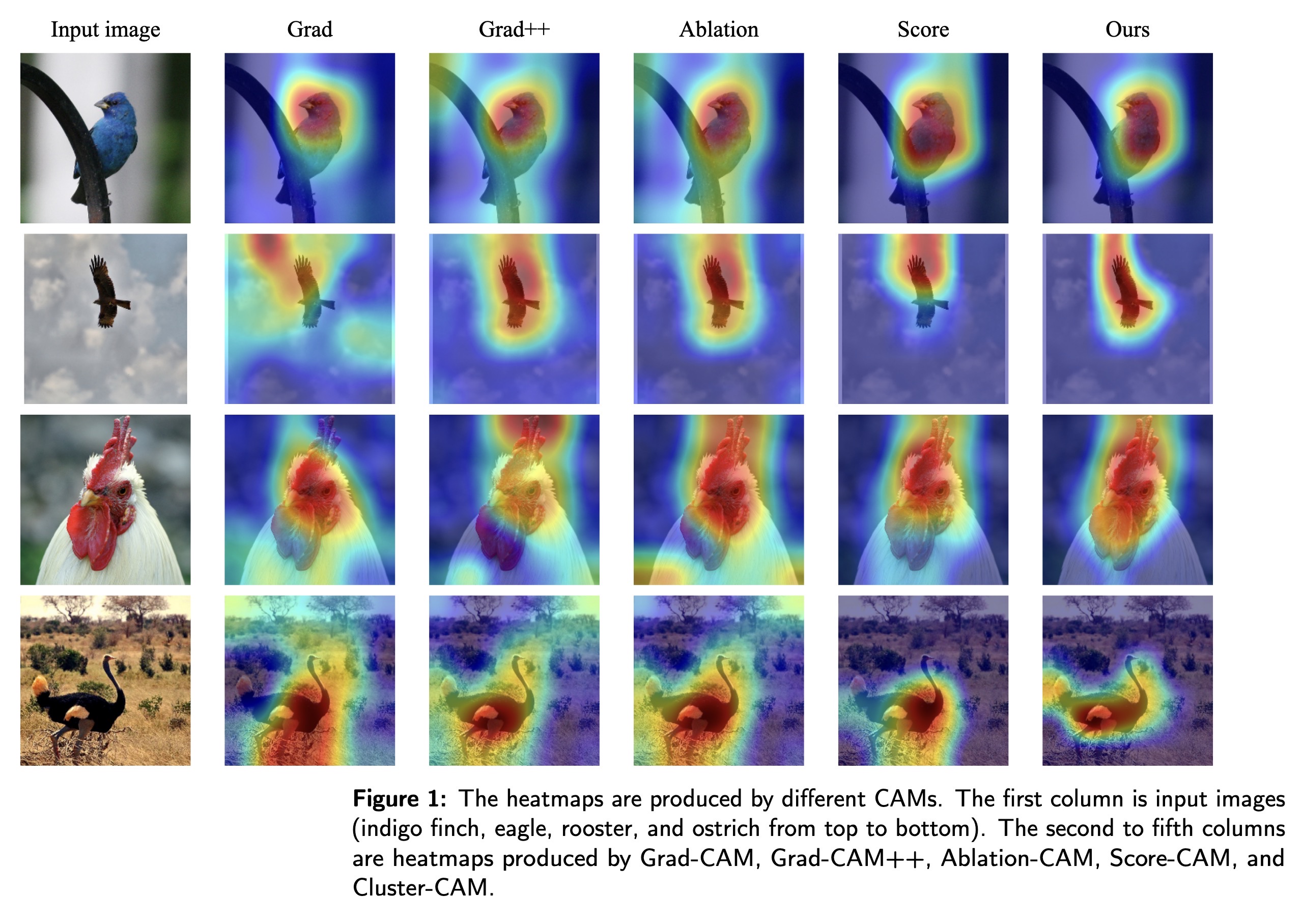

"Cluster-CAM: Cluster-Weighted Visual Interpretation of CNNs' Decision in Image Classification. (arXiv:2302.01642v1 [cs.CV])" — An effective and efficient gradient-free Convolutional Neural Network (CNN) interpretation algorithm which can significantly reduce the times of forward propagation by splitting the feature maps into clusters in an unsupervised manner.

Paper: http://arxiv.org/abs/2302.01642

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

The heatmaps are produced by di…
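A simplified sketch of the idea behind cluster-based, gradient-free CAM: group the C feature maps with k-means, merge each cluster into one mask, and spend one forward pass per cluster (rather than one per channel, as in Score-CAM-style methods) to score how much each masked image supports the target class. The weighting below (score of the masked image minus the score of a blank image) is a simplifying assumption, not the paper's exact formulation, and `score_fn` is a hypothetical stand-in for a real CNN.

```python
import numpy as np


def kmeans(data, k, rng, n_iter=20):
    """Plain k-means on the rows of `data`; returns one integer cluster label per row."""
    centers = data[rng.choice(len(data), size=k, replace=False)]
    for _ in range(n_iter):
        d = ((data[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        labels = d.argmin(1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = data[labels == j].mean(0)
    return labels


def normalize(m):
    """Rescale to [0, 1]; a constant map becomes all zeros."""
    lo, hi = m.min(), m.max()
    return (m - lo) / (hi - lo) if hi > lo else np.zeros_like(m)


def cluster_cam(image, feature_maps, score_fn, n_clusters, rng):
    """image: (H, W); feature_maps: (C, H, W), already upsampled to image size;
    score_fn: hypothetical callable returning the target-class score for an image."""
    c = feature_maps.shape[0]
    labels = kmeans(feature_maps.reshape(c, -1), n_clusters, rng)
    baseline = score_fn(np.zeros_like(image))
    cam = np.zeros_like(image, dtype=float)
    for j in range(n_clusters):
        members = labels == j
        if not members.any():
            continue
        mask = normalize(feature_maps[members].sum(0))   # one merged mask per cluster
        weight = score_fn(image * mask) - baseline       # one forward pass per cluster
        cam += weight * mask
    return normalize(np.maximum(cam, 0))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = np.ones((8, 8))
    fm = np.zeros((6, 8, 8))
    fm[:3, :4, :4] = 1.0 + 0.1 * rng.random((3, 4, 4))   # channels firing on the top-left
    fm[3:, 4:, 4:] = 1.0 + 0.1 * rng.random((3, 4, 4))   # channels firing on the bottom-right
    toy_score = lambda x: float(x[:4, :4].sum())         # toy "class" lives in the top-left
    print(np.round(cluster_cam(img, fm, toy_score, n_clusters=2, rng=rng), 2))
```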

Fahim Farook

"CTE: A Dataset for Contextualized Table Extraction. (arXiv:2302.01451v1 [cs.CL])" — A task that aims to extract and define the structure of tables while taking the textual context of the document into account, to support document layout analysis and table understanding. The accompanying dataset comprises 75k fully annotated pages of scientific papers, including more than 35k tables.

Paper: http://arxiv.org/abs/2302.01451

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Example page (content is intent…

Fahim Farook

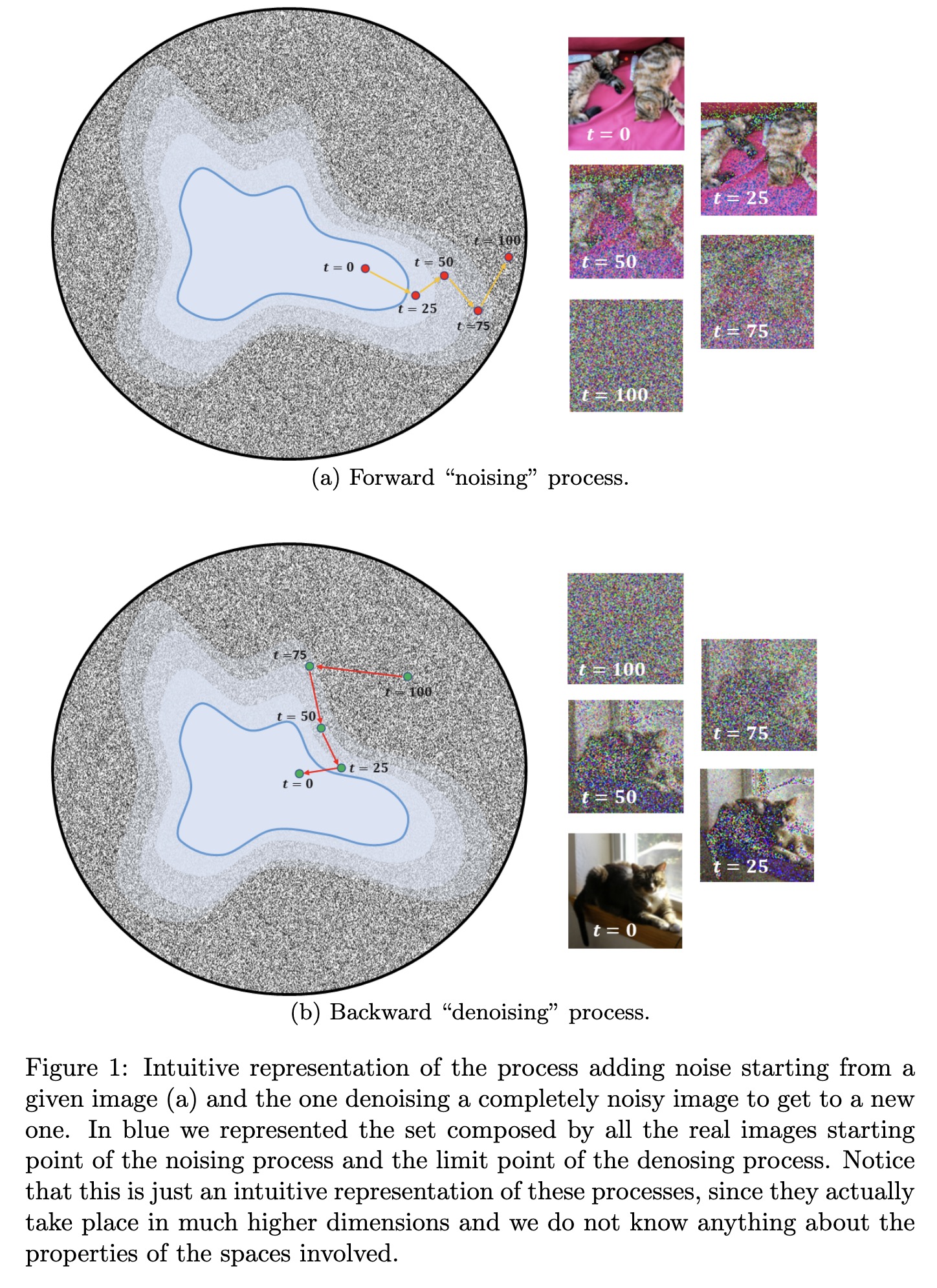

"Understanding and contextualising diffusion models. (arXiv:2302.01394v1 [cs.CV])" — An explanation about how common diffusion models work by focusing on the mathematical theory behind them, i.e. without analyzing in detail the specific implementations and related methods.

Paper: http://arxiv.org/abs/2302.01394

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Intuitive representation of the…
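A central identity in most treatments of DDPM-style diffusion is that the forward noising chain x_t = sqrt(1 − β_t) x_{t−1} + sqrt(β_t) ε can be sampled in one shot: q(x_t | x_0) = N(sqrt(ᾱ_t) x_0, (1 − ᾱ_t) I) with ᾱ_t = ∏_{s≤t} (1 − β_s). This small NumPy sketch compares the two routes, using the linear β schedule from the original DDPM paper (1e-4 to 0.02 over 1000 steps); the function names are illustrative.

```python
import numpy as np


def ddpm_alpha_bar(T=1000, beta_start=1e-4, beta_end=0.02):
    """abar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)


def q_sample(x0, t, alpha_bar, rng):
    """Closed form: draw x_t ~ N(sqrt(abar_t) x0, (1 - abar_t) I) directly; also return the noise used."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps, eps


def iterative_forward(x0, t, rng, T=1000, beta_start=1e-4, beta_end=0.02):
    """Step-by-step route: apply x_s = sqrt(1 - beta_s) x_{s-1} + sqrt(beta_s) eps for s = 0..t."""
    betas = np.linspace(beta_start, beta_end, T)
    x = x0.copy()
    for s in range(t + 1):
        x = np.sqrt(1.0 - betas[s]) * x + np.sqrt(betas[s]) * rng.standard_normal(x.shape)
    return x


if __name__ == "__main__":
    ab = ddpm_alpha_bar()
    rng = np.random.default_rng(0)
    x0, t = np.full(50_000, 3.0), 299
    closed, _ = q_sample(x0, t, ab, rng)
    stepwise = iterative_forward(x0, t, rng)
    print(f"closed form: mean={closed.mean():.3f} var={closed.var():.3f}")
    print(f"step-wise:   mean={stepwise.mean():.3f} var={stepwise.var():.3f}")
```

Both routes should agree in distribution, which is why training can jump straight to a random timestep instead of simulating the whole chain.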
