I'm currently working on my second novel, which is complete but in the editing stage. I wrote my first novel over 20 years ago but didn't write much until now.

I post about #Coding, #Flutter, #Writing, #Movies and #TV. I'll also talk about #Technology, #Gadgets, #MachineLearning, #DeepLearning and a few other things as the fancy strikes ...

Lived in: 🇱🇰🇸🇦🇺🇸🇳🇿🇸🇬🇲🇾🇦🇪🇫🇷🇪🇸🇵🇹🇶🇦🇨🇦

Fahim Farook

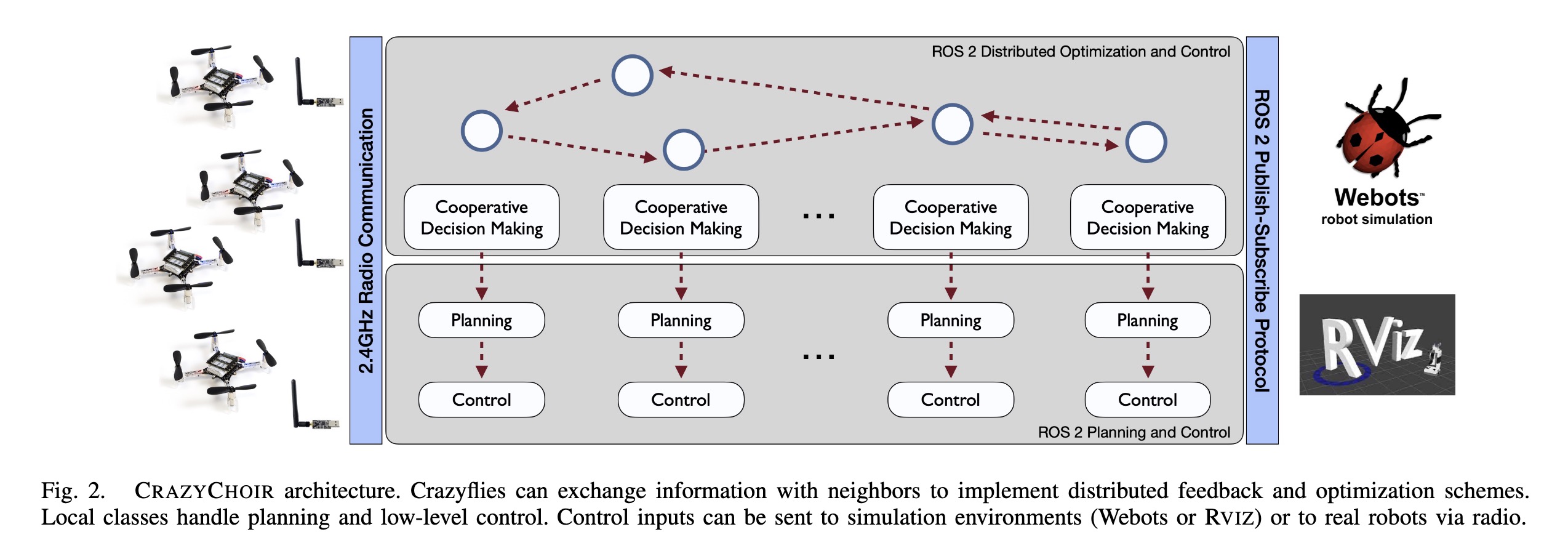

"CrazyChoir: Flying Swarms of Crazyflie Quadrotors in ROS 2" — A modular Python framework based on the Robot Operating System (ROS) 2 which provides a comprehensive set of functionalities to simulate and run experiments on teams of cooperating Crazyflie nano-quadrotors.

Paper: https://arxiv.org/abs/2302.00716

#NewPaper #Robotics

<<Find this useful? Please boost so that others can benefit too 🙂>>

CRAZYCHOIR architecture. Crazyf…

Fahim Farook

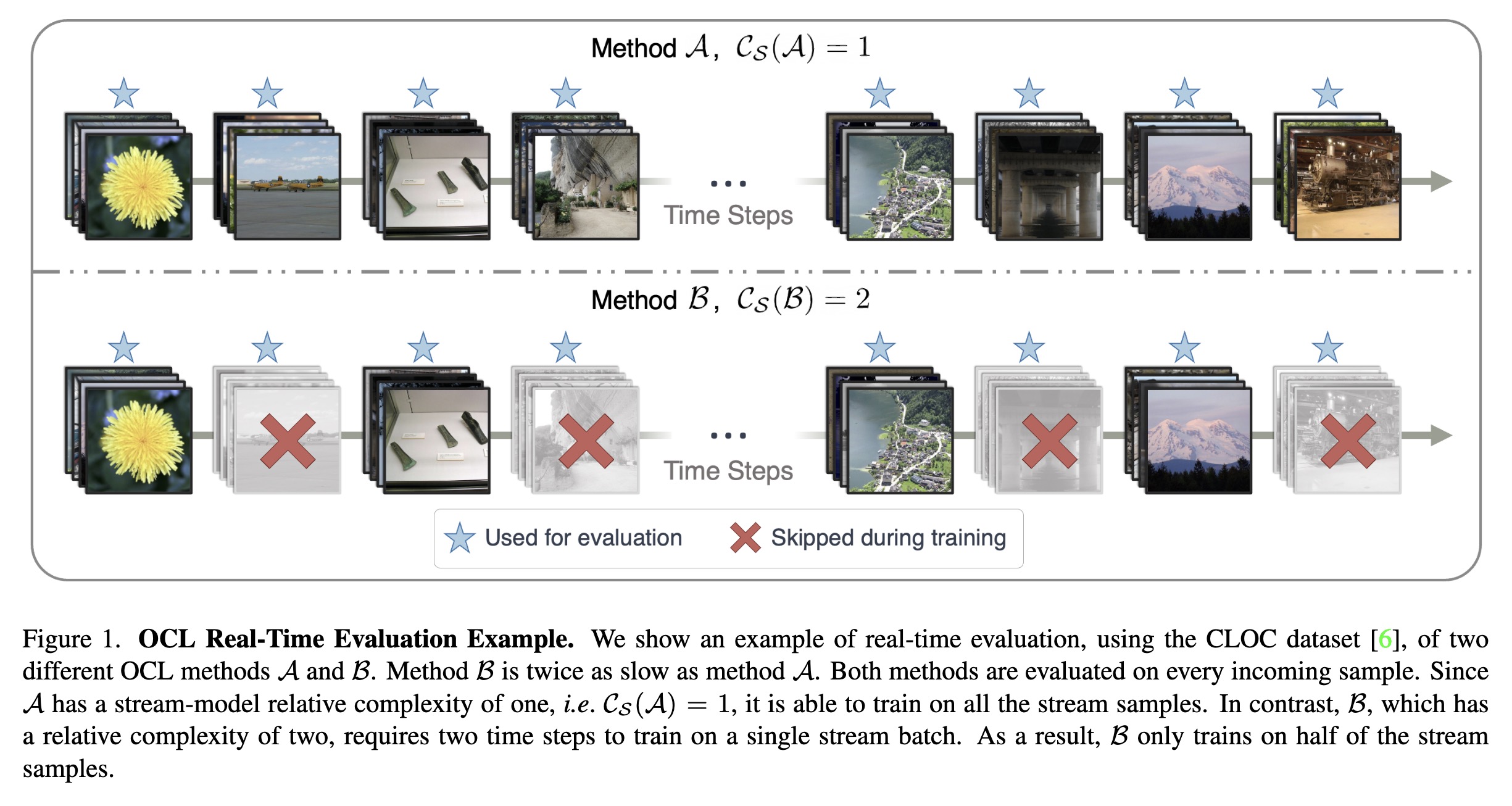

"Real-Time Evaluation in Online Continual Learning: A New Paradigm. (arXiv:2302.01047v1 [cs.LG])" — A practical real-time evaluation of continual learning, in which the stream does not wait for the model to complete training before revealing the next data for predictions.

Paper: http://arxiv.org/abs/2302.01047

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

OCL Real-Time Evaluation Exampl…
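
The core idea of the real-time protocol can be shown with a toy simulation. This is a minimal sketch, not the paper's benchmark: the stream, the running-mean "model" and the `cost` parameter (how many stream steps one training update takes) are all hypothetical. The learner must predict on every incoming sample with whatever parameters it currently has, so a slower learner ends up training on fewer samples.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RunningMeanModel:
    """Hypothetical toy learner: predicts the mean of the samples it has trained on."""
    total: float = 0.0
    count: int = 0

    def predict(self) -> float:
        return self.total / self.count if self.count else 0.0

    def train(self, value: float) -> None:
        self.total += value
        self.count += 1


def real_time_eval(stream: list[float], cost: int) -> tuple[float, int]:
    """Predict on every sample, but start a training update only when the
    previous one (which occupies `cost` stream steps) has finished.

    Returns (mean absolute error over the stream, number of samples trained on).
    """
    model = RunningMeanModel()
    busy_until = 0  # stream index at which the learner becomes free again
    abs_errors = []
    for t, x in enumerate(stream):
        # The stream never waits: evaluate with the current, possibly stale, model.
        abs_errors.append(abs(model.predict() - x))
        if t >= busy_until:
            # Learner is free: train on this sample and stay busy for `cost` steps.
            model.train(x)
            busy_until = t + cost
    return sum(abs_errors) / len(abs_errors), model.count
```

For example, on a 30-sample stream a learner with `cost=1` trains on every sample, while one with `cost=3` trains on only every third sample but is still scored on all 30.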

Fahim Farook

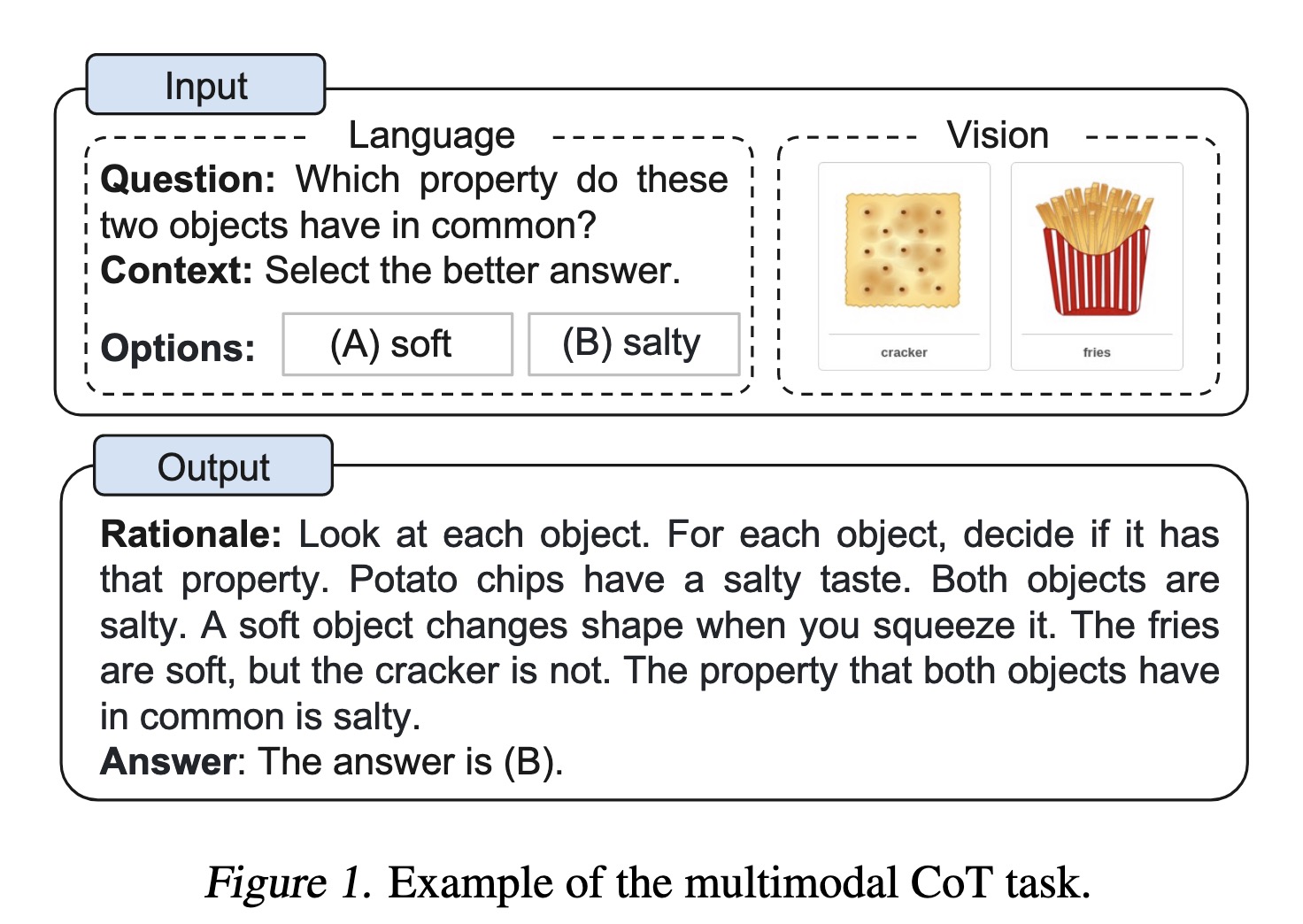

"Multimodal Chain-of-Thought Reasoning in Language Models. (arXiv:2302.00923v1 [cs.CL])" — A Multimodal Chain-of-Thought that incorporates vision features in a decoupled training framework which separates the rationale generation and answer inference into two stages and incorporates vision features in both stages.

Paper: http://arxiv.org/abs/2302.00923

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Example of the multimodal CoT t…

Fahim Farook

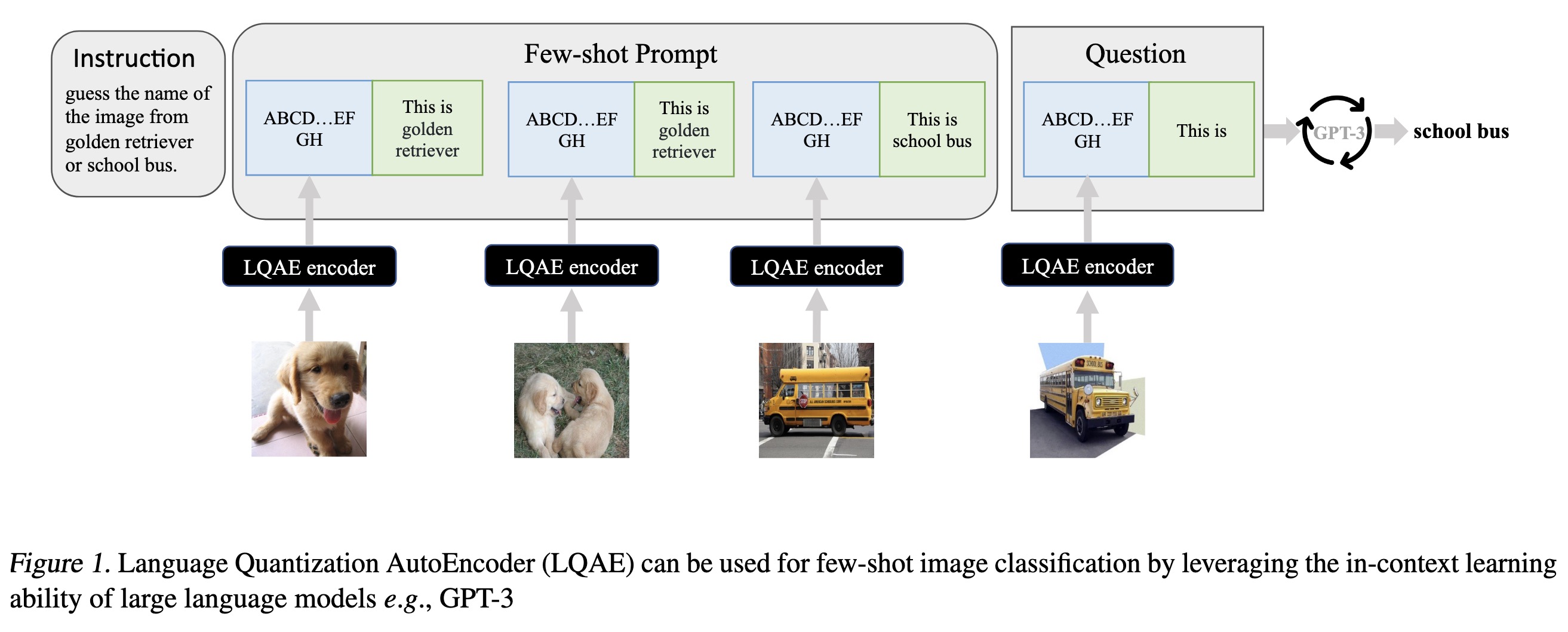

"Language Quantized AutoEncoders: Towards Unsupervised Text-Image Alignment. (arXiv:2302.00902v1 [cs.LG])" — A modification of VQ-VAE that learns to align text-image data in an unsupervised manner by leveraging pretrained language models (e.g., BERT, RoBERTa).

Paper: http://arxiv.org/abs/2302.00902

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Language Quantization AutoEnco…
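
The "language quantization" step can be pictured as ordinary VQ-VAE nearest-neighbour quantization in which the codebook is a frozen set of pretrained token embeddings rather than a learned one. A minimal sketch under that assumption: the three-word vocabulary and its 2-D embeddings are hypothetical (real models such as RoBERTa use hundreds of dimensions), and the image encoder, decoder and language-model denoising objective are omitted.

```python
from __future__ import annotations

import math

# Hypothetical frozen codebook: token -> embedding, standing in for a pretrained LM's vocabulary.
TOKEN_EMBEDDINGS: dict[str, list[float]] = {
    "cat": [1.0, 0.0],
    "dog": [0.0, 1.0],
    "sky": [-1.0, 0.0],
}


def quantize(features: list[list[float]]) -> list[str]:
    """Map each continuous feature vector to the nearest token embedding (L2 distance).

    The codebook is never updated, so every code is an actual word of the vocabulary.
    """
    tokens = []
    for f in features:
        nearest = min(TOKEN_EMBEDDINGS, key=lambda tok: math.dist(f, TOKEN_EMBEDDINGS[tok]))
        tokens.append(nearest)
    return tokens
```

Because the codes are real tokens, the quantized image can be fed directly to the text model, which is what makes unsupervised text-image alignment possible in this setup.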

Fahim Farook

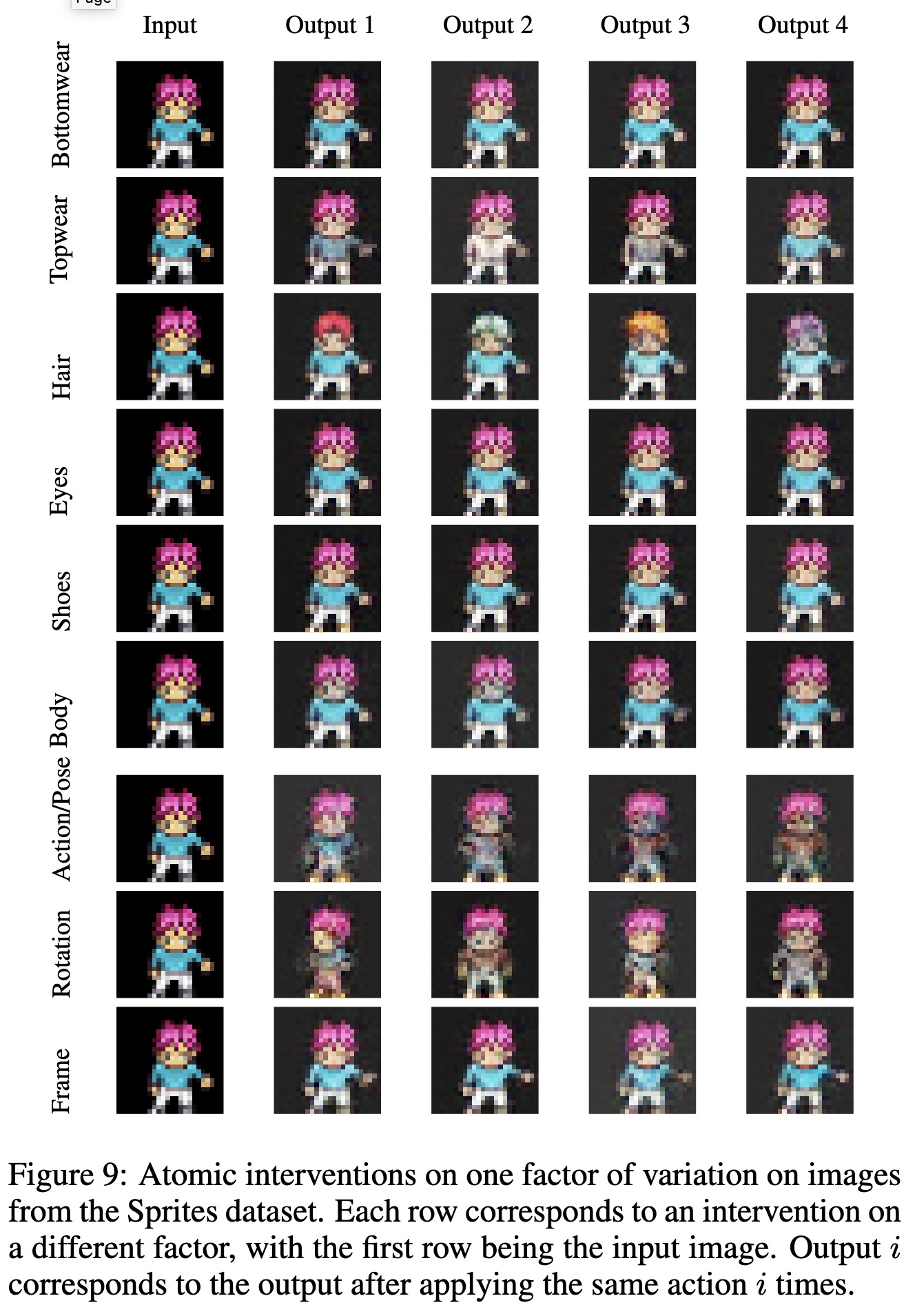

"Disentanglement of Latent Representations via Sparse Causal Interventions. (arXiv:2302.00869v1 [cs.LG])" — A new method for disentanglement inspired by causal dynamics that combines causality theory with vector-quantized variational autoencoders where the model considers the quantized vectors as causal variables and links them in a causal graph.

Paper: http://arxiv.org/abs/2302.00869

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Atomic interventions on one fac…

Fahim Farook

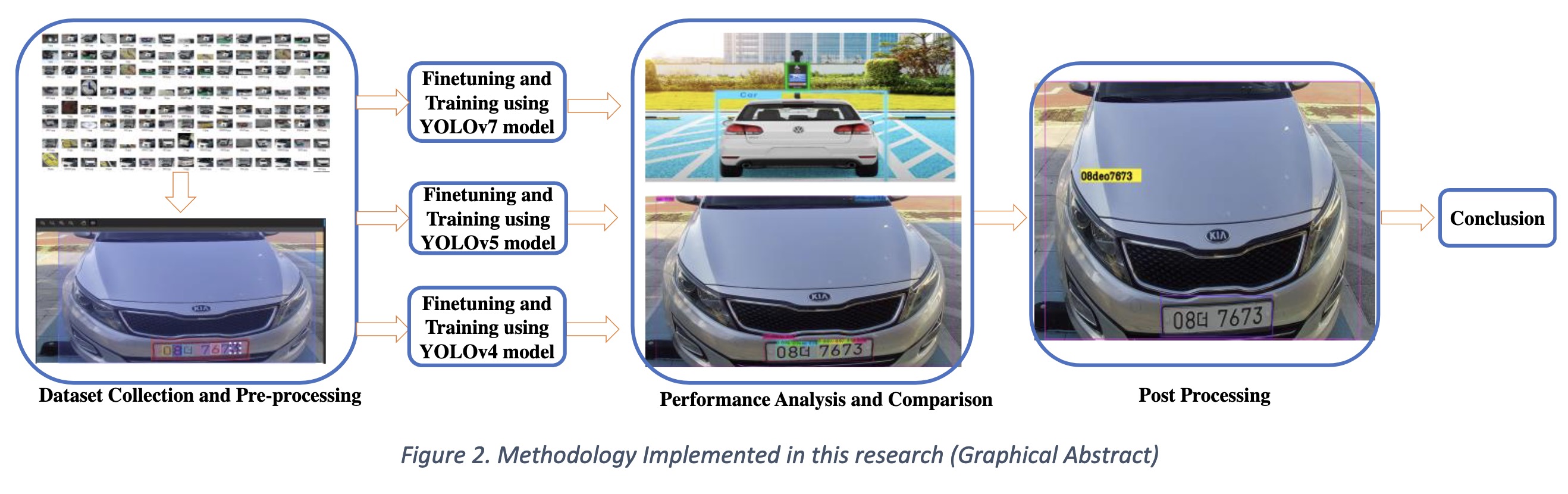

"SHINE: Deep Learning-Based Accessible Parking Management System. (arXiv:2302.00837v1 [cs.CV])" — A system which uses deep learning object detection algorithms to detect the vehicle, license plate, and disability badges and then authenticates the rights to use the accessible parking spaces by coordinating with a central server.

Paper: http://arxiv.org/abs/2302.00837

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

An overview of the methodology

Fahim Farook

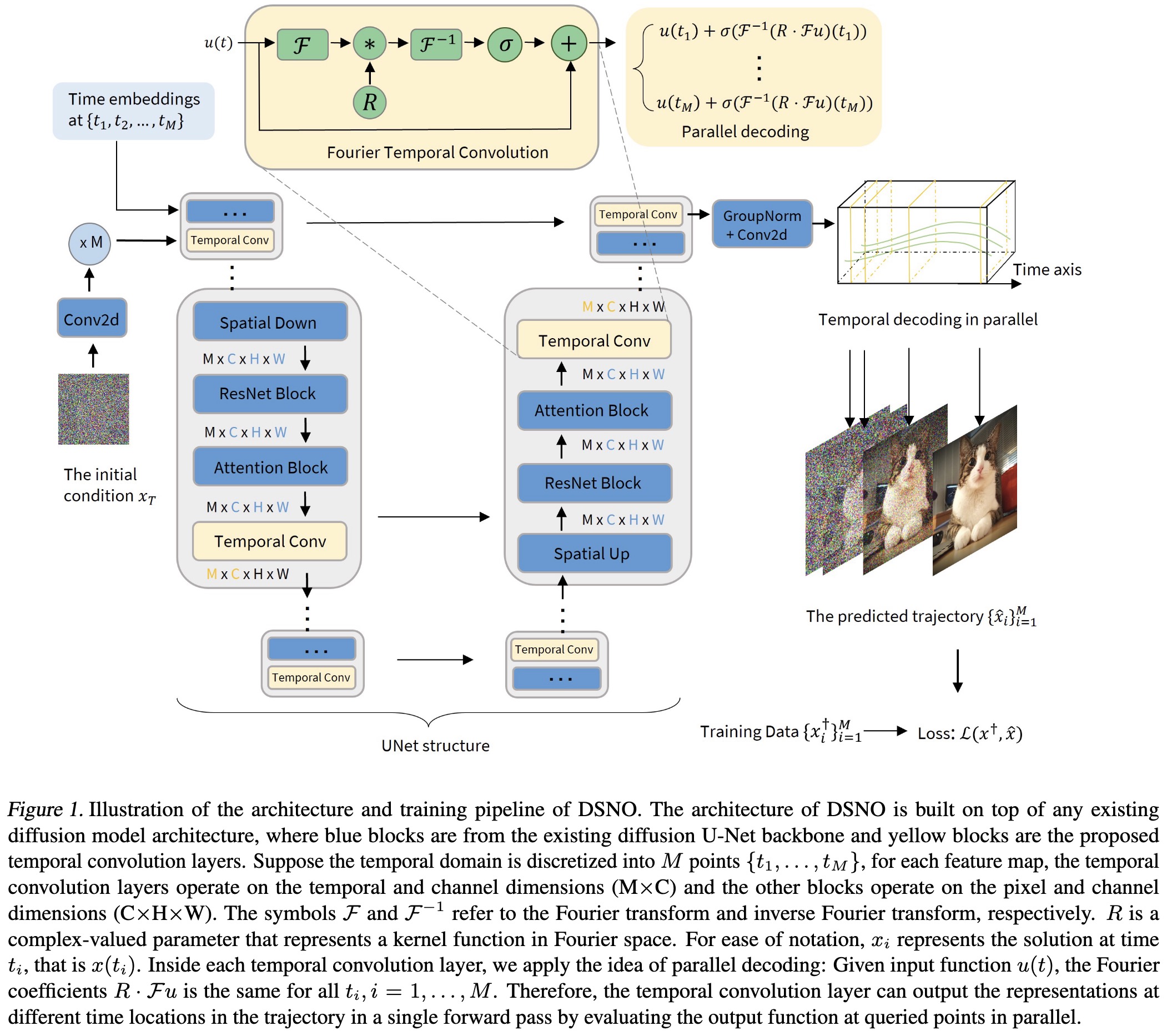

"Fast Sampling of Diffusion Models via Operator Learning. (arXiv:2211.13449v2 [cs.LG] UPDATED)" — Accelerating the sampling process of diffusion models using neural operators, an efficient method to solve the probability flow differential equations.

Paper: http://arxiv.org/abs/2211.13449

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Illustration of the architectur…
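
For context, here is why solving the probability flow ODE step by step is slow. A minimal sketch of the conventional baseline solver, not the paper's neural operator, assuming a 1-D Gaussian data distribution N(0, s0²) and a variance-exploding schedule σ(t) = t, chosen only because the score is then known in closed form. Few large Euler steps give a badly wrong sample; many small steps are needed, and that per-step cost is what operator learning aims to remove.

```python
import math


def pf_ode_velocity(x: float, t: float, s0: float) -> float:
    """dx/dt of the probability flow ODE for sigma(t) = t and data ~ N(0, s0^2).

    p_t = N(0, s0^2 + t^2), so the score is -x / (s0^2 + t^2), and g(t)^2 = d(sigma^2)/dt = 2t.
    dx/dt = -0.5 * g(t)^2 * score = t * x / (s0^2 + t^2).
    """
    return t * x / (s0**2 + t**2)


def euler_sample(x_T: float, T: float, s0: float, n_steps: int) -> float:
    """Integrate the ODE backwards from t = T to t = 0 with explicit Euler steps."""
    dt = T / n_steps
    x, t = x_T, T
    for _ in range(n_steps):
        x -= dt * pf_ode_velocity(x, t, s0)
        t -= dt
    return x


def exact_solution(x_T: float, T: float, s0: float) -> float:
    """Closed-form x(0) for this toy ODE, used to measure solver error."""
    return x_T * math.sqrt(s0**2 / (s0**2 + T**2))
```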

Fahim Farook

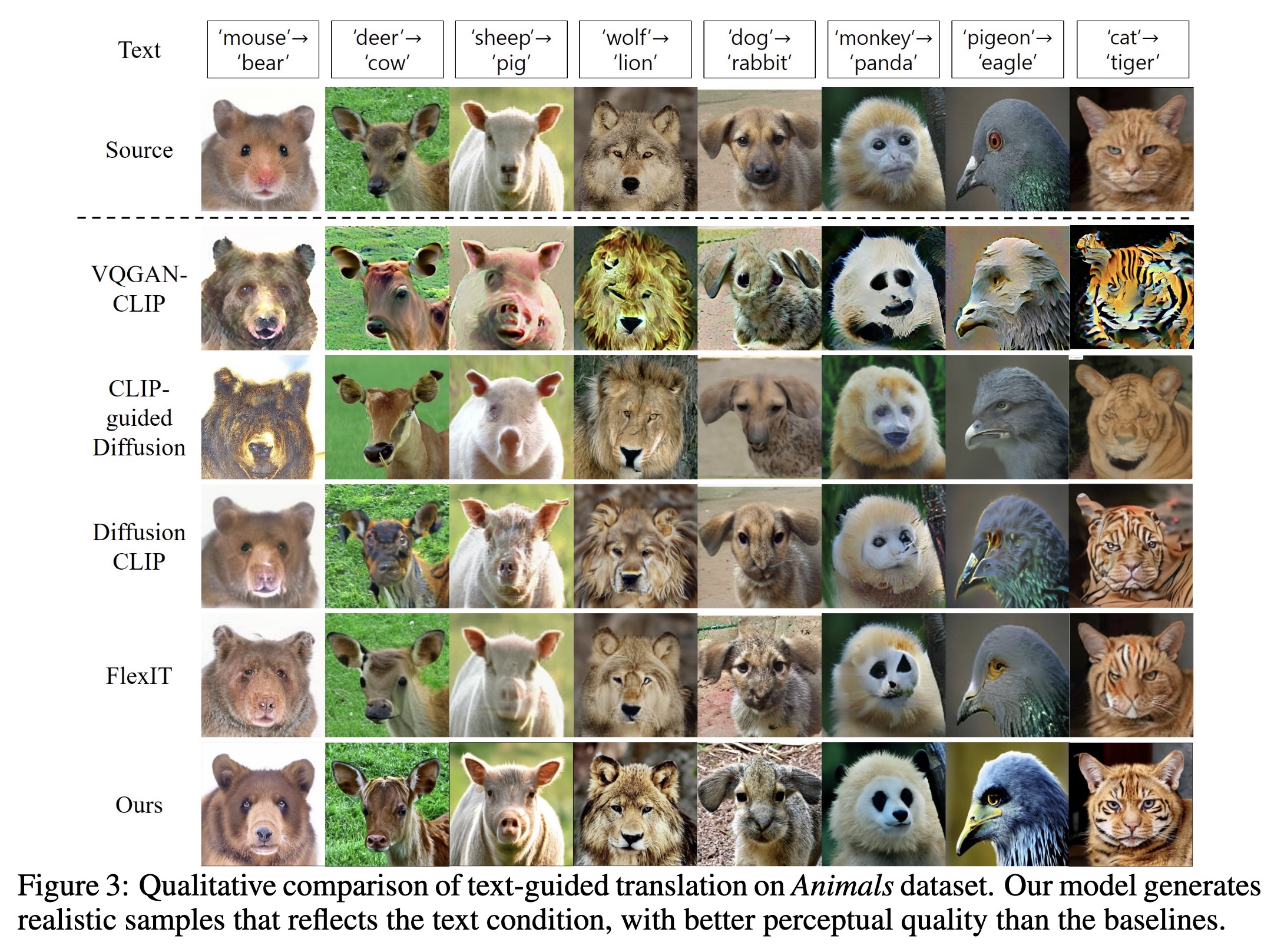

"Diffusion-based Image Translation using Disentangled Style and Content Representation. (arXiv:2209.15264v2 [cs.CV] UPDATED)" — A novel diffusion-based unsupervised image translation method using disentangled style and content representation inspired by the splicing Vision Transformer.

Paper: http://arxiv.org/abs/2209.15264

Code: https://github.com/cyclomon/DiffuseIT

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Qualitative comparison of text-…

Fahim Farook

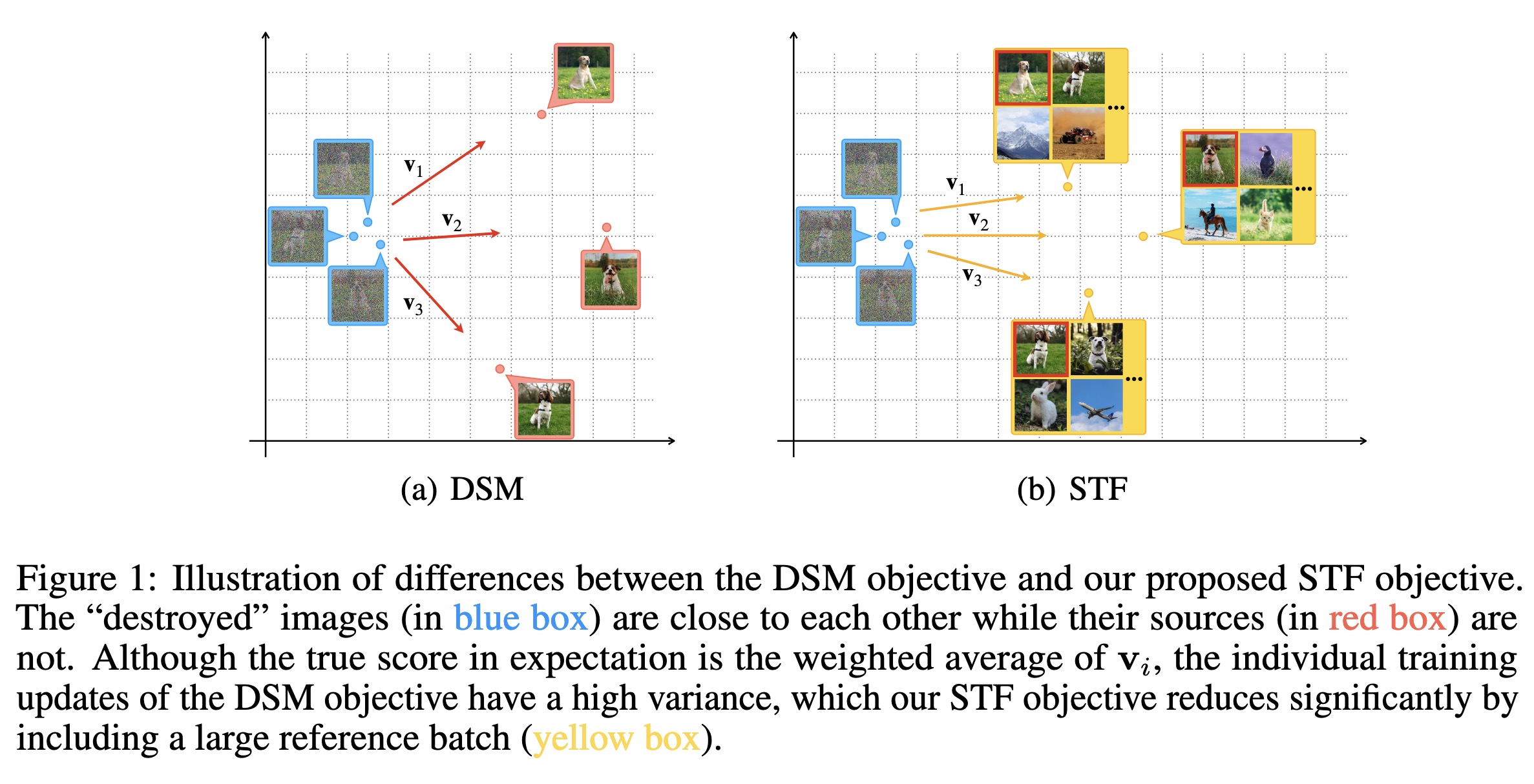

"Stable Target Field for Reduced Variance Score Estimation in Diffusion Models. (arXiv:2302.00670v1 [cs.LG])" — A method to improve diffusion models by by reducing the variance of the training targets in their denoising score-matching objective. This is achieved by incorporating a reference batch which is used to calculate weighted conditional scores as more stable training targets.

Paper: http://arxiv.org/abs/2302.00670

Code: https://github.com/Newbeeer/stf

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Illustration of differences bet…
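
The weighted conditional score can be written out directly. A minimal sketch, assuming the common variance-exploding perturbation x = y + σε, so each conditional score is (yᵢ − x)/σ², and plain Python lists as vectors. Each conditional score is weighted by p(x | yᵢ) normalized over the reference batch, which for Gaussian kernels is a softmax of −‖x − yᵢ‖²/(2σ²). The reference batch is assumed to contain the clean sample that produced x.

```python
from __future__ import annotations

import math


def stf_target(x: list[float], reference: list[list[float]], sigma: float) -> list[float]:
    """Weighted average of conditional scores (y_i - x) / sigma^2 over a reference batch."""
    # log p(x | y_i), up to a constant shared by every i.
    logits = [-math.dist(x, y) ** 2 / (2 * sigma**2) for y in reference]
    peak = max(logits)
    unnorm = [math.exp(lg - peak) for lg in logits]  # subtract the max for numerical stability
    total = sum(unnorm)
    target = [0.0] * len(x)
    for u, y in zip(unnorm, reference):
        w = u / total
        for d in range(len(x)):
            target[d] += w * (y[d] - x[d]) / sigma**2
    return target


def dsm_target(x: list[float], y: list[float], sigma: float) -> list[float]:
    """Standard denoising score-matching target: the conditional score of one clean sample y."""
    return [(yd - xd) / sigma**2 for xd, yd in zip(x, y)]
```

With a reference batch holding only the clean sample, the target reduces to the ordinary DSM target; larger batches average over every clean sample that could plausibly have produced x, which is where the variance reduction comes from.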

Fahim Farook

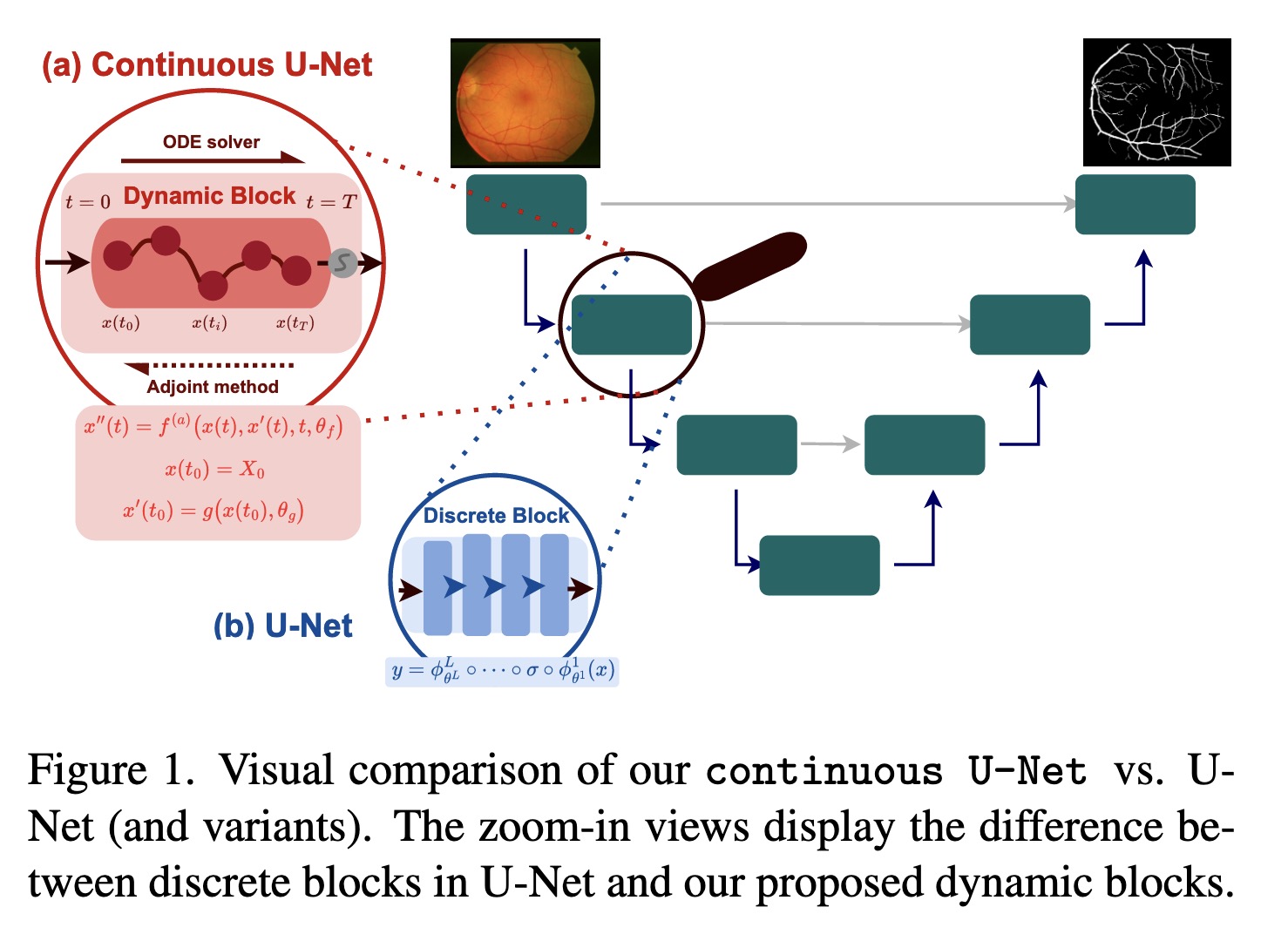

"Continuous U-Net: Faster, Greater and Noiseless. (arXiv:2302.00626v1 [cs.CV])" — A novel family of networks for image segmentation which is a continuous deep neural network that introduces new dynamic blocks modelled by second order ordinary differential equations to overcome some of the limitations in current U-Net architectures.

Paper: http://arxiv.org/abs/2302.00626

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Visual comparison of our contin…
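
A second-order ODE block is usually integrated by rewriting x'' = f(x, x', t) as a first-order system in (x, v) with v = x'. A minimal sketch of that rewrite, assuming a scalar state and a hand-written dynamics function; in the paper f would be a learned network acting on feature maps, and a proper ODE solver would likely replace the fixed-step semi-implicit Euler used here.

```python
from __future__ import annotations

import math
from typing import Callable


def integrate_second_order(
    f: Callable[[float, float, float], float],
    x0: float,
    v0: float,
    t1: float,
    n_steps: int,
) -> tuple[float, float]:
    """Integrate x'' = f(x, v, t) from t = 0 to t1 via the system x' = v, v' = f(x, v, t).

    Uses semi-implicit (symplectic) Euler: update the velocity first, then the
    position with the new velocity. Returns (x(t1), v(t1)).
    """
    dt = t1 / n_steps
    x, v, t = x0, v0, 0.0
    for _ in range(n_steps):
        v += dt * f(x, v, t)
        x += dt * v
        t += dt
    return x, v


if __name__ == "__main__":
    # Toy dynamics x'' = -x (harmonic oscillator): from x=1, v=0, x(pi) should be close to -1.
    x_pi, _ = integrate_second_order(lambda x, v, t: -x, 1.0, 0.0, math.pi, 10_000)
    print(x_pi)
```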

Fahim Farook

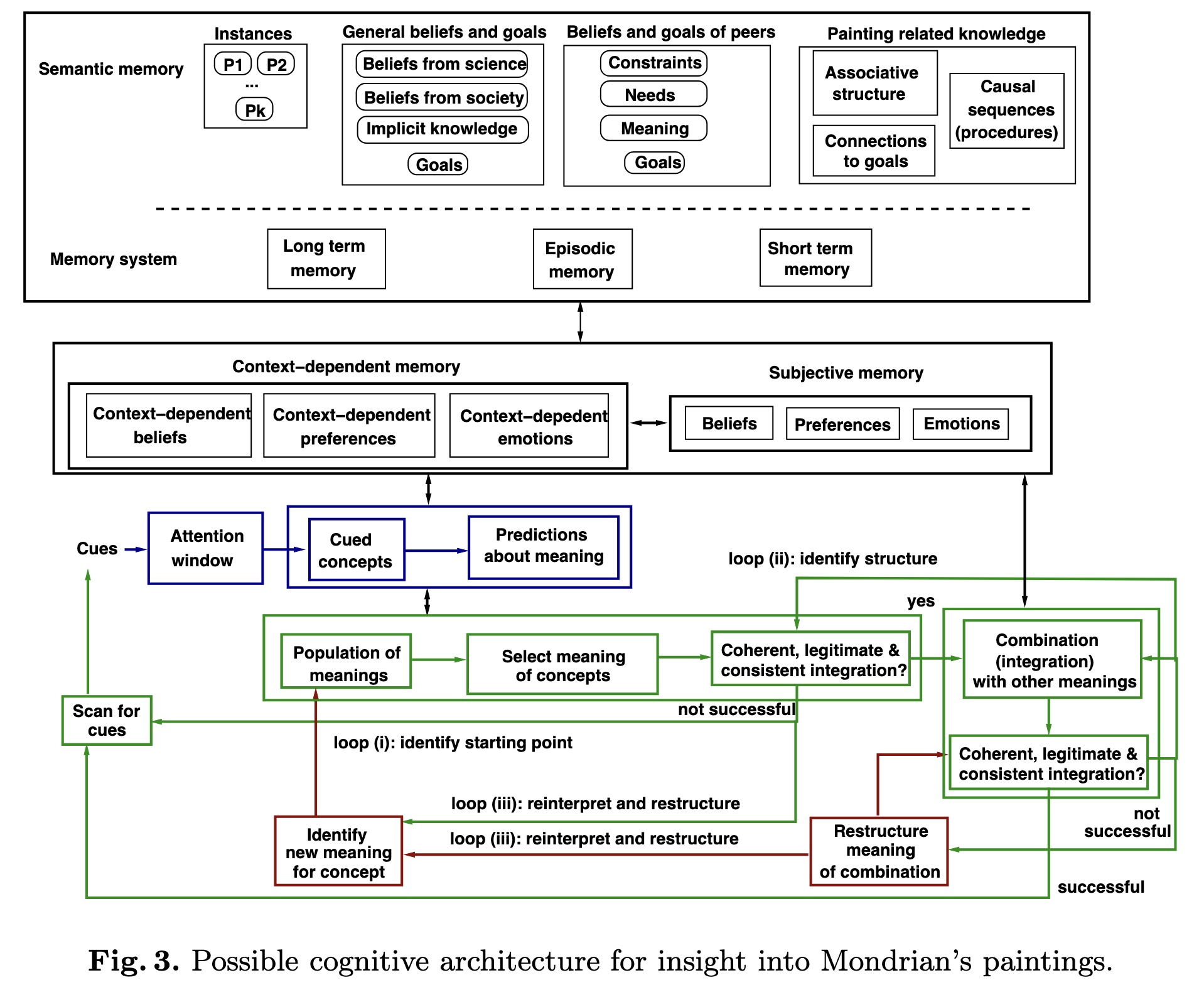

"Inching Towards Automated Understanding of the Meaning of Art: An Application to Computational Analysis of Mondrian's Artwork. (arXiv:2302.00594v1 [cs.CV])" — An attempt to identify capabilities that are related to semantic processing, a current limitation of Deep Neural Networks (DNN), which identifies the missing capabilities by comparing the process of understanding Mondrian's paintings with the process of understanding electronic circuit designs, another creative problem solving instance.

Paper: http://arxiv.org/abs/2302.00594

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Possible cognitive architecture…

Fahim Farook

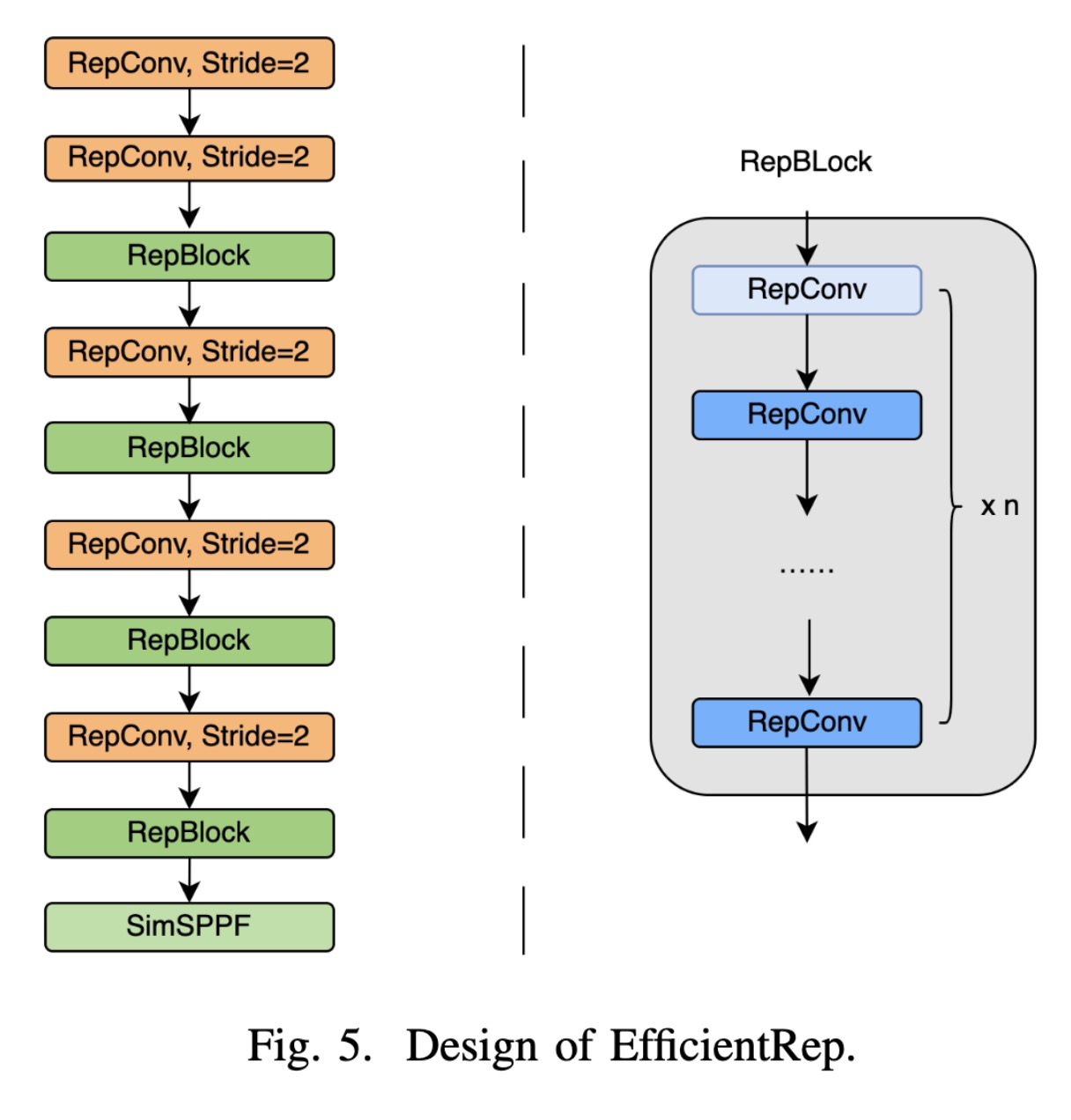

"EfficientRep:An Efficient Repvgg-style ConvNets with Hardware-aware Neural Network Design. (arXiv:2302.00386v1 [cs.CV])" — A hardware-efficient architecture of convolutional neural network, which has a repvgg-like architecture which is high-computation hardware(e.g. GPU) friendly.

Paper: http://arxiv.org/abs/2302.00386

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Design of EfficientRep
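
"RepVGG-style" refers to structural re-parameterization: at training time a block has parallel 3×3, 1×1 and identity branches, and at inference they are folded into a single 3×3 convolution, which runs efficiently on GPUs. A minimal 1-D sketch of the folding, assuming single-channel convolutions without bias or batch normalization (RepVGG also folds BN into the kernels, omitted here); the kernel values are arbitrary.

```python
from __future__ import annotations


def conv1d_same(signal: list[float], kernel: list[float]) -> list[float]:
    """Single-channel 1-D cross-correlation with zero padding; output length equals input length."""
    k = len(kernel)
    pad = k // 2
    padded = [0.0] * pad + signal + [0.0] * pad
    return [sum(kernel[j] * padded[i + j] for j in range(k)) for i in range(len(signal))]


def multi_branch(signal: list[float], k3: list[float], k1: float) -> list[float]:
    """Training-time block: 3-tap conv + 1-tap conv + identity, summed element-wise."""
    a = conv1d_same(signal, k3)
    b = conv1d_same(signal, [k1])
    return [ai + bi + si for ai, bi, si in zip(a, b, signal)]


def fuse(k3: list[float], k1: float) -> list[float]:
    """Inference-time kernel: fold the 1-tap conv and the identity into the centre tap."""
    return [k3[0], k3[1] + k1 + 1.0, k3[2]]
```

Running `conv1d_same(signal, fuse(k3, k1))` gives the same output as `multi_branch(signal, k3, k1)`, but as a single plain convolution with no branching at inference time.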

Fahim Farook

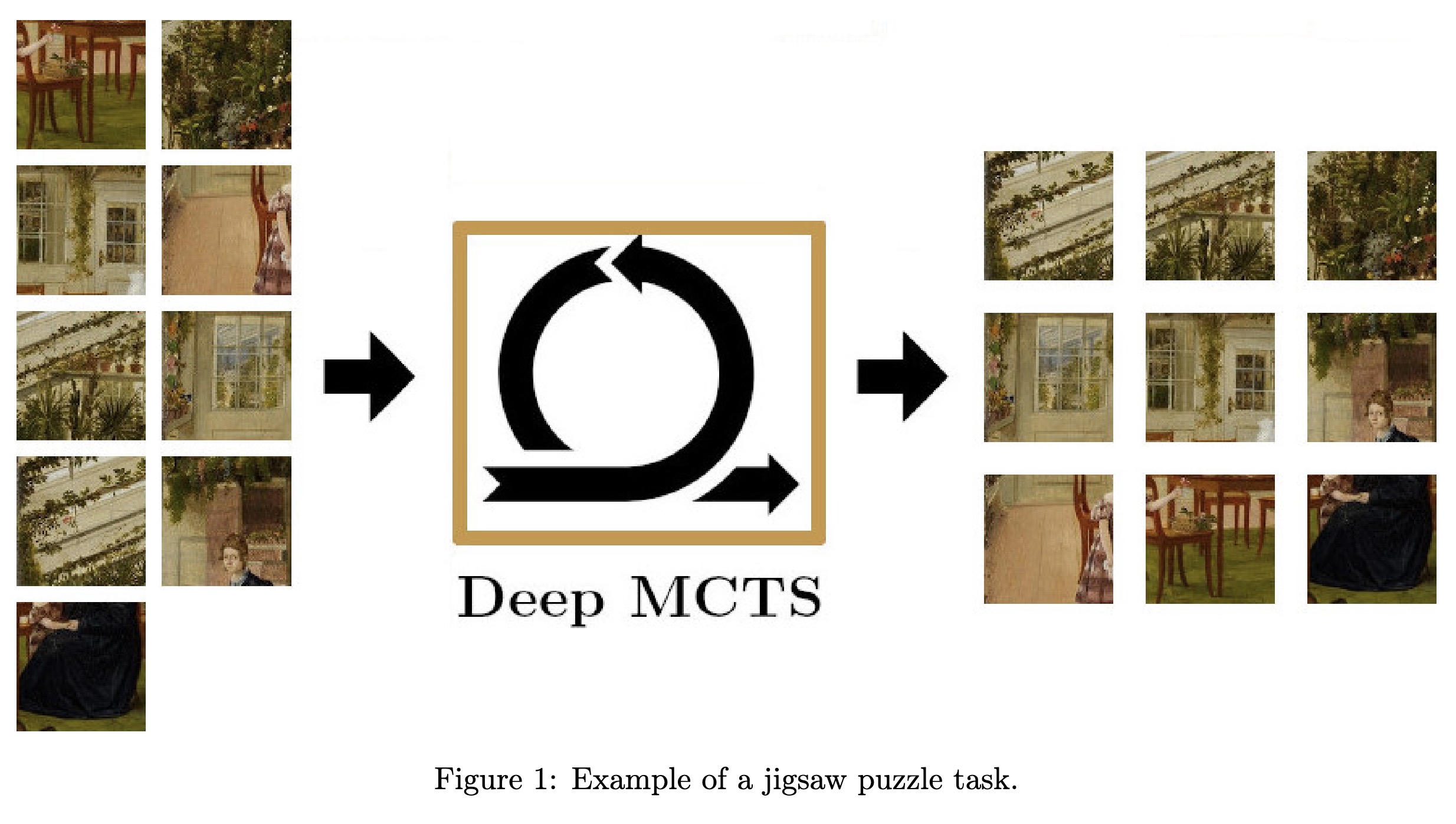

"Alphazzle: Jigsaw Puzzle Solver with Deep Monte-Carlo Tree Search. (arXiv:2302.00384v1 [cs.CV])" — A reassembly algorithm based on single-player Monte Carlo Tree Search (MCTS) which shows the importance of MCTS and the neural networks working together.

Paper: http://arxiv.org/abs/2302.00384

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Example of a jigsaw puzzle task…
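
Single-player MCTS can be illustrated on a toy 1-D "jigsaw": place pieces left to right so that as many touching edges as possible match. A minimal sketch, assuming random rollouts and the standard UCT selection rule; Alphazzle reportedly uses neural networks where this sketch uses random play, and its pieces are 2-D image fragments, not the hypothetical (left edge, right edge) pairs used here. Since there is no opponent, the sketch also keeps the best complete assembly seen, a common practice in single-player MCTS.

```python
import math
import random


def matches(order, pieces):
    """Count neighbouring pairs whose touching edges match (right edge of a == left edge of b)."""
    return sum(pieces[a][1] == pieces[b][0] for a, b in zip(order, order[1:]))


class Node:
    def __init__(self, order, remaining):
        self.order = order          # pieces placed so far (a partial assembly)
        self.remaining = remaining  # pieces still to place
        self.children = {}          # piece index -> child Node
        self.visits = 0
        self.total = 0.0            # sum of normalized rollout rewards

    def best_uct_child(self, c):
        # UCT = mean reward + c * sqrt(ln(N_parent) / N_child)
        return max(
            self.children.values(),
            key=lambda ch: ch.total / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def mcts_solve(pieces, iterations=2000, c=1.4, seed=0):
    """Single-player MCTS over piece orderings; returns (best order seen, its match count)."""
    rng = random.Random(seed)
    max_matches = max(1, len(pieces) - 1)
    root = Node([], list(range(len(pieces))))
    best_order, best_score = None, -1
    for _ in range(iterations):
        node, path = root, [root]
        # 1. Selection: follow UCT while the node is non-terminal and fully expanded.
        while node.remaining and len(node.children) == len(node.remaining):
            node = node.best_uct_child(c)
            path.append(node)
        # 2. Expansion: place one untried piece next.
        if node.remaining:
            piece = rng.choice([p for p in node.remaining if p not in node.children])
            child = Node(node.order + [piece], [p for p in node.remaining if p != piece])
            node.children[piece] = child
            node = child
            path.append(node)
        # 3. Rollout: place the remaining pieces in random order.
        rest = node.remaining[:]
        rng.shuffle(rest)
        order = node.order + rest
        score = matches(order, pieces)
        if score > best_score:
            best_order, best_score = order, score
        # 4. Backpropagation of the normalized reward along the selected path.
        for n in path:
            n.visits += 1
            n.total += score / max_matches
    return best_order, best_score
```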

Fahim Farook

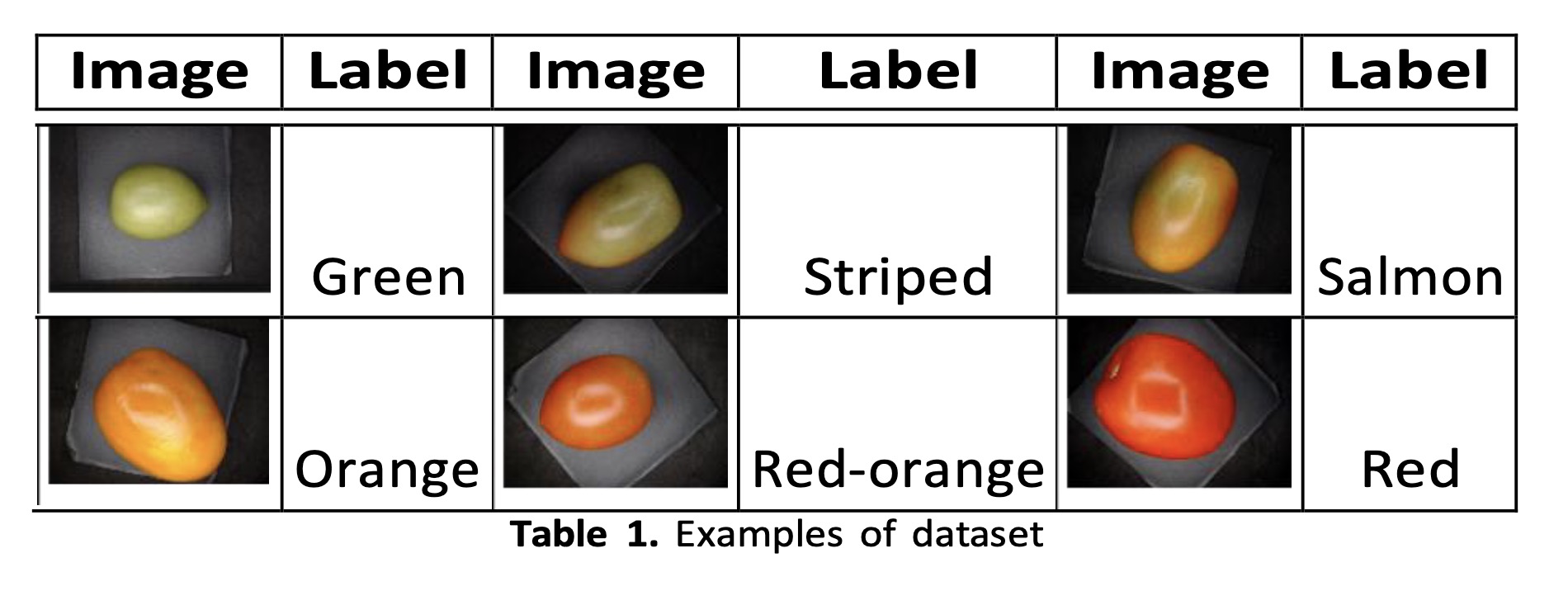

"Detection of Tomato Ripening Stages using Yolov3-tiny. (arXiv:2302.00164v1 [cs.CV])" — A computer vision system to detect tomatoes at different ripening stages by using a neural network-based model for tomato classification and detection.

Paper: http://arxiv.org/abs/2302.00164

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Sample dataset images
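
YOLO-family detectors such as YOLOv3-tiny emit many overlapping candidate boxes per object, which are normally pruned with IoU-based non-maximum suppression (NMS) before the ripeness label is read off. A minimal sketch of that generic post-processing step, not taken from the paper, assuming boxes as (x1, y1, x2, y2, confidence, label) tuples, a hypothetical 0.5 IoU threshold and illustrative class names.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2, ...)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, iou_threshold=0.5):
    """Class-aware NMS: keep the most confident box and drop same-label boxes overlapping it."""
    kept = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(k[5] != box[5] or iou(k, box) < iou_threshold for k in kept):
            kept.append(box)
    return kept
```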

Fahim Farook

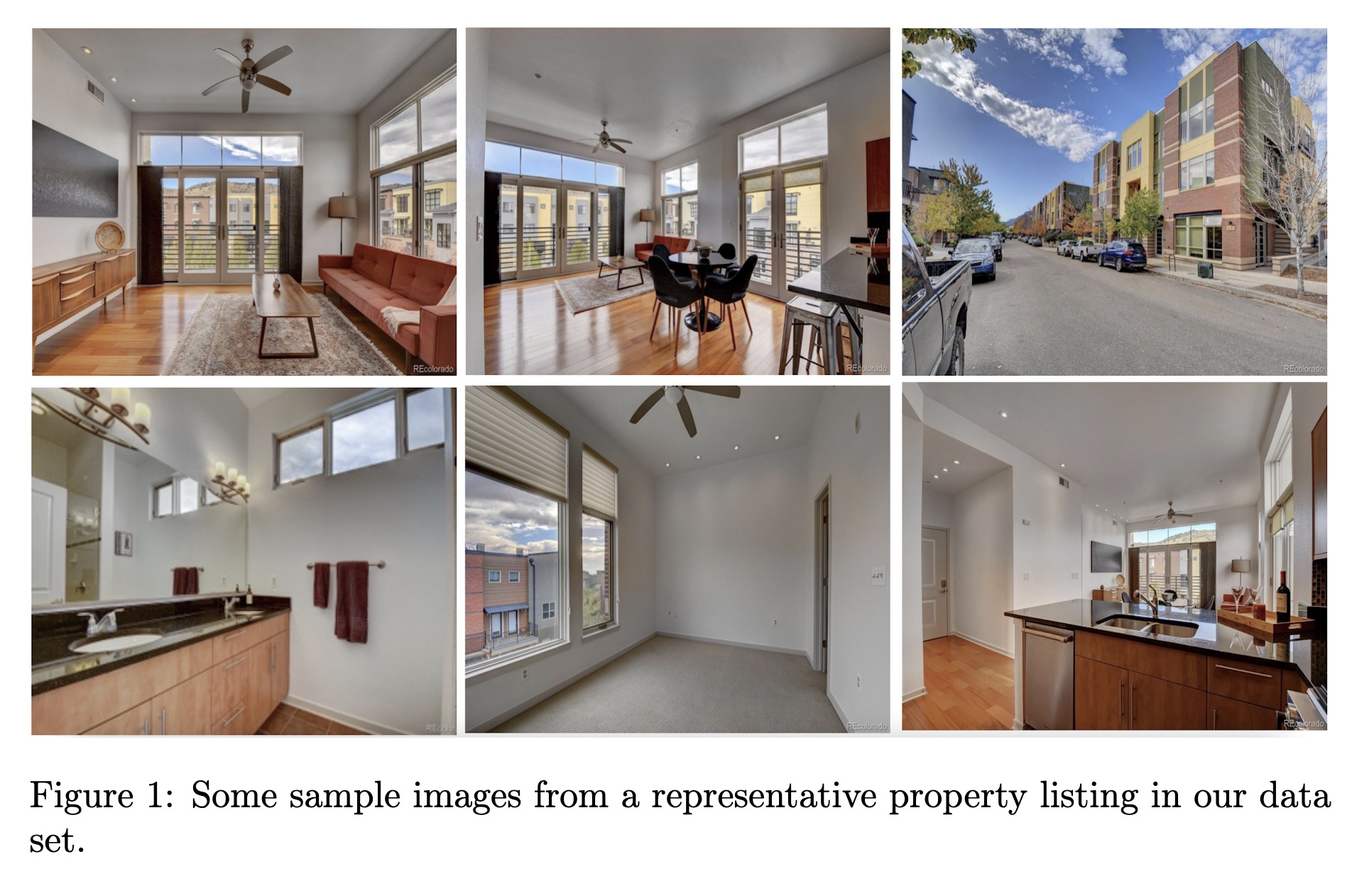

"Real Estate Property Valuation using Self-Supervised Vision Transformers. (arXiv:2302.00117v1 [cs.CV])" — A new method for property valuation that utilizes self-supervised vision transformers and hedonic pricing models trained on real estate data to estimate the value of a given property.

Paper: http://arxiv.org/abs/2302.00117

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Some sample images from a repre…

Fahim Farook

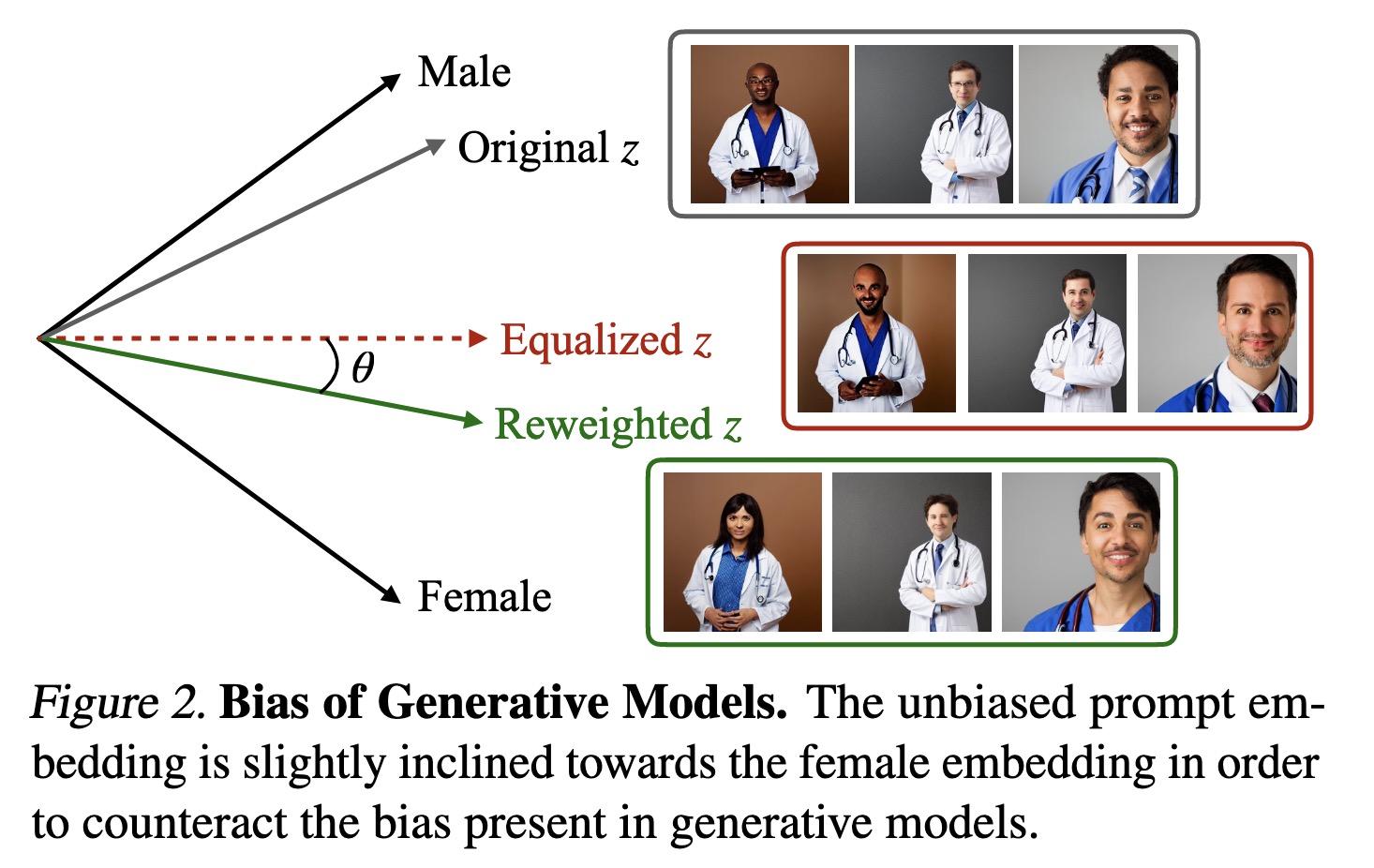

"Debiasing Vision-Language Models via Biased Prompts. (arXiv:2302.00070v1 [cs.LG])" — A general approach for debiasing vision-language foundation models by projecting out biased directions in the text embedding by debiasing only the text embedding with a calibrated projection matrix to yield robust classifiers and fair generative models.

Paper: http://arxiv.org/abs/2302.00070

Code: https://github.com/chingyaoc/debias_vl

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Bias of Generative Models. The …
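
The basic operation is an orthogonal projection that removes the span of the bias directions from a text embedding, P = I − A(AᵀA)⁻¹Aᵀ, where the columns of A are bias directions. A minimal sketch for a single hypothetical bias direction a (for instance, the difference between the embeddings of two prompts that differ only in a protected attribute), where P reduces to I − aaᵀ/‖a‖². The paper additionally calibrates this matrix; the sketch shows only the uncalibrated projection.

```python
from __future__ import annotations


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def project_out(embedding: list[float], bias_direction: list[float]) -> list[float]:
    """Apply P = I - a a^T / ||a||^2: remove the component of `embedding` along `bias_direction`."""
    coeff = dot(embedding, bias_direction) / dot(bias_direction, bias_direction)
    return [e - coeff * a for e, a in zip(embedding, bias_direction)]
```

Only the text side is touched, so the image encoder and any downstream classifier or generator can stay frozen.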

Fahim Farook

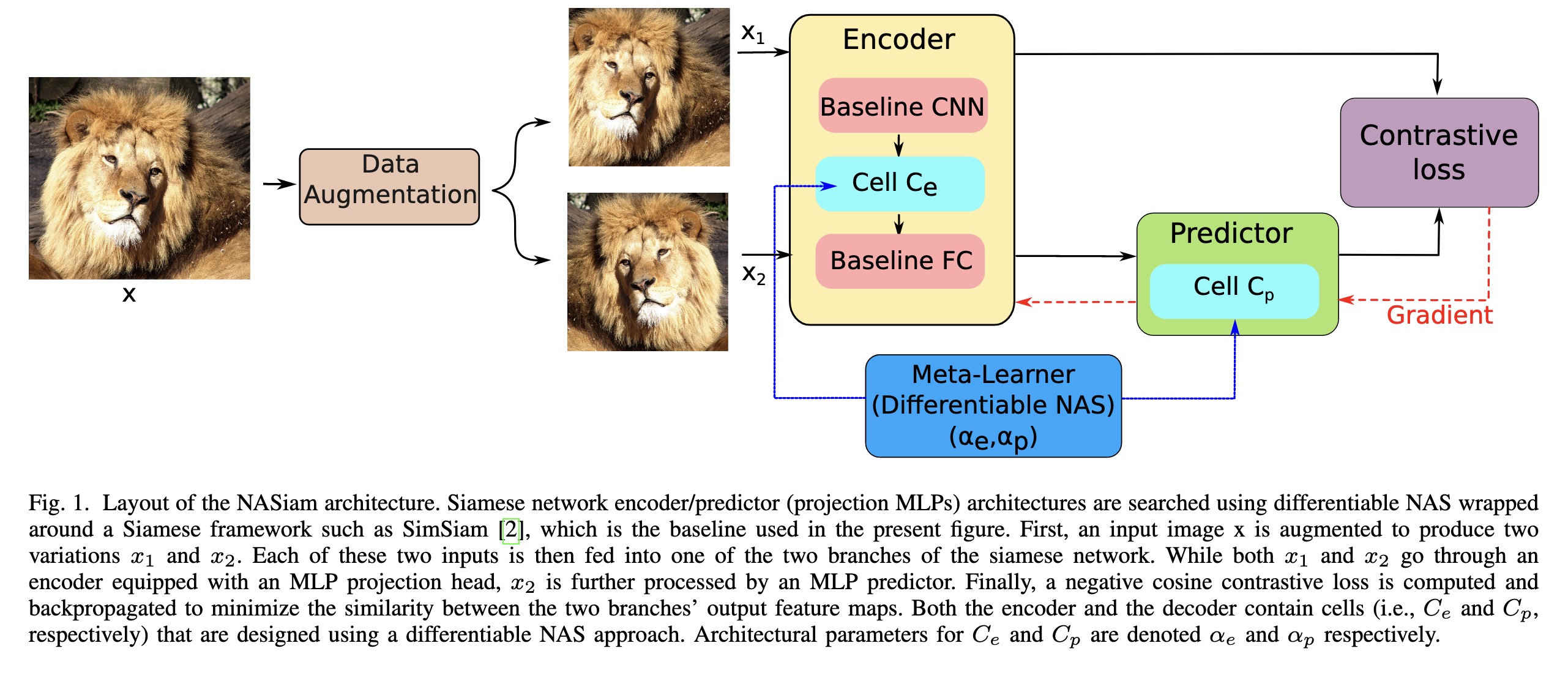

"NASiam: Efficient Representation Learning using Neural Architecture Search for Siamese Networks. (arXiv:2302.00059v1 [cs.CV])" — A novel approach that uses differentiable NAS to improve the multilayer perceptron projector and predictor (encoder/predictor pair) architectures inside siamese-networks-based contrastive learning frameworks (e.g., SimCLR, SimSiam, and MoCo) while preserving the simplicity of previous baselines.

Paper: http://arxiv.org/abs/2302.00059

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Layout of the NASiam architectu…
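
Differentiable NAS in the DARTS style relaxes the discrete choice between candidate operations into a softmax-weighted mixture whose architecture parameters α are learned by gradient descent; after the search, the operation with the largest weight is kept. A minimal sketch of one such mixed operation on a scalar input, assuming three hypothetical candidate operations; the actual NASiam search space for the projector and predictor is not reproduced here.

```python
from __future__ import annotations

import math

# Hypothetical candidate operations for one edge of the searched MLP.
CANDIDATES = {
    "identity": lambda x: x,
    "relu": lambda x: max(0.0, x),
    "zero": lambda x: 0.0,
}


def softmax(values: list[float]) -> list[float]:
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    z = sum(exps)
    return [e / z for e in exps]


def mixed_op(x: float, alphas: dict[str, float]) -> float:
    """Continuous relaxation: sum over ops o of softmax(alpha)_o * o(x)."""
    weights = softmax([alphas[name] for name in CANDIDATES])
    return sum(w * op(x) for w, op in zip(weights, CANDIDATES.values()))


def derive(alphas: dict[str, float]) -> str:
    """Discretize after the search: keep the operation with the largest architecture weight."""
    return max(alphas, key=alphas.get)
```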

Fahim Farook

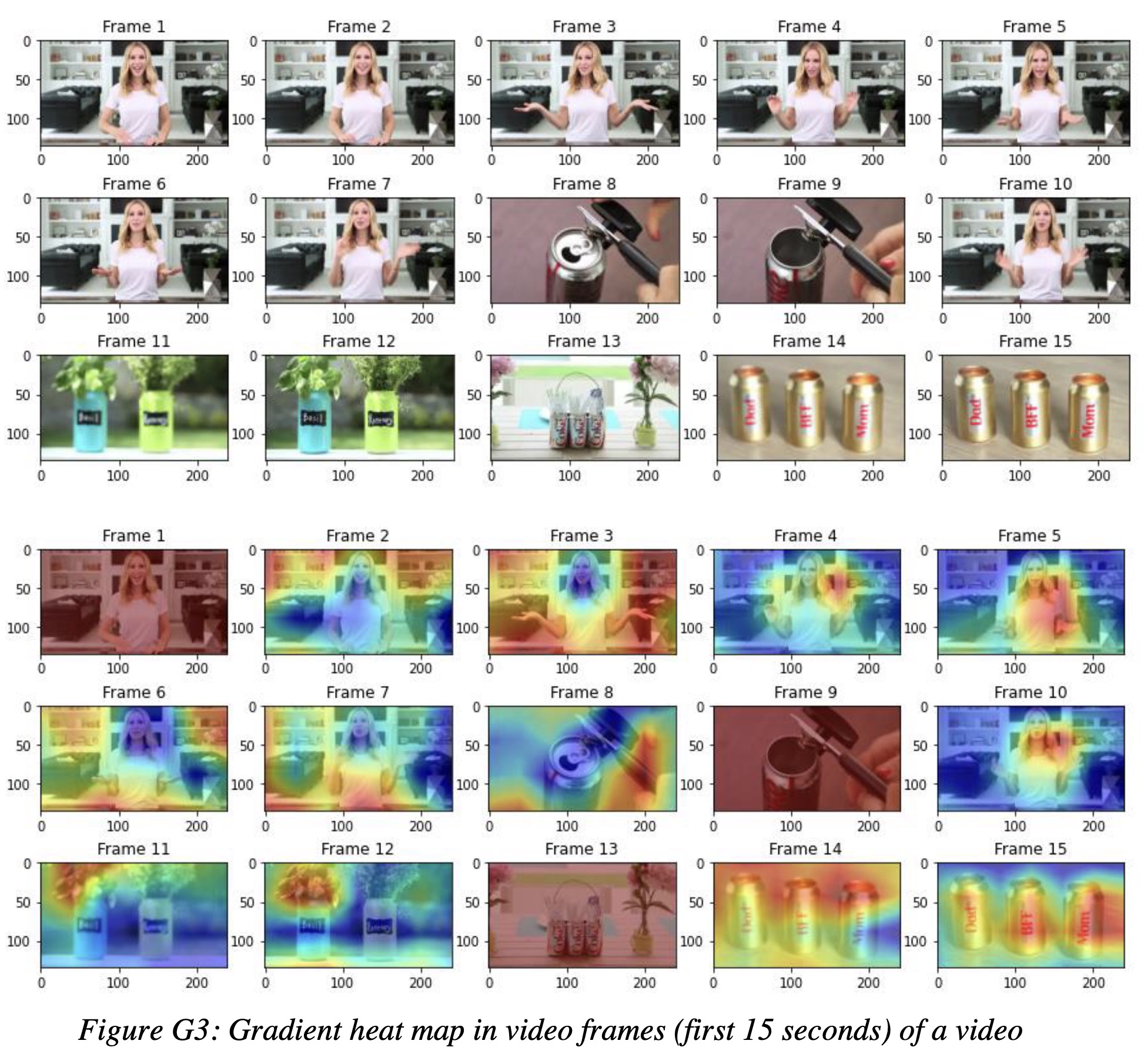

"Video Influencers: Unboxing the Mystique. (arXiv:2012.12311v2 [cs.LG] UPDATED)" — A study and analysis of YouTube influencers and their unstructured video data across text, audio and images using a novel "interpretable deep learning" framework to determine the effectiveness of their constituent elements in explaining video engagement.

Paper: http://arxiv.org/abs/2012.12311

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Gradient heat map in video fram…

Fahim Farook

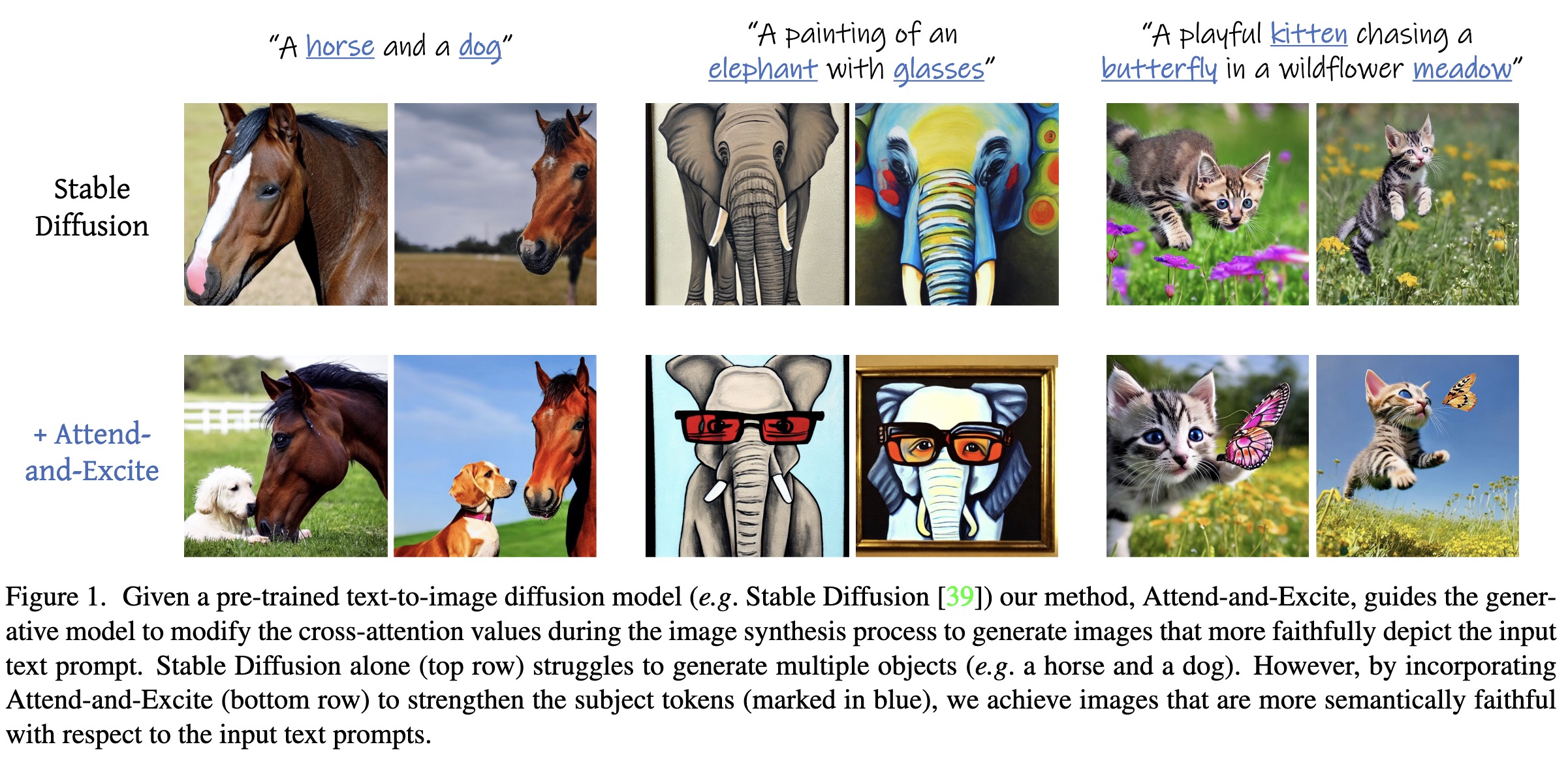

"Attend-and-Excite: Attention-Based Semantic Guidance for Text-to-Image Diffusion Models. (arXiv:2301.13826v1 [cs.CV])" — A process which intervenes in the generative process of diffusion models on the fly during inference time to improve the faithfulness of the generated images to guide the model to refine the cross-attention units to attend to all subject tokens in the text prompt and strengthen - or excite - their activations, encouraging the model to generate all subjects described in the text prompt.

Paper: http://arxiv.org/abs/2301.13826

Code: https://github.com/AttendAndExcite/Attend-and-Excite

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Given a pre-trained text-to-ima…
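
Roughly, the objective looks at each subject token's cross-attention map, takes its peak activation, and penalizes the most neglected subject: L = maxₛ (1 − max over patches of Aₛ). The latent is then nudged by a gradient step on L during early denoising steps. A minimal sketch of the loss only, assuming attention maps given as plain 2-D lists with hypothetical values; the smoothing of the maps, the normalization over tokens and the latent update are omitted.

```python
from __future__ import annotations


def attend_and_excite_loss(attention_maps: dict[str, list[list[float]]]) -> float:
    """Loss = max over subject tokens of (1 - peak attention in that token's map).

    A low peak means no image patch strongly attends to that subject, i.e. it is being neglected.
    """
    per_token = {
        token: 1.0 - max(max(row) for row in amap)
        for token, amap in attention_maps.items()
    }
    return max(per_token.values())
```

Taking the maximum rather than the mean focuses each update on whichever subject is currently weakest, which matches the stated goal of generating every subject in the prompt.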

Fahim Farook

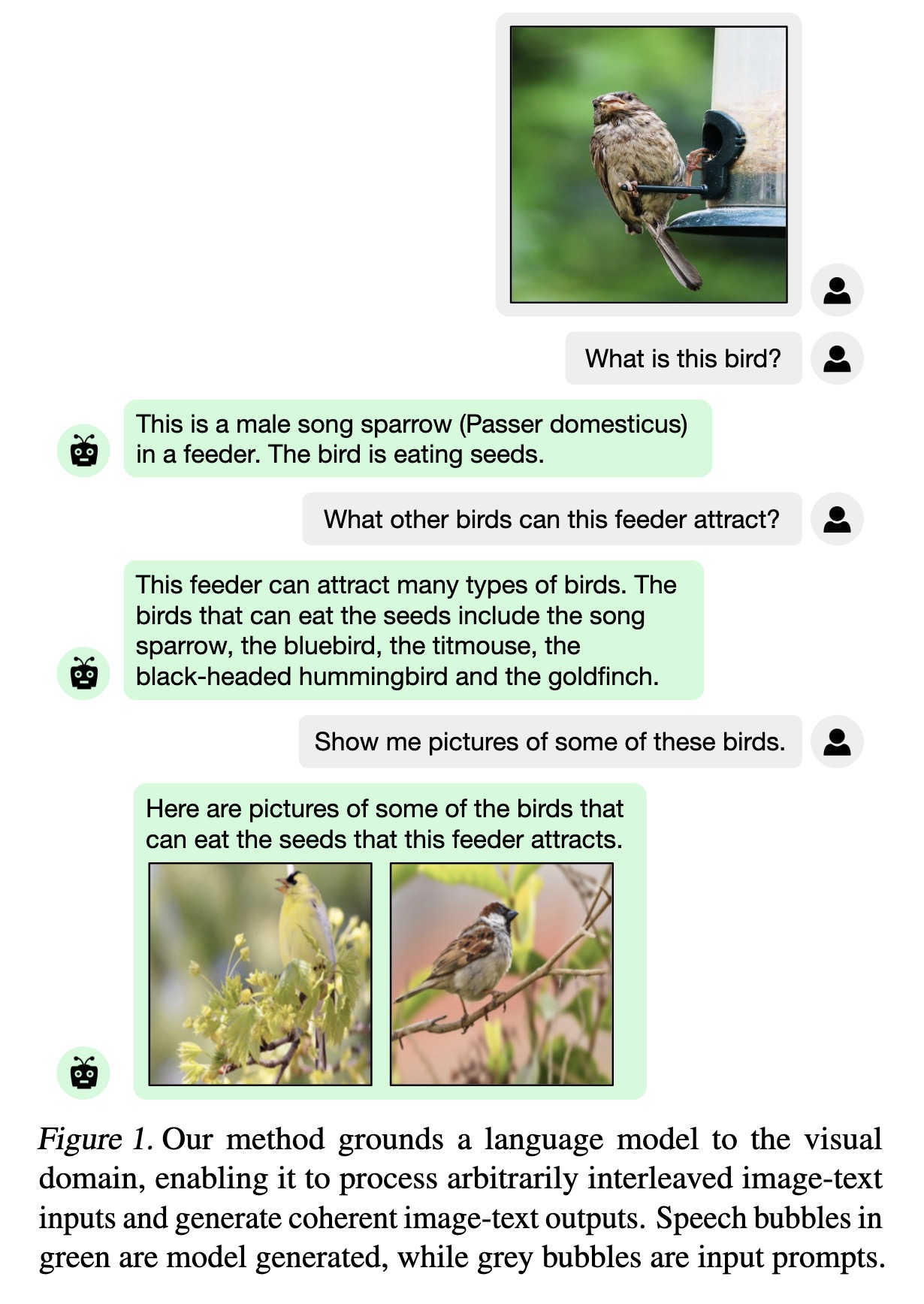

"Grounding Language Models to Images for Multimodal Generation. (arXiv:2301.13823v1 [cs.CL])" — An efficient method to ground pretrained text-only language models to the visual domain, enabling them to process and generate arbitrarily interleaved image-and-text data. This approach apparently works with any off-the-shelf language model.

Paper: http://arxiv.org/abs/2301.13823

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Our method grounds a language m…
