Fahim Farook

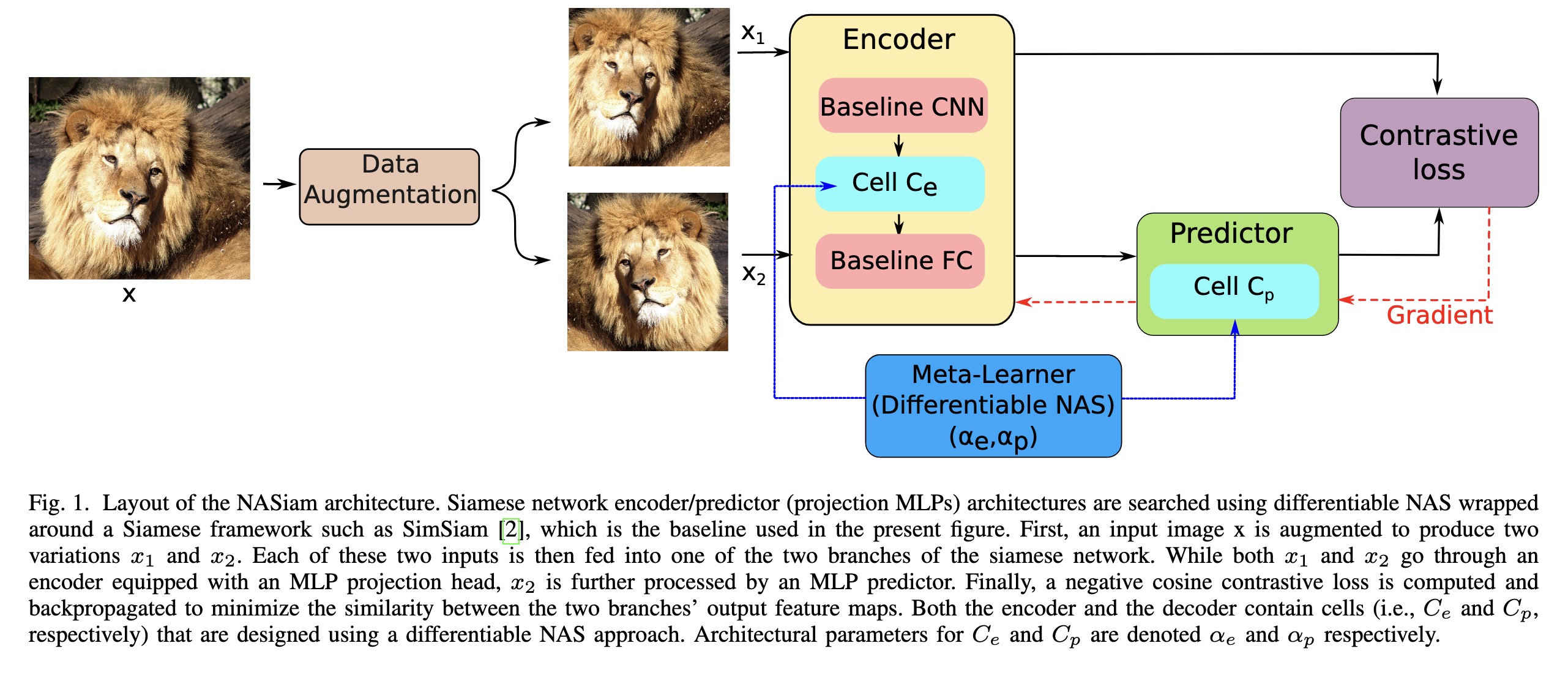

"NASiam: Efficient Representation Learning using Neural Architecture Search for Siamese Networks. (arXiv:2302.00059v1 [cs.CV])": A novel approach that uses differentiable neural architecture search (NAS) to improve the multilayer perceptron (MLP) projector and predictor (encoder/predictor pair) architectures inside Siamese-network-based contrastive learning frameworks (e.g., SimCLR, SimSiam, and MoCo), while preserving the simplicity of previous baselines.
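For readers new to differentiable NAS, here is a minimal sketch of the general DARTS-style continuous relaxation that this family of methods builds on. It is not NASiam's code. The candidate operations (identity, ReLU, tanh) and the names `mixed_op` and `derive_op` are hypothetical. Each searchable edge of an MLP head outputs a softmax(alpha)-weighted sum of every candidate op, so the architecture weights alpha can be learned by gradient descent. After the search, only the strongest op is kept.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]

# Hypothetical candidate operations for one searchable edge of an MLP head.
# Illustrative only: these are NOT NASiam's actual search space.
def identity(x: Vector) -> Vector:
    return list(x)

def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]

def tanh(x: Vector) -> Vector:
    return [math.tanh(v) for v in x]

CANDIDATE_OPS: List[Callable[[Vector], Vector]] = [identity, relu, tanh]

def softmax(alphas: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(alphas)
    exps = [math.exp(a - m) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]

def mixed_op(x: Vector, alphas: Sequence[float]) -> Vector:
    """Continuous relaxation: softmax(alphas)-weighted sum of all candidate ops."""
    weights = softmax(alphas)
    out = [0.0] * len(x)
    for w, op in zip(weights, CANDIDATE_OPS):
        y = op(x)
        out = [o + w * v for o, v in zip(out, y)]
    return out

def derive_op(alphas: Sequence[float]) -> str:
    """Discretization after search: keep the op with the largest architecture weight."""
    best = max(range(len(alphas)), key=lambda i: alphas[i])
    return CANDIDATE_OPS[best].__name__
```

With equal architecture weights, `mixed_op([-1.0, 2.0], [0.0, 0.0, 0.0])` is simply the average of the three candidate outputs. `derive_op([0.1, 2.0, -1.0])` selects `"relu"`. In a real search, alpha and the network weights are optimized jointly (or alternately) with autograd. The same relaxation would be applied to each searchable layer of the projector and predictor.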

Paper: http://arxiv.org/abs/2302.00059

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Layout of the NASiam architectu…