881 Posts

1621 Following

138 Followers

I'm currently working on my second novel, which is complete but in the editing stage. I wrote my first novel over 20 years ago but then didn't write much until now.

I post about #Coding, #Flutter, #Writing, #Movies and #TV. I'll also talk about #Technology, #Gadgets, #MachineLearning, #DeepLearning and a few other things as the fancy strikes ...

Lived in: 🇱🇰🇸🇦🇺🇸🇳🇿🇸🇬🇲🇾🇦🇪🇫🇷🇪🇸🇵🇹🇶🇦🇨🇦

Fahim Farook

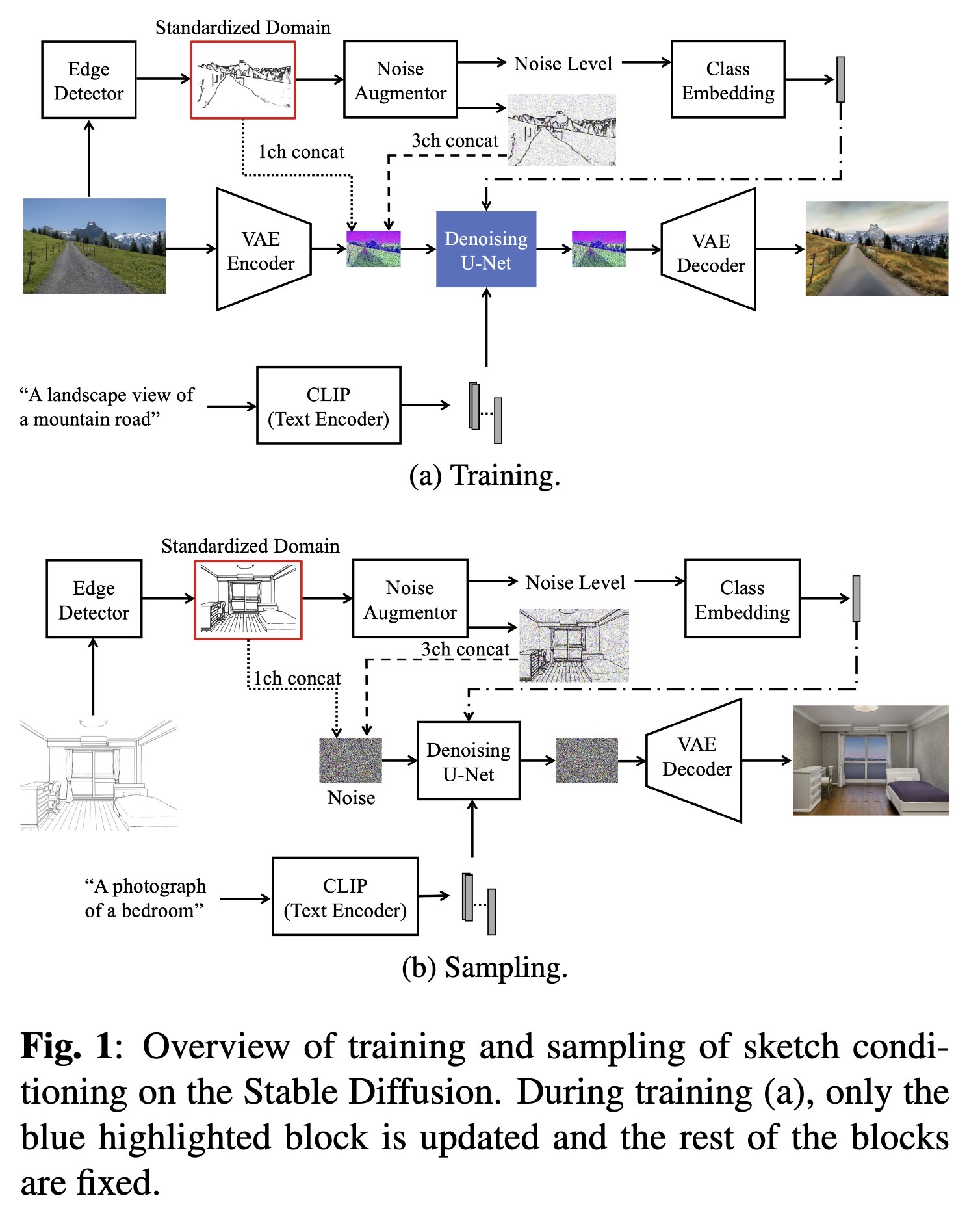

"Text-Guided Scene Sketch-to-Photo Synthesis. (arXiv:2302.06883v1 [cs.CV])" — Creating a whole scene color image based on a sketch using generative models such as Stable Diffusion.

Paper: http://arxiv.org/abs/2302.06883

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Overview of training and sampli…

Fahim Farook

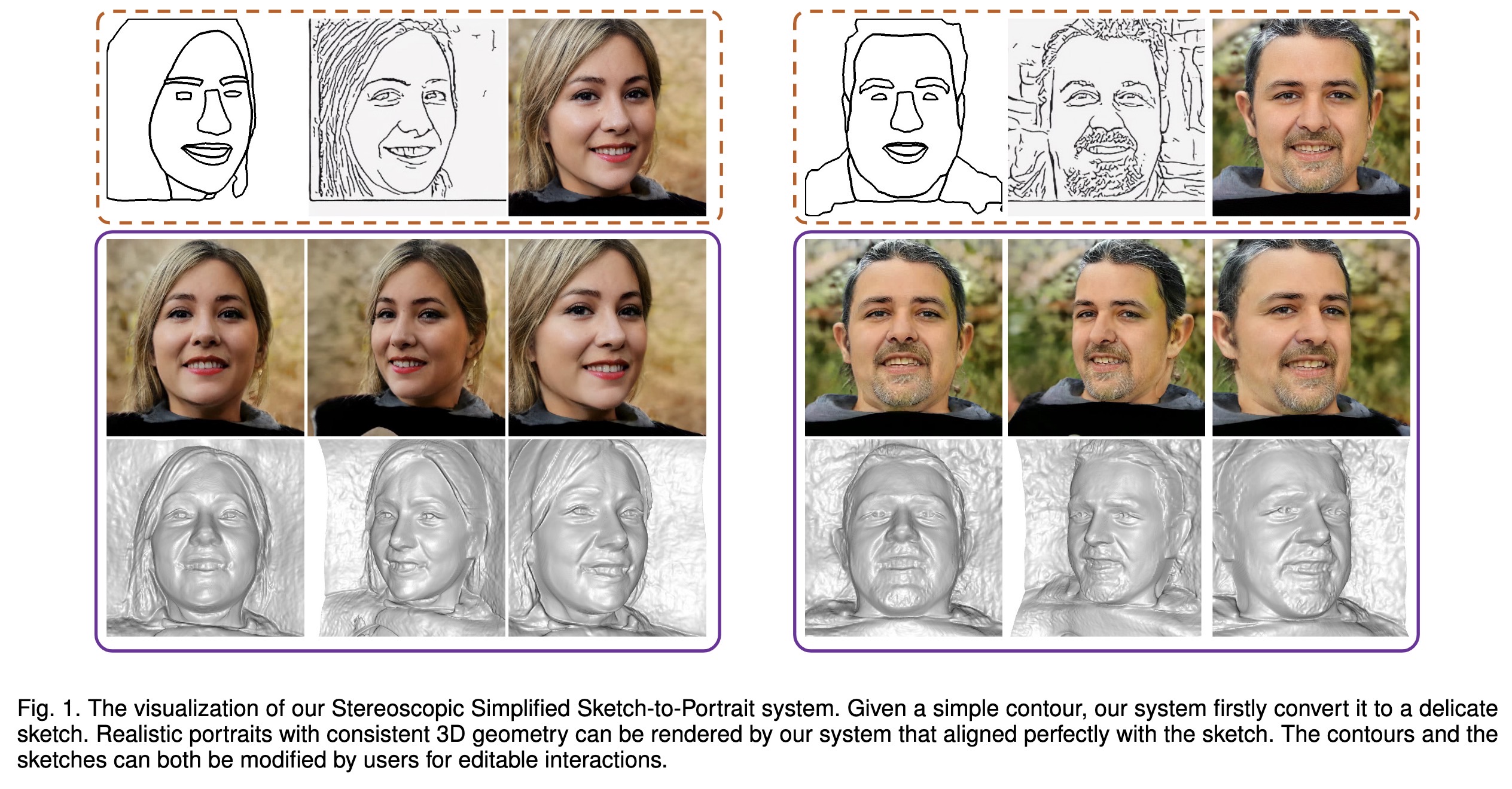

"Make Your Brief Stroke Real and Stereoscopic: 3D-Aware Simplified Sketch to Portrait Generation. (arXiv:2302.06857v1 [cs.CV])" — Creating photorealistic images of people based on sketches using 3D generative models.

Paper: http://arxiv.org/abs/2302.06857

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

The visualization of our Stereo…

Fahim Farook

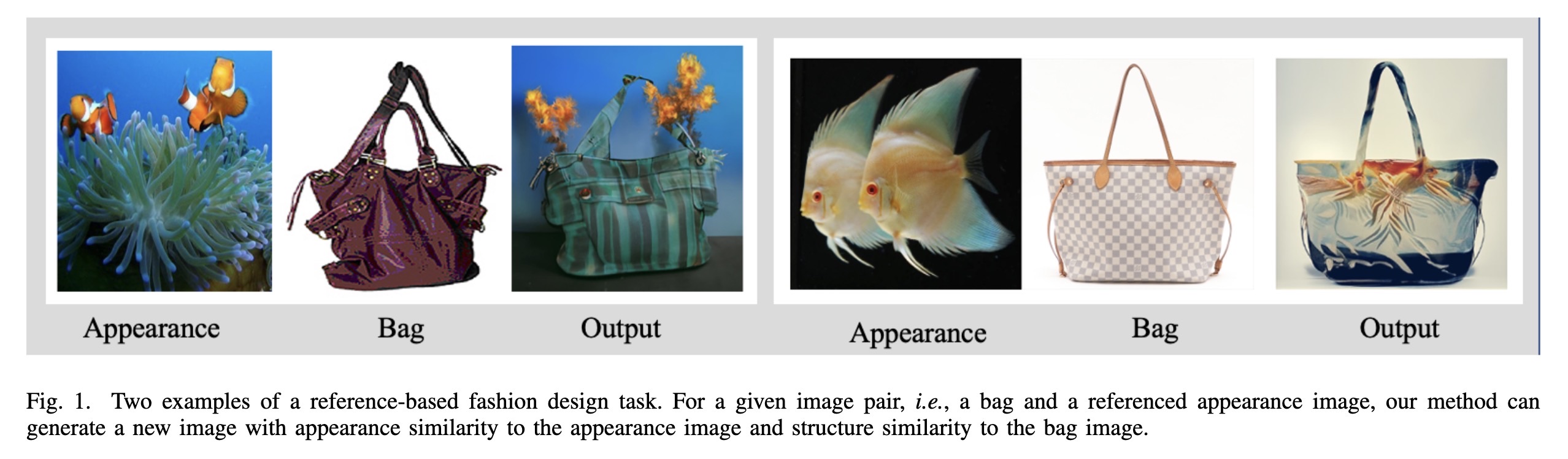

"DiffFashion: Reference-based Fashion Design with Structure-aware Transfer by Diffusion Models. (arXiv:2302.06826v1 [cs.CV])": Transferring the appearance of a reference image onto images of clothing items without altering the structure of the clothing.

Paper: http://arxiv.org/abs/2302.06826

Code: https://github.com/Rem105-210/DiffFashion

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Two examples of a reference-bas…

Fahim Farook

Edited 2 years ago

@ajyoung Sorry, no 😞 I haven’t looked at Divam’s stuff in a couple of months, and I don’t recall much from when I did look at it except in general terms … I thought it just calculated the timesteps from a 1000-step range and didn’t actually use a scheduler? But I might be wrong.

Update: What I meant above was no special scheduler algorithm ... just basic built-in scheduling that divides up the 1000-step range by the number of steps ....
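The “basic built-in scheduling” described above can be sketched roughly like this. It is a minimal illustration, assuming evenly spaced timesteps obtained by integer division of a 1000-step training range; it is not taken from DiffusionBee’s source, and the function name is hypothetical.

```python
from typing import List


def evenly_spaced_timesteps(num_inference_steps: int,
                            num_train_timesteps: int = 1000) -> List[int]:
    """Hypothetical helper: pick timesteps by dividing up the training range.

    The spacing is num_train_timesteps // num_inference_steps, and the
    timesteps are returned from noisiest to cleanest, the order sampling uses.
    """
    if not 0 < num_inference_steps <= num_train_timesteps:
        raise ValueError("num_inference_steps must be in 1..num_train_timesteps")
    step_ratio = num_train_timesteps // num_inference_steps
    # Multiples of the step ratio, largest (noisiest) timestep first.
    return [i * step_ratio for i in reversed(range(num_inference_steps))]


print(evenly_spaced_timesteps(20))
```

For 20 steps this yields 950, 900, …, 50, 0. A dedicated scheduler such as DPM-Solver++ also defines the update rule applied between those timesteps, which is the part this simple spacing leaves out.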

Fahim Farook

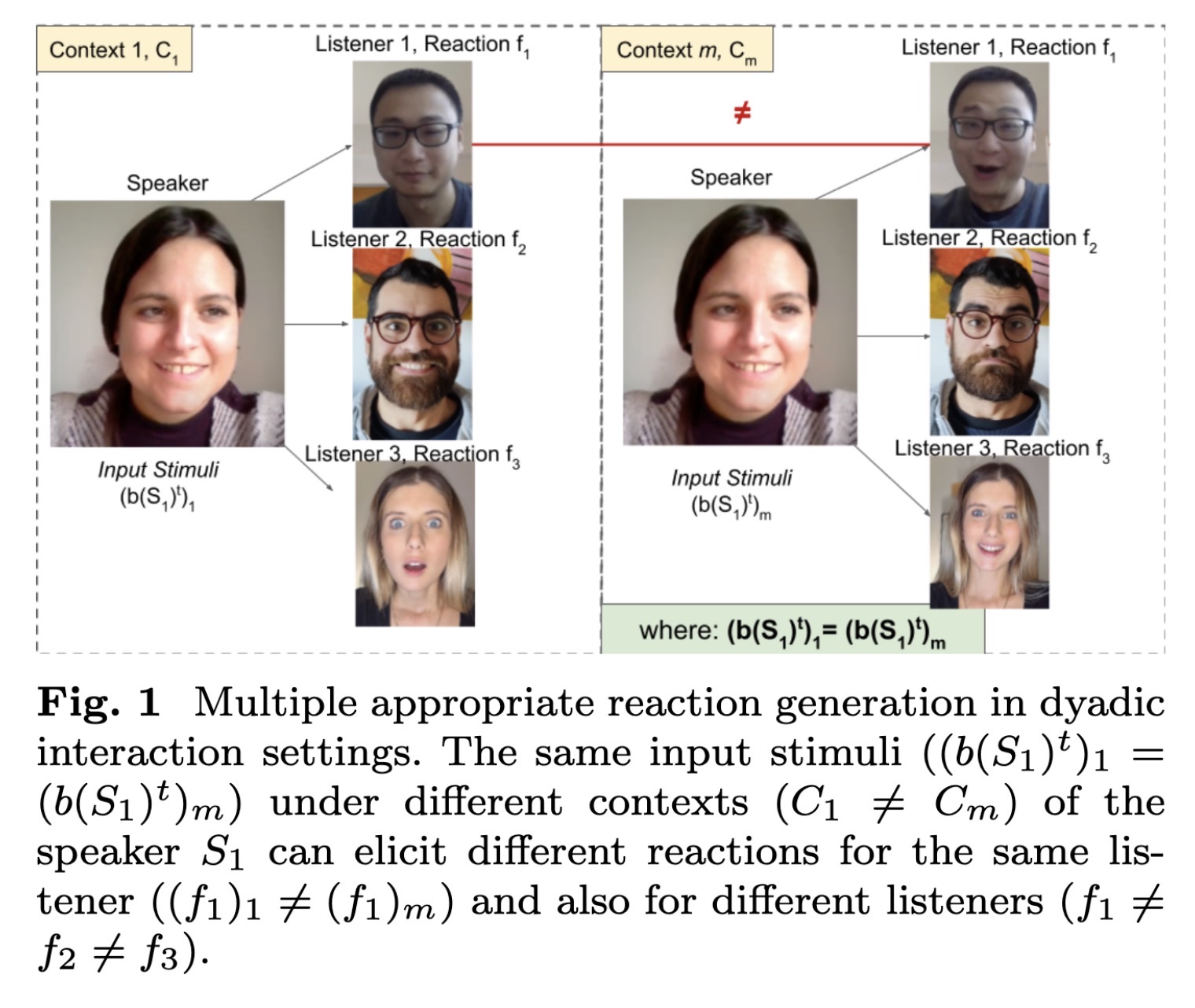

"Multiple Appropriate Facial Reaction Generation in Dyadic Interaction Settings: What, Why and How?. (arXiv:2302.06514v2 [cs.CV] UPDATED)": An attempt to generate appropriate behavioral responses to received stimuli and to evaluate the appropriateness of the generated responses.

Paper: http://arxiv.org/abs/2302.06514

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Multiple appropriate reaction g…

Fahim Farook

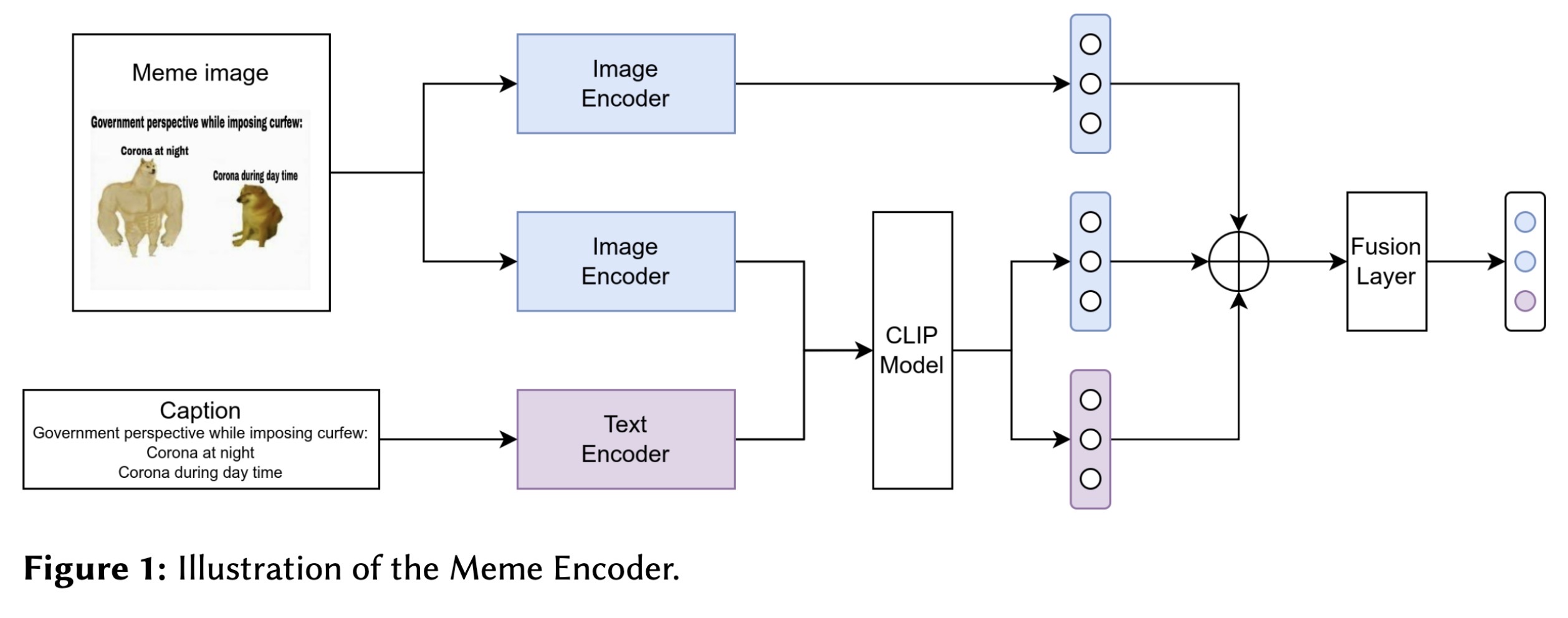

"NYCU-TWO at Memotion 3: Good Foundation, Good Teacher, then you have Good Meme Analysis. (arXiv:2302.06078v2 [cs.CL] UPDATED)" — Classifying the emotions and intensity expressed in memes using machine learning.

Paper: http://arxiv.org/abs/2302.06078

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Overview of the Meme Encoder

Fahim Farook

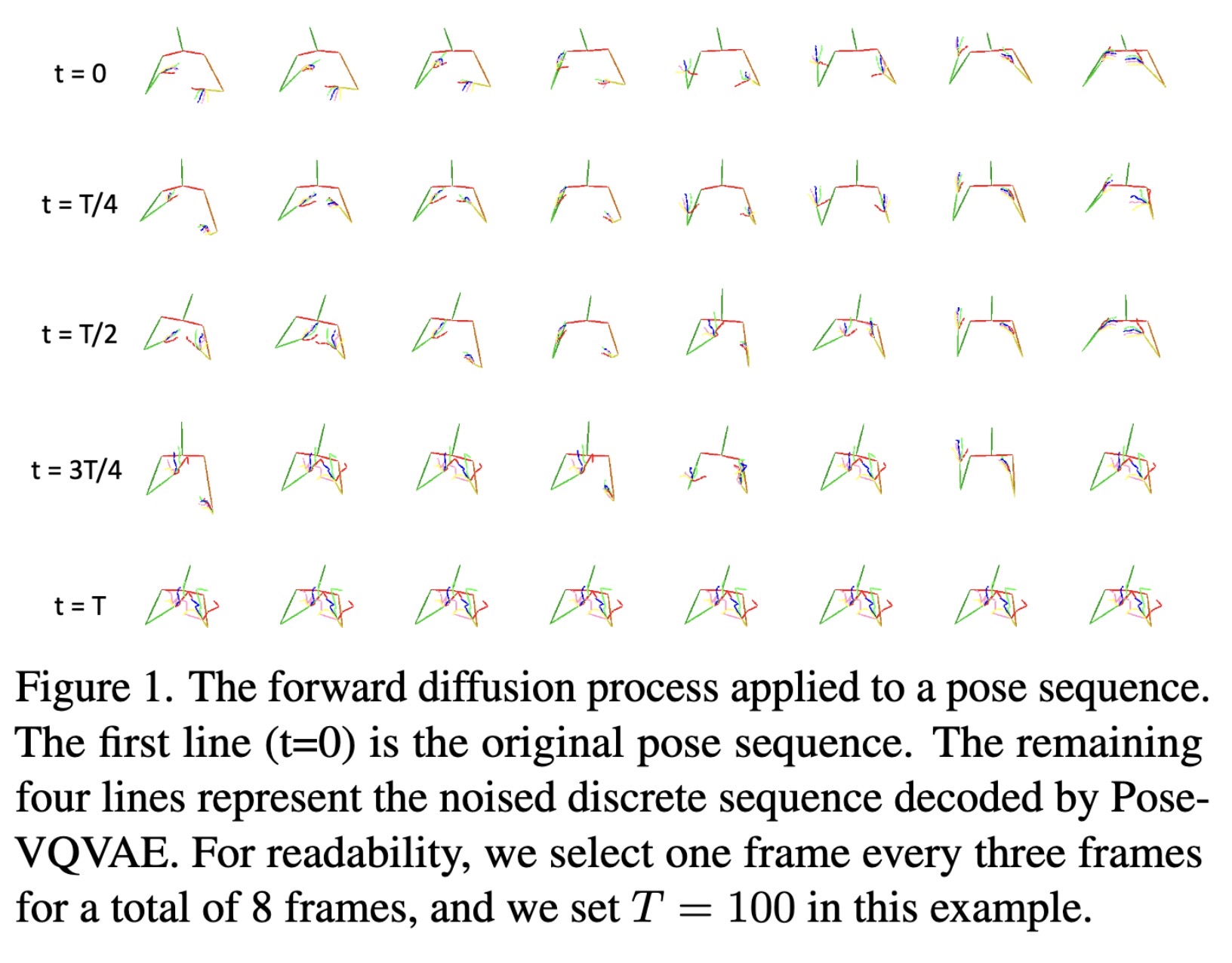

"Vector Quantized Diffusion Model with CodeUnet for Text-to-Sign Pose Sequences Generation. (arXiv:2208.09141v2 [cs.CV] UPDATED)" — Using diffusion models to generate sign language pose sequences based on spoken language.

Paper: http://arxiv.org/abs/2208.09141

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

The forward diffusion process a…

Fahim Farook

@ajyoung Ah, I didn’t know that … I mean about the PyTorch mapping. The last time I looked, the weights were just a list of data provided along with the TensorFlow variant, I believe … I knew people were asking for a script to do model conversion, but there didn’t seem to be much response, and that’s all I knew.

I am not sure you’ll get that much of a speed bump with CoreML as opposed to TensorFlow. If you do go ahead, I’d be interested to hear what kind of performance you get and whether it’s better than TensorFlow.

It is pretty fast on an M1 MBP: I can generate an image at 20 steps with the DPM-Solver++ scheduler in about 7 seconds. But there are only two schedulers and nobody seems to be in a hurry to add more 😛 I took a look yesterday, but it’ll take me a while to get my head around it, and I have too many things going on at the moment to attempt it.

But on the other hand, DPM-Solver++ does work well, so maybe nobody really wants anything else?

Fahim Farook

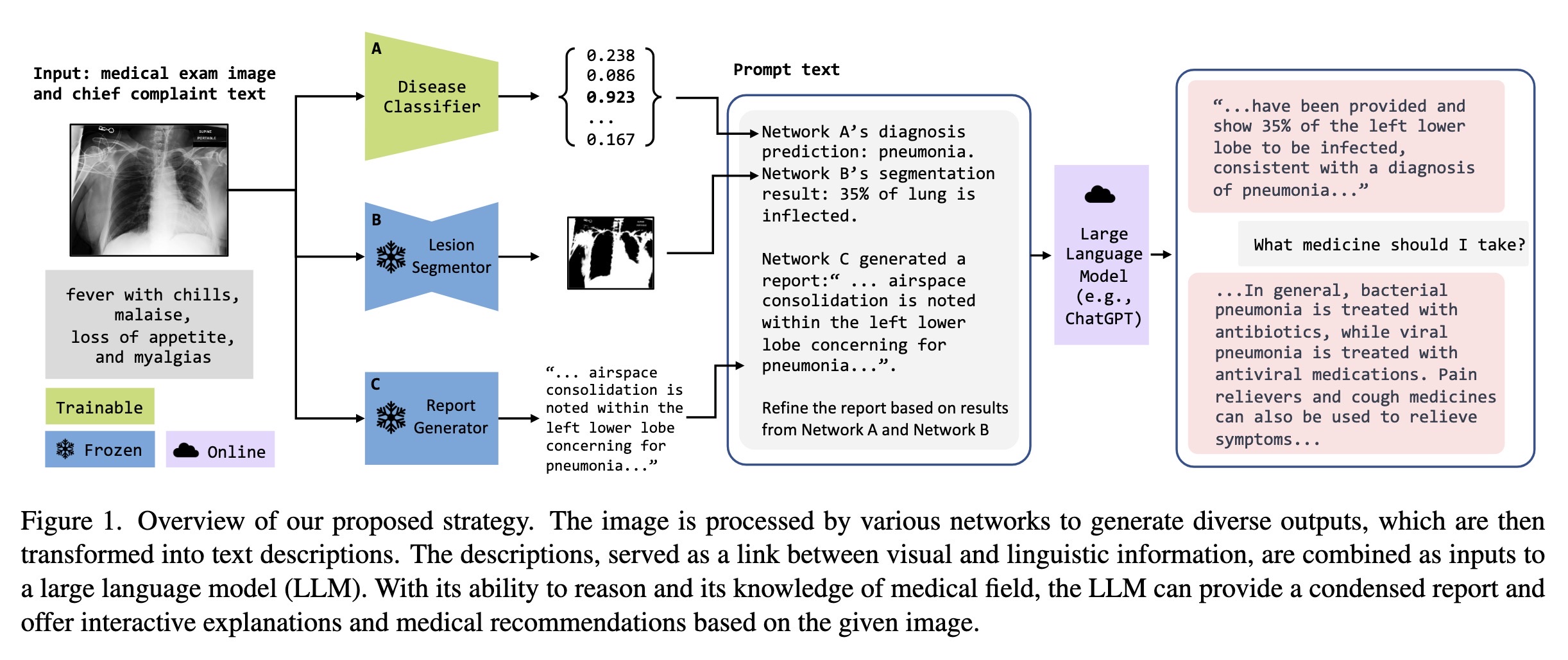

"ChatCAD: Interactive Computer-Aided Diagnosis on Medical Image using Large Language Models. (arXiv:2302.07257v1 [cs.CV])" — Using Large Language Models (LLM) with Computer-Aided Diagnosis (CAD) networks to enhance the output of CAD networks by summarizing and presenting the information in a more understandable format.

Paper: http://arxiv.org/abs/2302.07257

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Overview of our proposed strate…

Fahim Farook

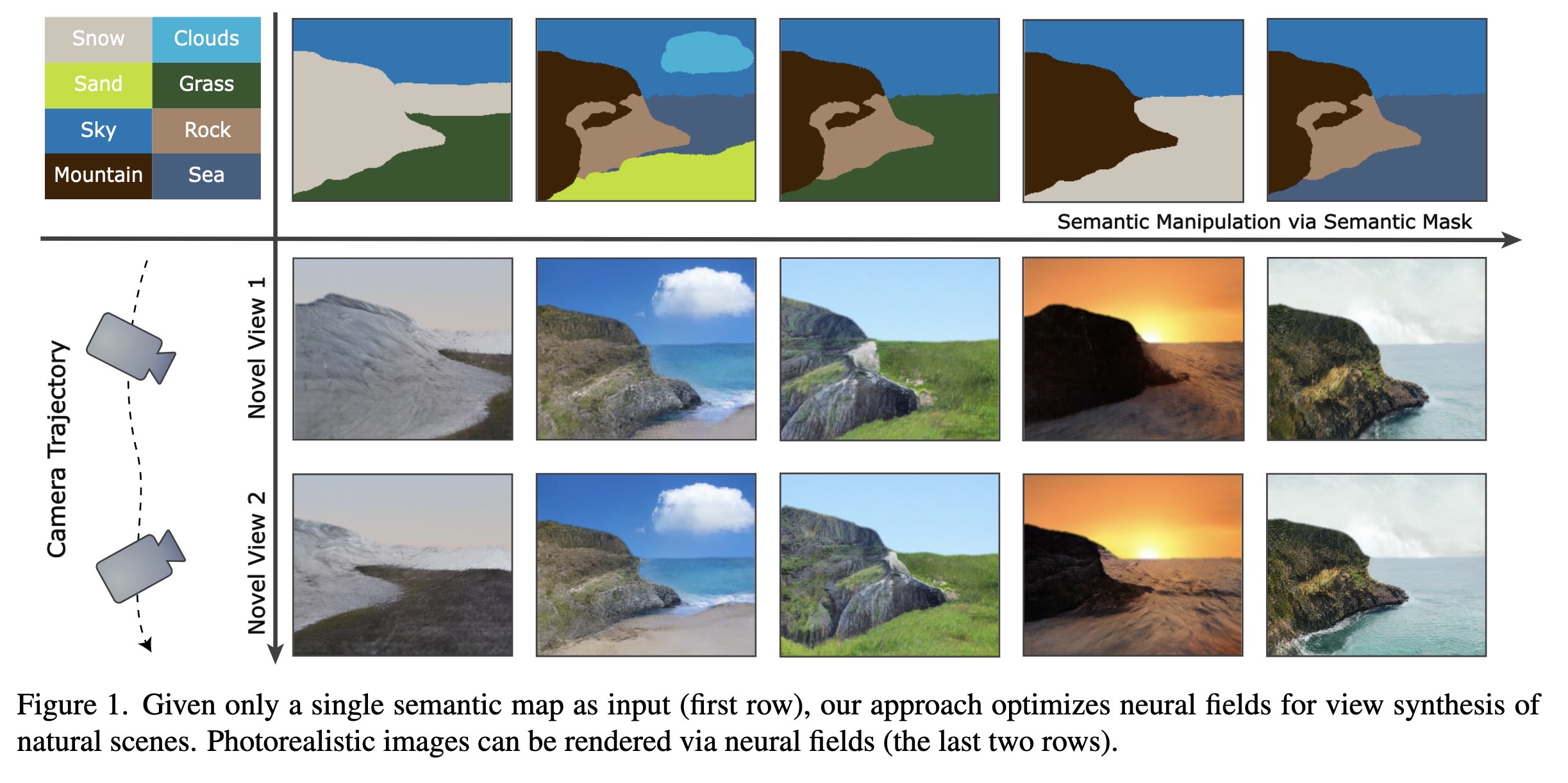

"Painting 3D Nature in 2D: View Synthesis of Natural Scenes from a Single Semantic Mask. (arXiv:2302.07224v1 [cs.CV])" — Using a semantic mask as input to generate photorealistic color images of natural scenes.

Paper: http://arxiv.org/abs/2302.07224

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Given only a single semantic ma…

Fahim Farook

"Netizens, Academicians, and Information Professionals' Opinions About AI With Special Reference To ChatGPT. (arXiv:2302.07136v1 [cs.CY])": It's interesting to see what people think about ChatGPT (and AI in general), given the current rush to embrace ChatGPT as the saviour of many businesses/corporations ... 😛 The paper is a little light on specifics, though: mostly general statistics/impressions.

Paper: http://arxiv.org/abs/2302.07136

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Fahim Farook

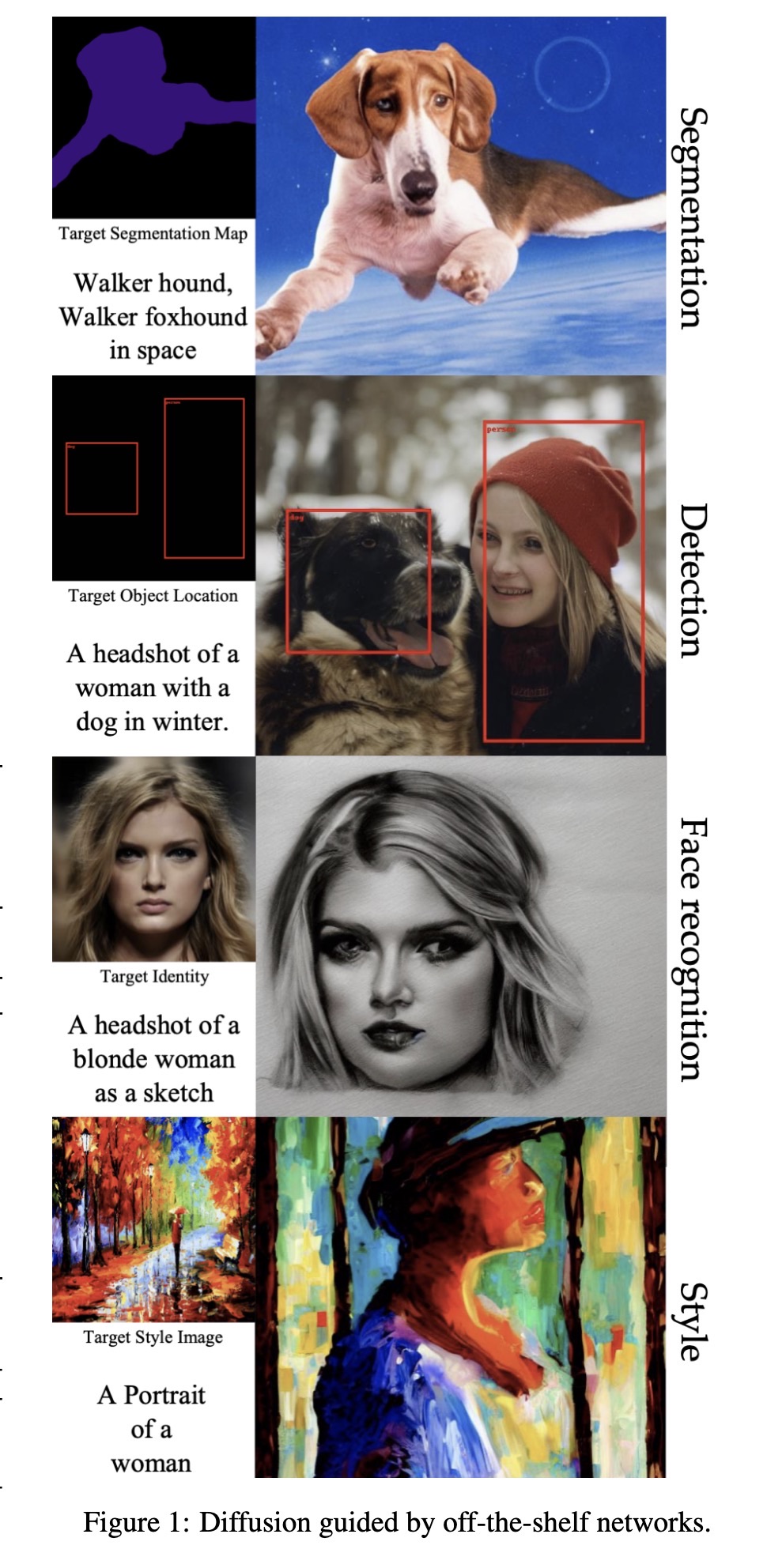

"Universal Guidance for Diffusion Models. (arXiv:2302.07121v1 [cs.CV])" — a universal guidance algorithm that enables diffusion models to be controlled by arbitrary guidance methods such as segmentation, face recognition, object detection, and classifier signals, without the need to retrain for that specific method.

Paper: http://arxiv.org/abs/2302.07121

Code: https://github.com/arpitbansal297/Universal-Guided-Diffusion

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Examples of diffusion being gui…

Fahim Farook

A total of 82 papers in the cs.CV category on arXiv.org today — 47 new, 35 updated.

Have CoreML models I want to play with, but I guess I’ll have to read papers instead 😛

#AI #CV #NewPapers #DeepLearning #MachineLearning

Fahim Farook

@ajyoung I assume that’s DiffusionBee (or a variant), which Divam Gupta made? I used it for a bit and it was indeed one of the faster/better ways to use StableDiffusion on a Mac, but the lack of models was what kept me searching for alternatives 🙂

CoreML does work with Intel Macs, but I believe you’ll have to create the models with the “--attention-implementation” argument set to “ORIGINAL”. The default for that argument is “SPLIT_EINSUM”, which targets the Apple Neural Engine (ANE), and I don’t believe the ANE is available on anything other than Apple Silicon devices …
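A small sketch of that choice, assuming `platform.machine()` reports `arm64` on Apple Silicon Macs and `x86_64` on Intel Macs. The helper names and the model/output folder names are hypothetical; `--convert-unet`, `--model-version` and `-o` are arguments of Apple’s `python_coreml_stable_diffusion.torch2coreml` script, and `--attention-implementation` is the argument discussed above.

```python
import platform
from typing import List, Optional


def pick_attention_implementation(machine: Optional[str] = None) -> str:
    """Hypothetical helper: choose the --attention-implementation value.

    SPLIT_EINSUM targets the Apple Neural Engine, which (as far as I know) only
    exists on Apple Silicon, so anything else falls back to ORIGINAL.
    """
    machine = machine if machine is not None else platform.machine()
    return "SPLIT_EINSUM" if machine == "arm64" else "ORIGINAL"


def unet_conversion_command(model_dir: str, out_dir: str,
                            machine: Optional[str] = None) -> List[str]:
    """Build (but do not run) a UNet conversion command; paths are hypothetical."""
    return [
        "python", "-m", "python_coreml_stable_diffusion.torch2coreml",
        "--convert-unet",
        "--model-version", model_dir,
        "--attention-implementation", pick_attention_implementation(machine),
        "-o", out_dir,
    ]


# Example: what an Intel Mac would need.
print(" ".join(unet_conversion_command("./my-model-diffusers", "./coreml-out", "x86_64")))
```

Passing `machine` explicitly makes it easy to see what each kind of Mac would get without running on that hardware.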

Fahim Farook

Edited 2 years ago

Since yesterday evening, when I finally perfected the process for converting existing #StableDiffusion models (in either CKPT or SAFETENSOR format) to CoreML format, I’ve converted six models. Of them, all except for one work fine 🙂

So, in case this helps anybody else, here are the important things to remember:

1. You *must* use Python 3.8. If you use any other Python version, you will end up with errors. (Not that I’ve tested all Python versions, but I did get errors with Python 3.9 and have read reports from others …)

2. You should be on macOS Ventura 13.1 or higher.

3. The conversion needs the models to be in Diffusers format. The easiest way that has worked for me is to download a CKPT file, convert it to Diffusers format, and point the script at the local folder containing the Diffusers model.

4. HuggingFace folks have a bunch of conversion scripts here: https://github.com/huggingface/diffusers/tree/main/scripts

5. The above scripts don’t mention SAFETENSOR format in their file names, but SAFETENSOR files hold the same kind of model weights as CKPT files, just stored in a different (safer, non-pickle) format. The CKPT conversion script has an extra argument named “--from_safetensors”, so you can use the same script to convert SAFETENSOR files by adding that argument.

6. You can use the Apple conversion script to convert one component at a time using the different arguments such as “--convert-unet”, “--convert-text-encoder” etc. You don’t have to run them all together. In fact, when I ran them all together, a component was sometimes left out (generally the text encoder).

7. Once you’ve converted all the components and have them in one folder, you have to run the Apple conversion script once more with the “--bundle-resources-for-swift-cli” argument (pointing at your output folder) to create the final compiled CoreML model files (.mlmodelc) from your .mlpackage files.
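The steps above can be sketched as a small Python driver that only assembles and prints the commands, so they can be reviewed before running. Treat it as a sketch under assumptions: the script path, model file name and output folders are hypothetical, and passing the same `--model-version` to the bundling step (so it finds the matching `.mlpackage` names) is my assumption about how Apple’s script locates its inputs. `--checkpoint_path` and `--dump_path` are arguments of the diffusers `convert_original_stable_diffusion_to_diffusers.py` script.

```python
import sys
from pathlib import Path
from typing import List

# Hypothetical location of the diffusers conversion script (see step 4).
DIFFUSERS_SCRIPT = "diffusers/scripts/convert_original_stable_diffusion_to_diffusers.py"
TORCH2COREML = ["python", "-m", "python_coreml_stable_diffusion.torch2coreml"]
COMPONENTS = ["--convert-unet", "--convert-text-encoder", "--convert-vae-decoder"]


def check_python_version() -> None:
    # Step 1: warn (rather than fail) if this is not Python 3.8.
    if sys.version_info[:2] != (3, 8):
        print(f"warning: running Python {sys.version_info.major}."
              f"{sys.version_info.minor}, expected 3.8", file=sys.stderr)


def build_commands(checkpoint: str, diffusers_dir: str,
                   coreml_dir: str) -> List[List[str]]:
    """Return the conversion commands in order, without running them."""
    commands = []
    # Steps 3 and 5: CKPT or SAFETENSOR -> Diffusers with the same script.
    to_diffusers = ["python", DIFFUSERS_SCRIPT,
                    "--checkpoint_path", checkpoint,
                    "--dump_path", diffusers_dir]
    if Path(checkpoint).suffix == ".safetensors":
        to_diffusers.append("--from_safetensors")
    commands.append(to_diffusers)
    # Step 6: one component per run so none is silently left out.
    for flag in COMPONENTS:
        commands.append(TORCH2COREML + [flag, "--model-version", diffusers_dir,
                                        "-o", coreml_dir])
    # Step 7: compile the .mlpackage files into .mlmodelc resources.
    commands.append(TORCH2COREML + ["--bundle-resources-for-swift-cli",
                                    "--model-version", diffusers_dir,
                                    "-o", coreml_dir])
    return commands


if __name__ == "__main__":
    check_python_version()
    for cmd in build_commands("my-model.safetensors", "my-model-diffusers",
                              "my-model-coreml"):
        print(" ".join(cmd))
```

On an Intel Mac, the component commands would also need `--attention-implementation ORIGINAL`. Printing instead of executing keeps each step separate, which matches running the components one at a time.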

That’s it 🙂 If you do all of the above, it should be fairly straightforward to create new CoreML models from existing StableDiffusion models.

Feel free to hit me up should you run into issues. Since I’ve gone through all this, I’d be happy to help anybody else facing the same issues ….

#CoreML #StableDiffusion #MachineLearning #DeepLearning #ModelConversion

Fahim Farook

Best Laid Plans (not really a warning, other than length and whining)

@AngelaPreston Hope your son feels better soon! It’s never fun to be sick but it’s even worse when you’re a kid … at least, that’s how I remember it.

Fahim Farook

repeated

Bryan Wright

catselbow@fosstodon.org

A tiny jumping spider pauses to take in the view.

#spiders #arachnids #spider #arthropods #nature #photography #macrophotography

Fahim Farook

repeated

Low Quality Facts

lowqualityfacts@mstdn.social

Sounds awesome, please let us see it, Joe.

https://patreon.com/lowqualityfacts

Fahim Farook

repeated

Katharina

Katharina@toot.wales

Painted some pine trees and a Robin.

#watercolour #painting #pinetrees #Robin #landscapepainting #mastoart