I'm currently working on my second novel, which is complete but in the editing stage. I wrote my first novel over 20 years ago but then didn't write much until now.

I post about #Coding, #Flutter, #Writing, #Movies and #TV. I'll also talk about #Technology, #Gadgets, #MachineLearning, #DeepLearning and a few other things as the fancy strikes ...

Lived in: 🇱🇰🇸🇦🇺🇸🇳🇿🇸🇬🇲🇾🇦🇪🇫🇷🇪🇸🇵🇹🇶🇦🇨🇦

Fahim Farook

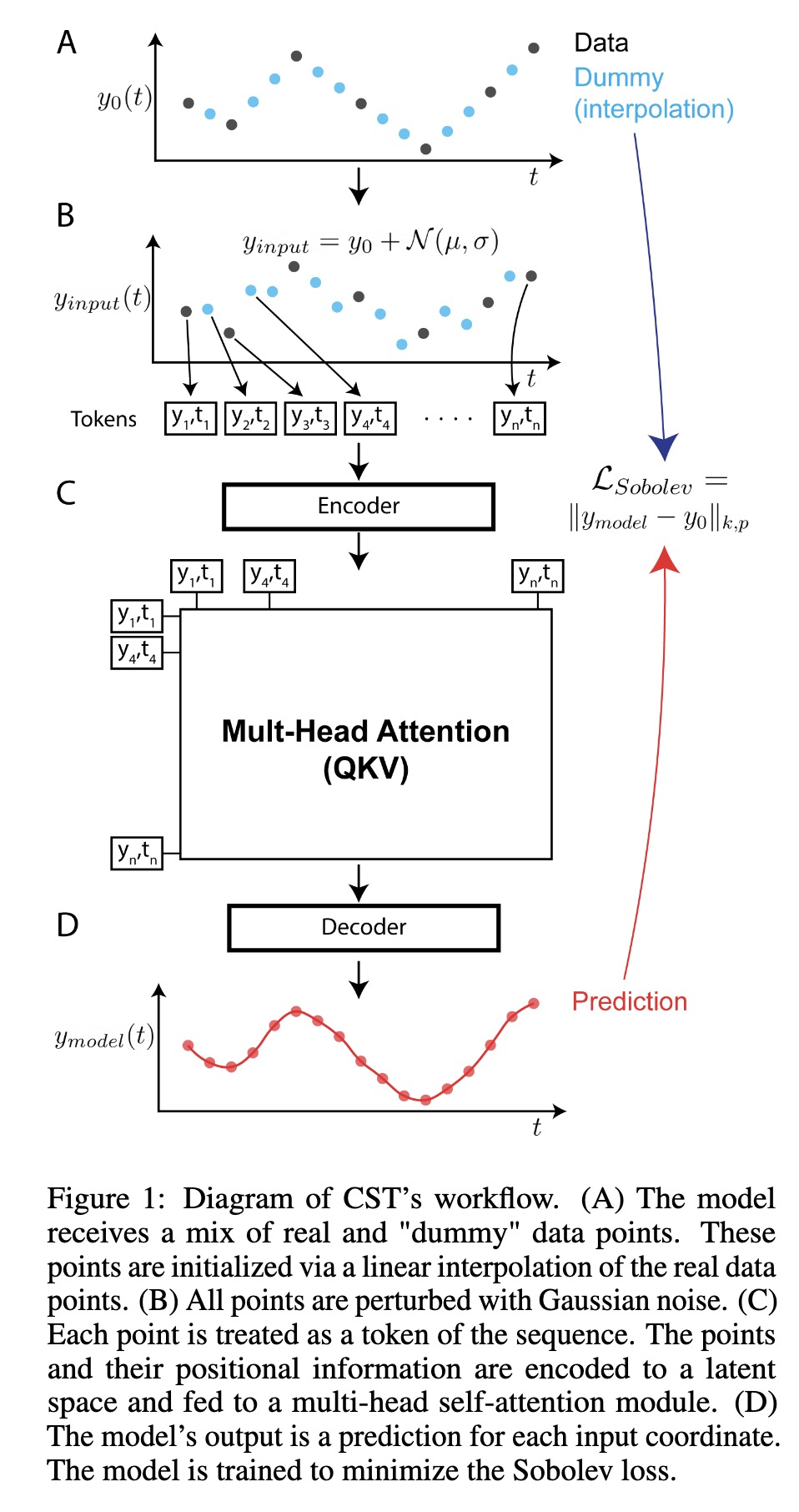

"Continuous Spatiotemporal Transformers. (arXiv:2301.13338v1 [cs.LG])" — A new transformer architecture designed for modeling continuous systems, which guarantees a continuous and smooth output via optimization in Sobolev space.

Paper: http://arxiv.org/abs/2301.13338

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Diagram of CST’s workflow. (A) …

Fahim Farook

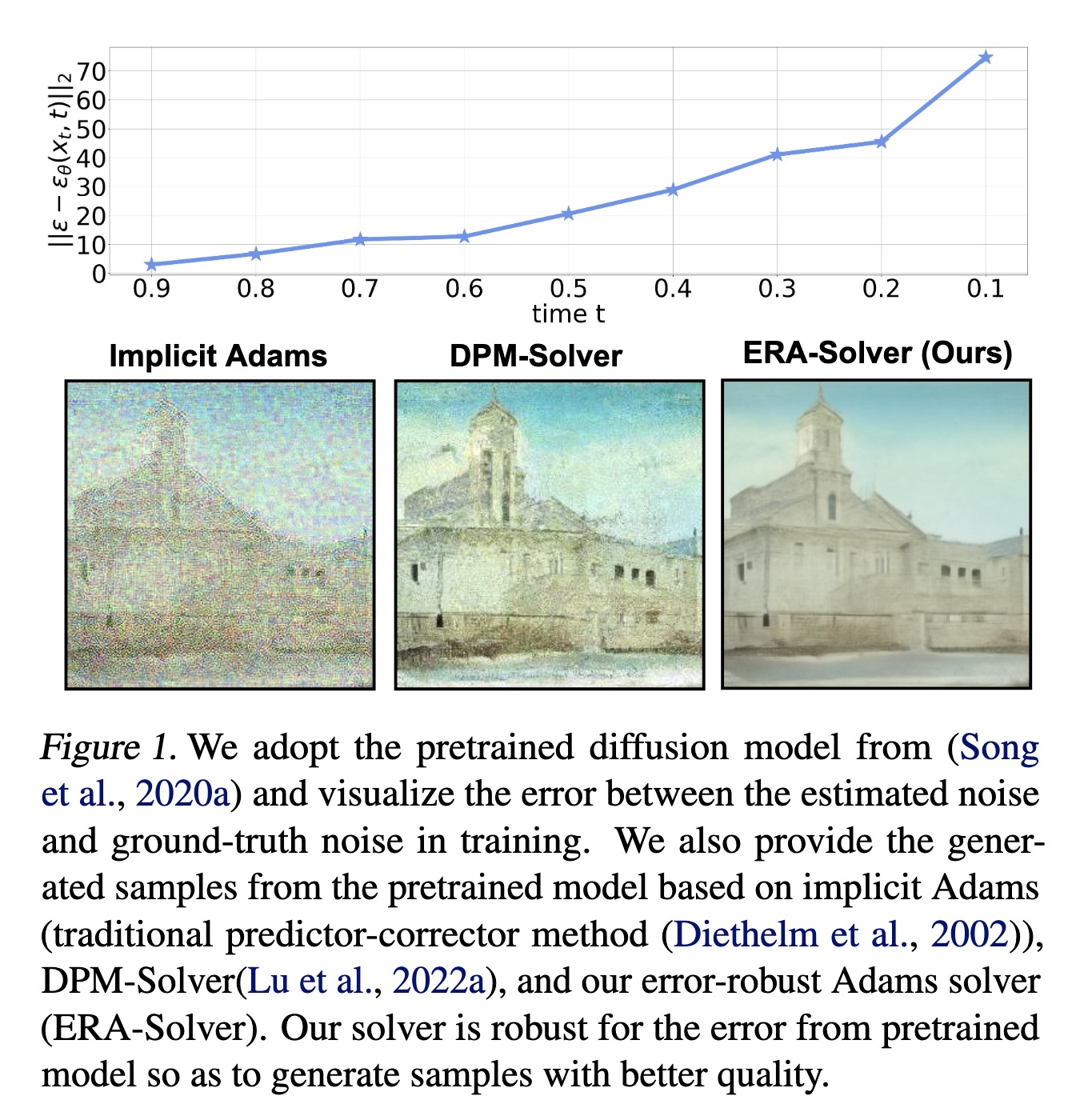

"ERA-Solver: Error-Robust Adams Solver for Fast Sampling of Diffusion Probabilistic Models. (arXiv:2301.12935v2 [cs.LG] UPDATED)" — An error-robust Adams solver (ERA-Solver), which utilizes the implicit Adams numerical method that consists of a predictor and a corrector.

Paper: http://arxiv.org/abs/2301.12935

Note: The PDF for this version of the paper is currently not available

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

We adopt the pretrained diffusi…
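
For readers unfamiliar with the predictor/corrector idea mentioned in the summary, here is a minimal sketch of a generic textbook Adams scheme: a 2-step Adams-Bashforth predictor followed by a trapezoidal Adams-Moulton corrector, applied to an ordinary differential equation. This is not the ERA-Solver from the paper, and the function name `abm2_solve` and the test equation y' = -y are assumptions chosen purely for illustration.

```python
import math


def abm2_solve(f, t0, y0, h, n_steps):
    """Integrate y' = f(t, y) with a generic Adams predictor-corrector.

    Illustrative only: 2-step Adams-Bashforth predictor plus a
    trapezoidal (Adams-Moulton) corrector. Not the paper's solver.
    """
    ts = [t0]
    ys = [y0]

    # Multistep methods need two starting points, so bootstrap the
    # second one with a single Heun (second-order Runge-Kutta) step.
    k1 = f(t0, y0)
    k2 = f(t0 + h, y0 + h * k1)
    ts.append(t0 + h)
    ys.append(y0 + 0.5 * h * (k1 + k2))

    for n in range(1, n_steps):
        f_n = f(ts[n], ys[n])
        f_prev = f(ts[n - 1], ys[n - 1])
        t_next = ts[n] + h

        # Predictor: explicit extrapolation from the two latest slopes.
        y_pred = ys[n] + h * (1.5 * f_n - 0.5 * f_prev)

        # Corrector: implicit trapezoidal rule, evaluated at the prediction.
        y_corr = ys[n] + 0.5 * h * (f_n + f(t_next, y_pred))

        ts.append(t_next)
        ys.append(y_corr)

    return ts, ys


if __name__ == "__main__":
    # Hypothetical test problem: y' = -y, y(0) = 1, exact solution exp(-t).
    ts, ys = abm2_solve(lambda t, y: -y, 0.0, 1.0, 0.1, 10)
    print(ys[-1], math.exp(-ts[-1]))
```

The predictor gives a cheap explicit guess, and the corrector refines it with an implicit formula evaluated at that guess, which is the general pattern the summary refers to.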

Fahim Farook

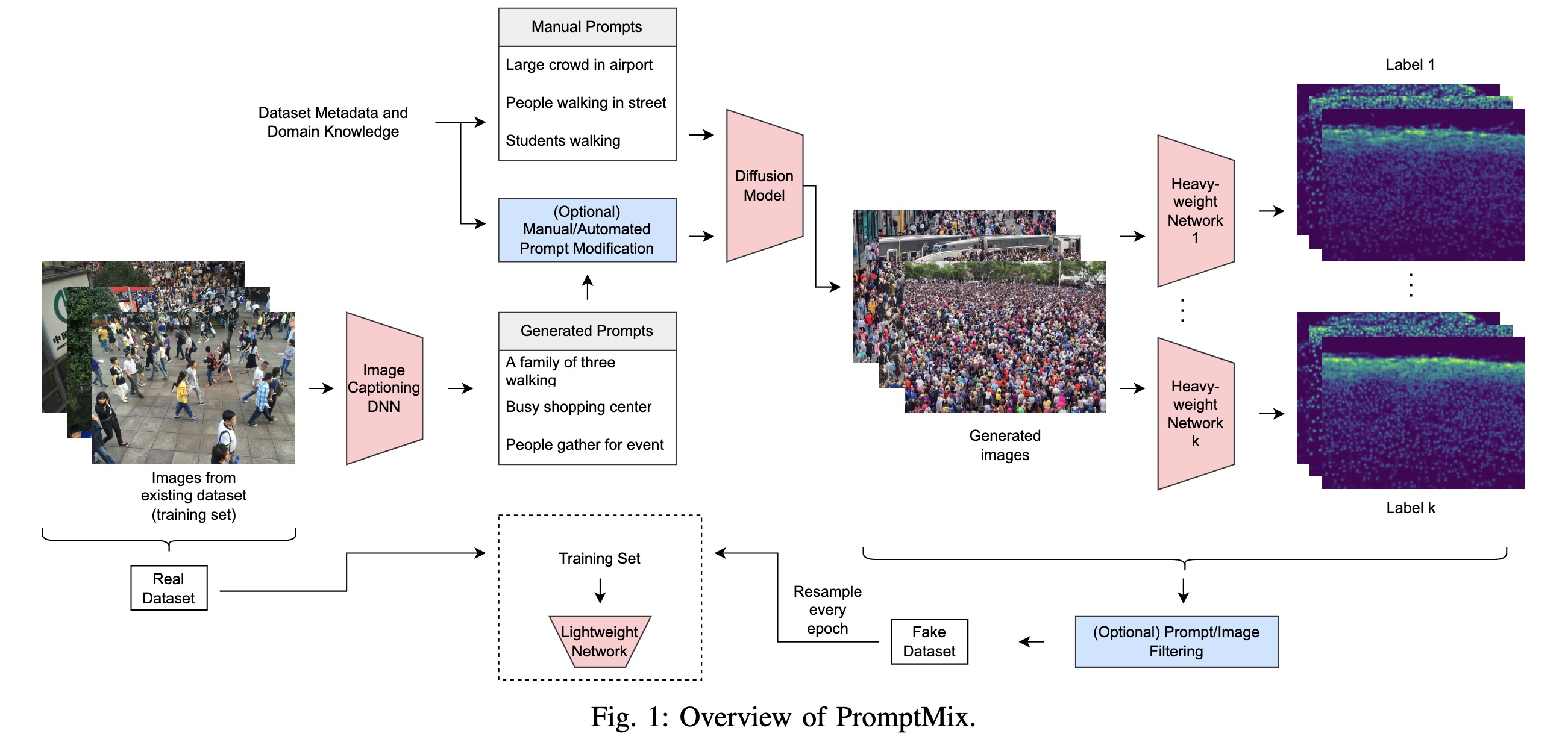

"PromptMix: Text-to-image diffusion models enhance the performance of lightweight networks. (arXiv:2301.12914v2 [cs.CV] UPDATED)" — A method for artificially boosting the size of existing datasets, which can be used to improve the performance of lightweight networks.

Paper: http://arxiv.org/abs/2301.12914

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Overview of PromptMix

Fahim Farook

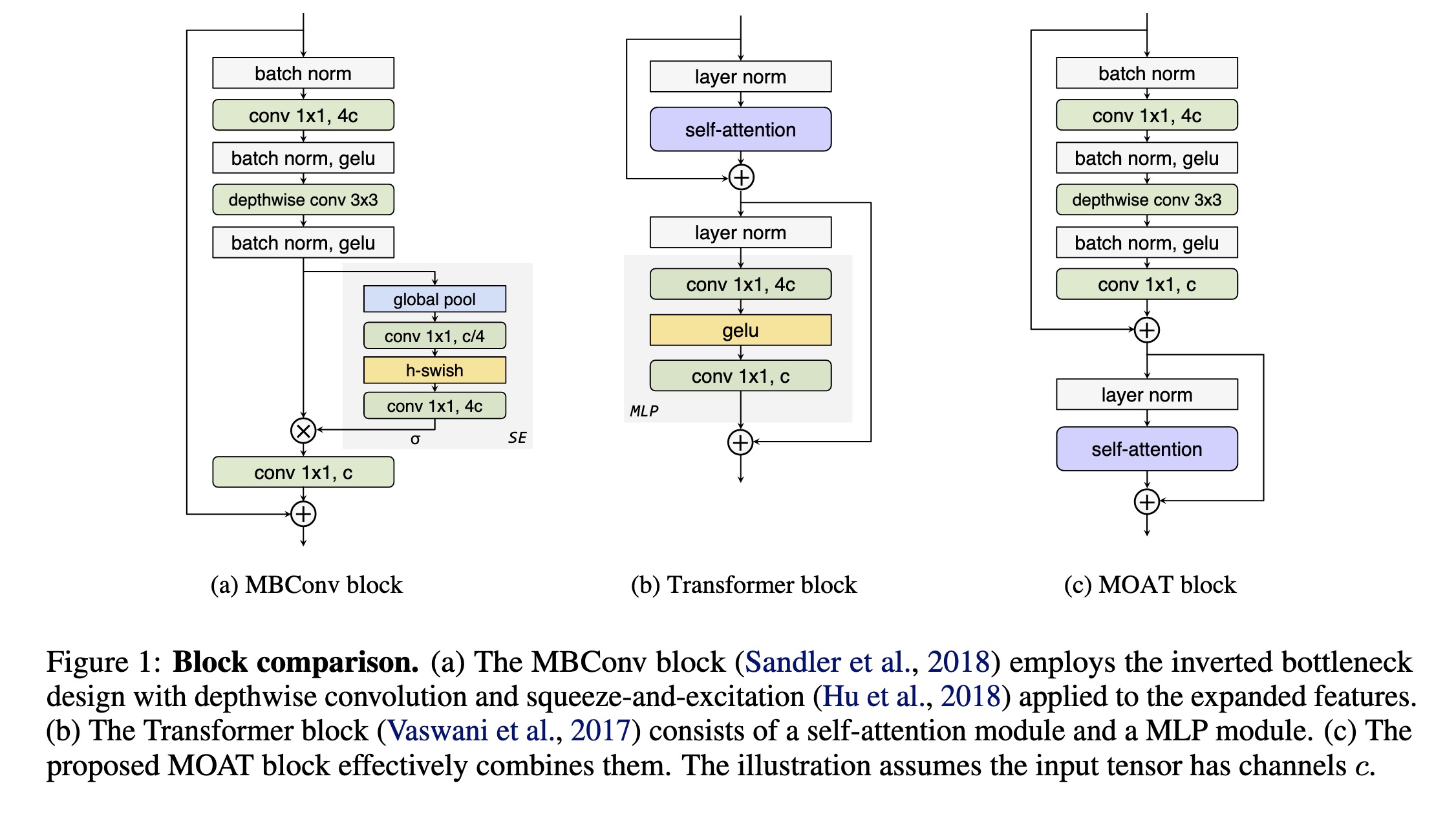

"MOAT: Alternating Mobile Convolution and Attention Brings Strong Vision Models. (arXiv:2210.01820v2 [cs.CV] UPDATED)" — A family of neural networks that builds on top of mobile convolution (i.e., inverted residual blocks) and attention, which not only enhances the network representation capacity but also produces better downsampled features.

Paper: http://arxiv.org/abs/2210.01820

Code: https://github.com/google-research/deeplab2

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Block comparison. (a) The MBCon…

Fahim Farook

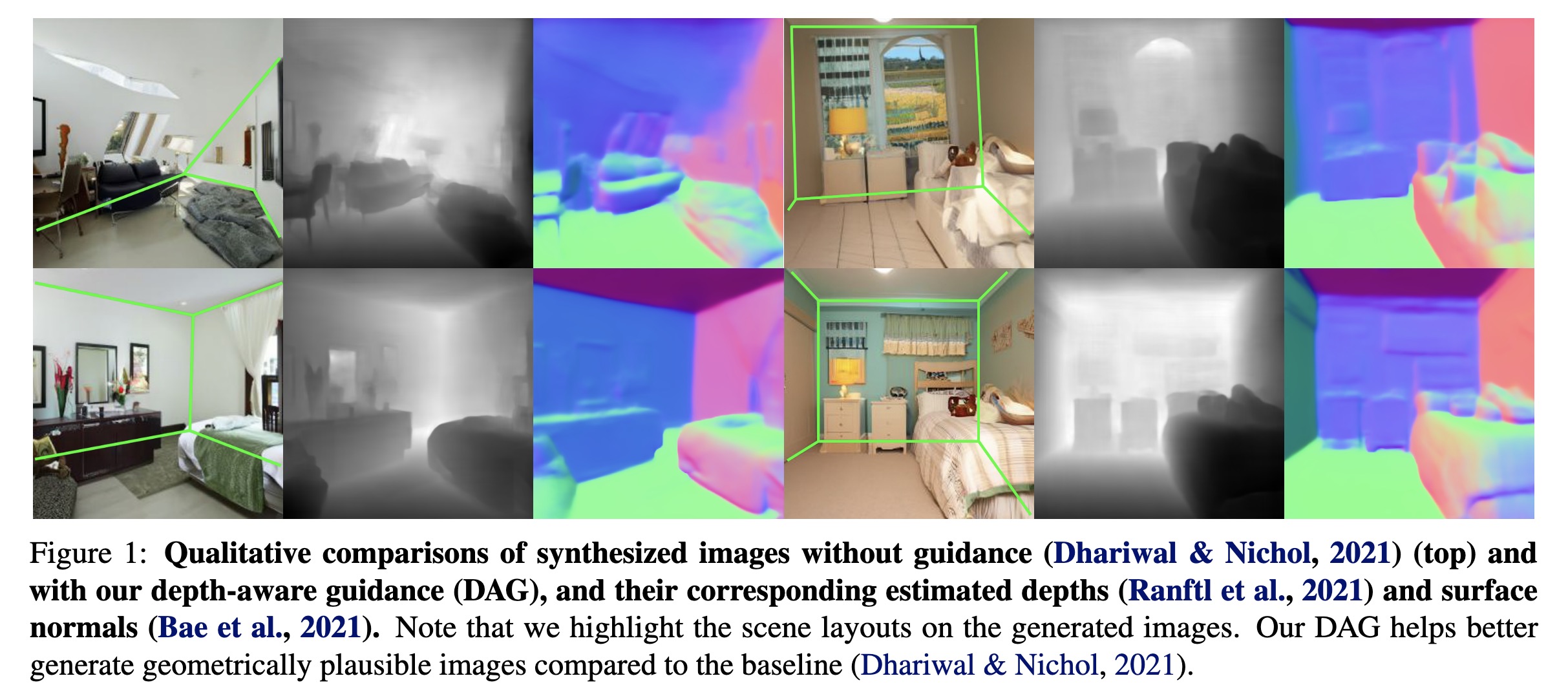

"DAG: Depth-Aware Guidance with Denoising Diffusion Probabilistic Models. (arXiv:2212.08861v2 [cs.CV] UPDATED)" — A guidance method for diffusion models that uses estimated depth information derived from the rich intermediate representations of diffusion models.

Paper: http://arxiv.org/abs/2212.08861

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Qualitative comparisons of synt…

Fahim Farook

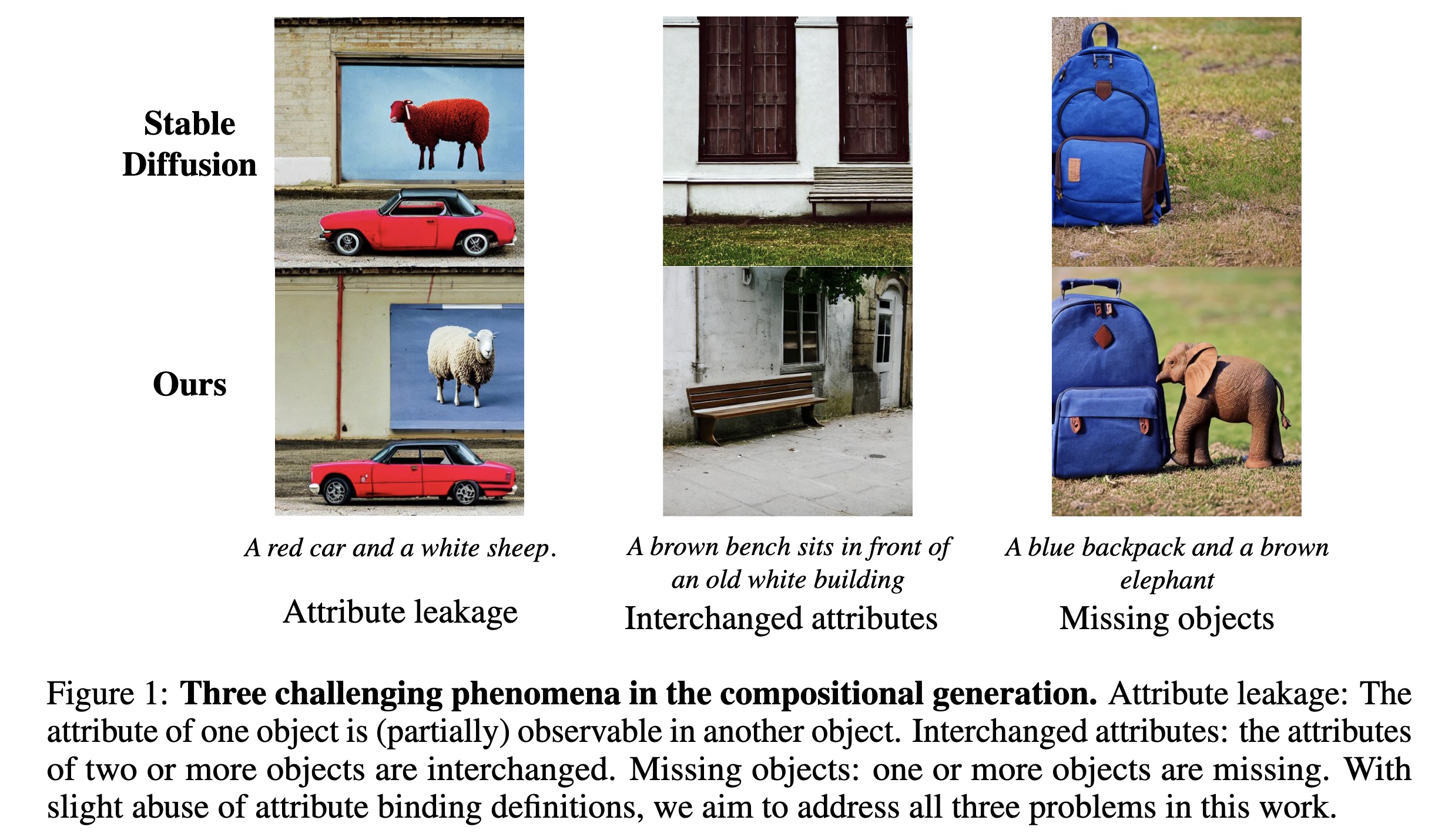

"Training-Free Structured Diffusion Guidance for Compositional Text-to-Image Synthesis. (arXiv:2212.05032v2 [cs.CV] UPDATED)" — Improving the compositional skills of text-to-image models; specifically, obtaining more accurate attribute binding and better image compositions by incorporating linguistic structures with the diffusion guidance process based on the controllable properties of manipulating cross-attention layers in diffusion-based models.

Paper: http://arxiv.org/abs/2212.05032

Code: https://github.com/weixi-feng/structured-diffusion-guidance

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Three challenging phenomena in …

Fahim Farook

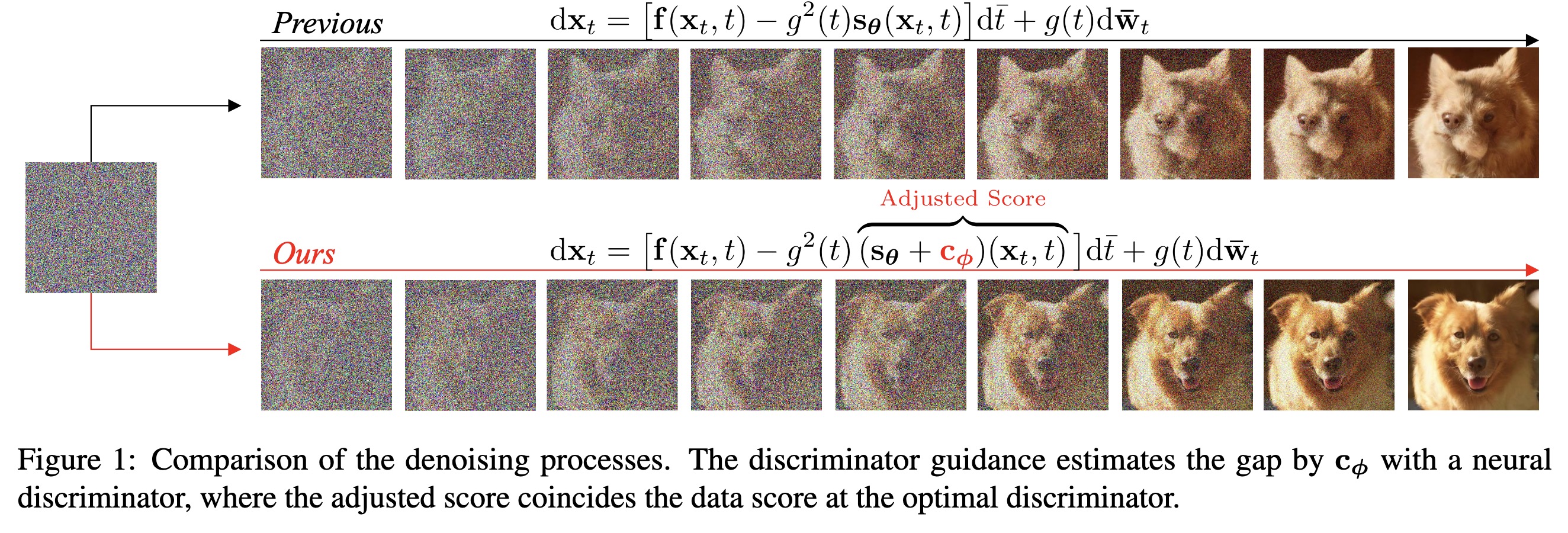

"Refining Generative Process with Discriminator Guidance in Score-based Diffusion Models. (arXiv:2211.17091v2 [cs.CV] UPDATED)" — A generative SDE with score adjustment using an auxiliary discriminator with the goal of improving the original generative process of a pre-trained diffusion model by estimating the gap between the pre-trained score estimation and the true data score.

Paper: http://arxiv.org/abs/2211.17091

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Comparison of the denoising pro…

Fahim Farook

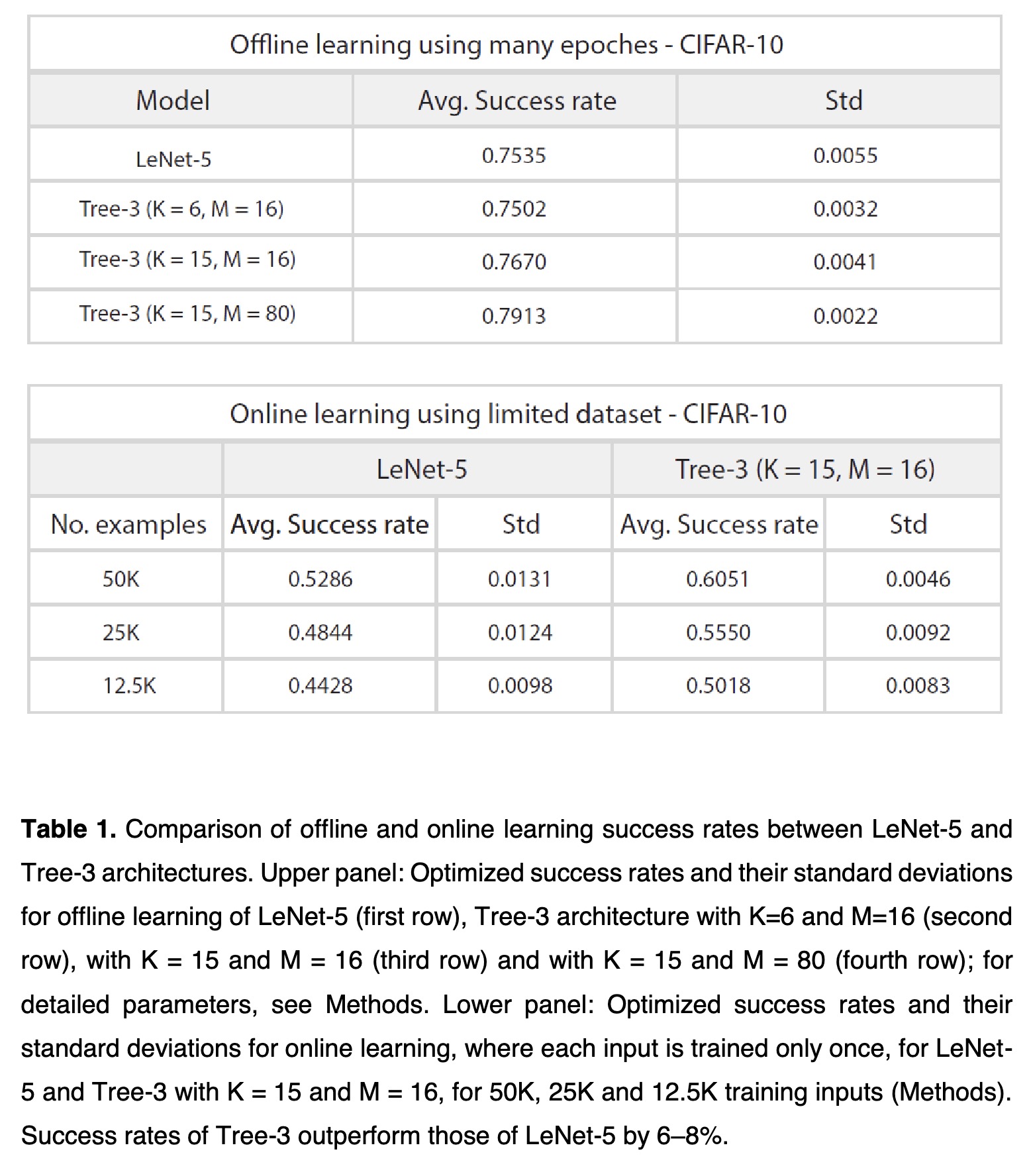

"Learning on tree architectures outperforms a convolutional feedforward network. (arXiv:2211.11378v3 [cs.CV] UPDATED)" — A 3-layer tree architecture inspired by experimental-based dendritic tree adaptations is developed and applied to the offline and online learning of the CIFAR-10 database to show that this architecture outperforms the achievable success rates of the 5-layer convolutional LeNet.

Paper: http://arxiv.org/abs/2211.11378

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Comparison of offline and onlin…

Fahim Farook

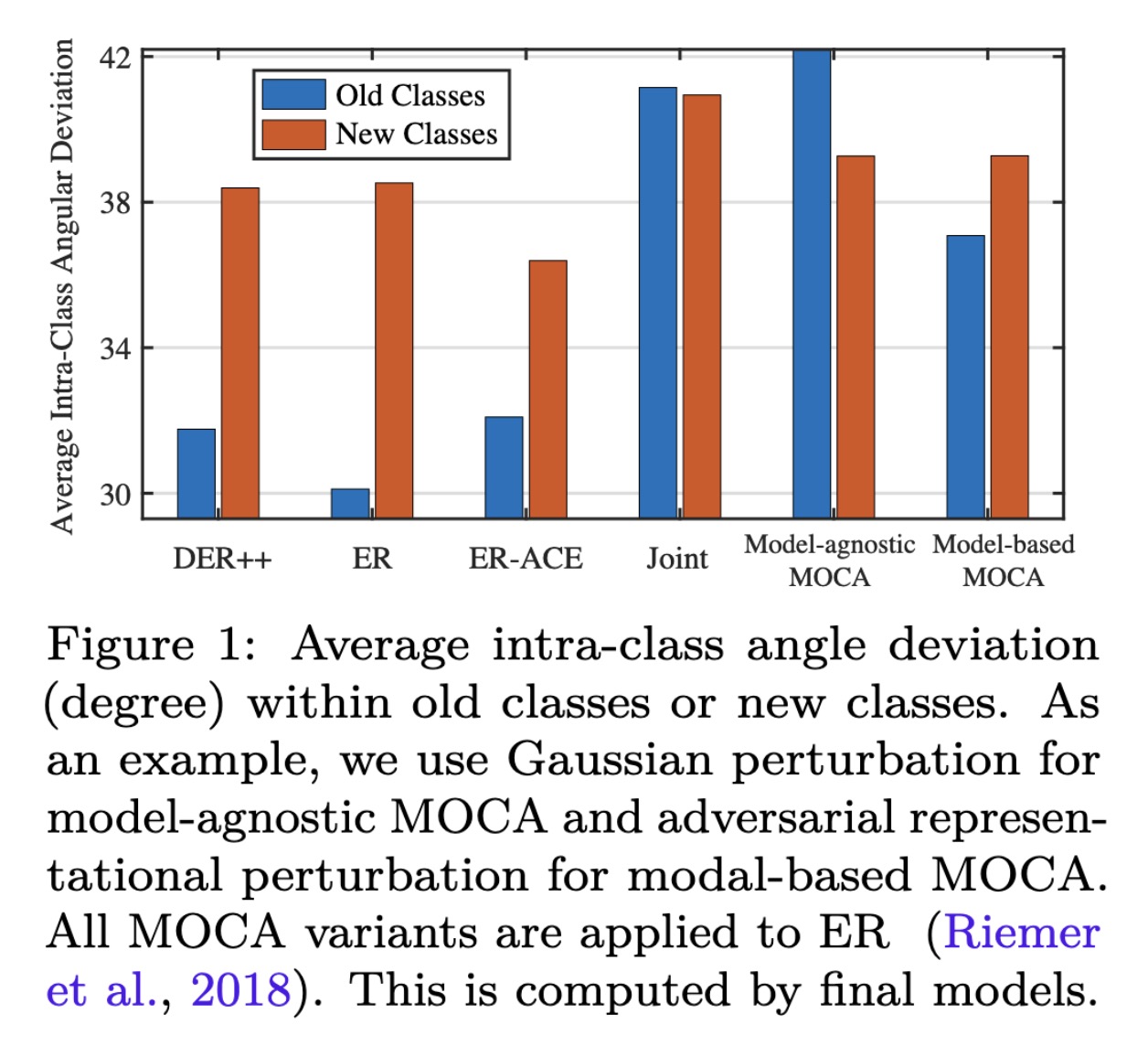

"Continual Learning by Modeling Intra-Class Variation. (arXiv:2210.05398v2 [cs.LG] UPDATED)" — An examination of memory-based continual learning which identifies that large variation in the representation space is crucial for avoiding catastrophic forgetting.

Paper: http://arxiv.org/abs/2210.05398

Code: https://github.com/yulonghui/moca

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Average intra-class angle devia…

Fahim Farook

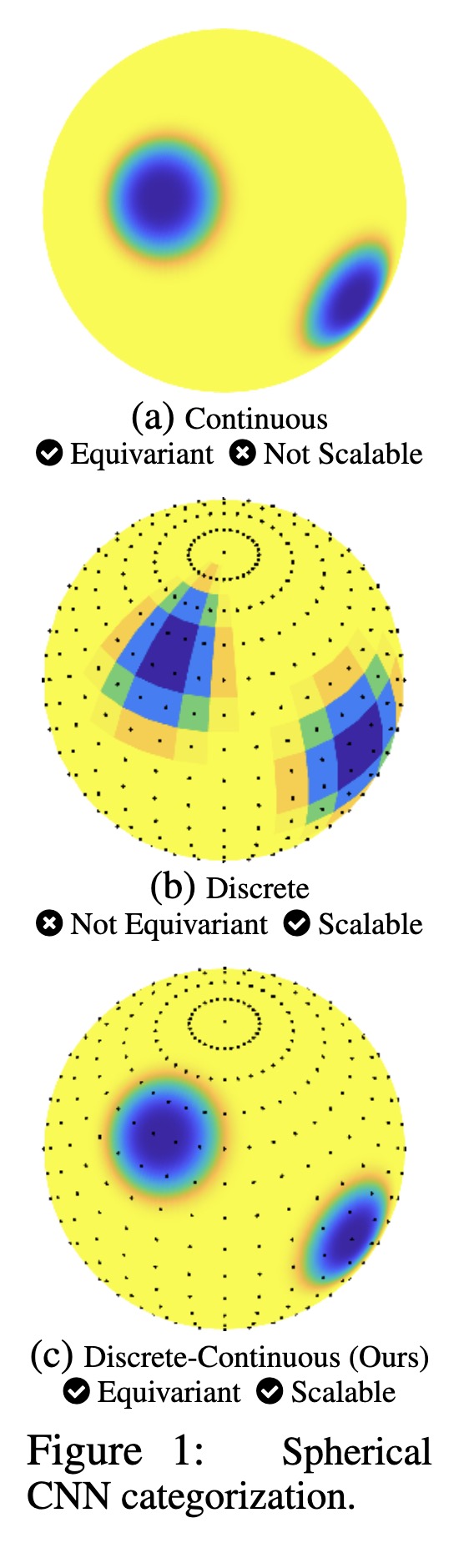

"Scalable and Equivariant Spherical CNNs by Discrete-Continuous (DISCO) Convolutions. (arXiv:2209.13603v3 [cs.CV] UPDATED)" — A hybrid discrete-continuous (DISCO) group convolution for spherical convolutional neural networks (CNNs) that is simultaneously equivariant and computationally scalable to high resolution.

Paper: http://arxiv.org/abs/2209.13603

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Spherical CNN categorization

Fahim Farook

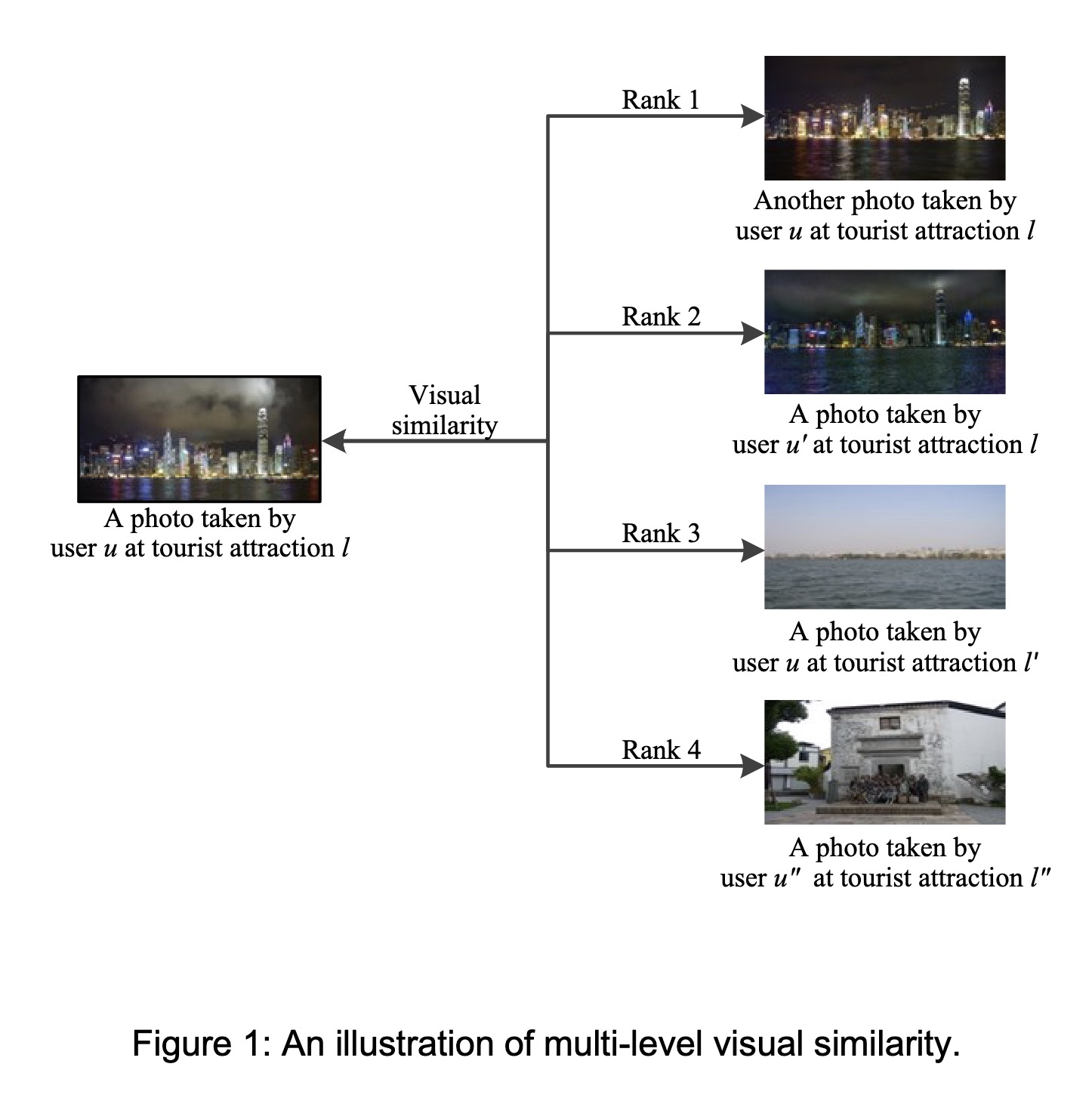

"Multi-Level Visual Similarity Based Personalized Tourist Attraction Recommendation Using Geo-Tagged Photos. (arXiv:2109.08275v2 [cs.MM] UPDATED)" — A geo-tagged photo-based tourist attraction recommendation system that uses the visual content of photos and interaction behavior data to obtain the final embeddings of users and tourist attractions, which are then used to predict visit probabilities.

Paper: http://arxiv.org/abs/2109.08275

Code: https://github.com/revaludo/MEAL

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

An illustration of the multi-le…

Fahim Farook

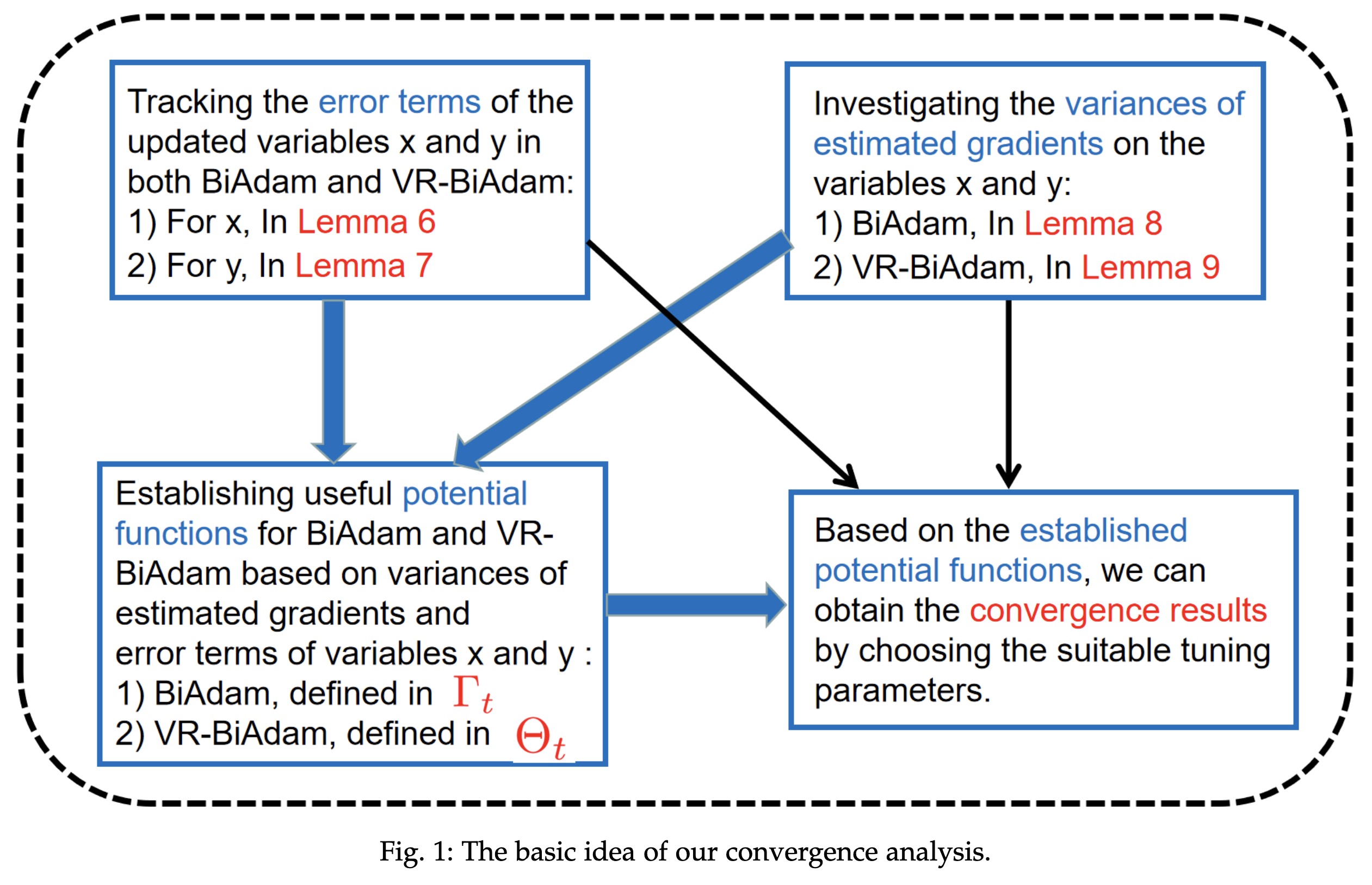

"BiAdam: Fast Adaptive Bilevel Optimization Methods. (arXiv:2106.11396v3 [math.OC] UPDATED)" — A novel fast adaptive bilevel framework to solve stochastic bilevel optimization problems in which the outer problem is possibly nonconvex and the inner problem is strongly convex.

Paper: http://arxiv.org/abs/2106.11396

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

The basic idea of the convergen…

Fahim Farook

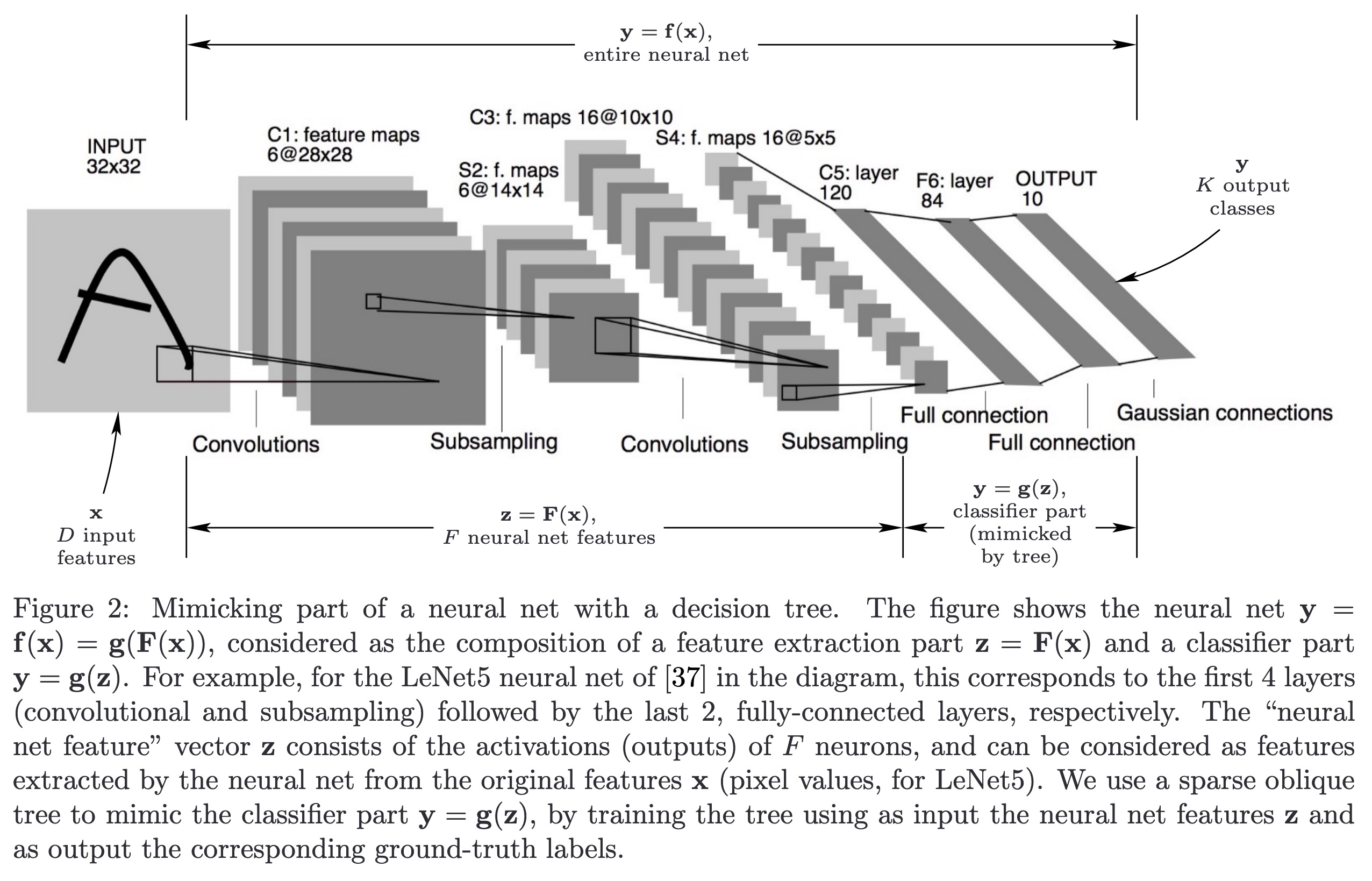

"Sparse Oblique Decision Trees: A Tool to Understand and Manipulate Neural Net Features. (arXiv:2104.02922v2 [cs.LG] UPDATED)" — An effort to understand which of the internal features computed by the neural net are responsible for a particular class, by mimicking part of the neural net with an oblique decision tree having sparse weight vectors at the decision nodes.

Paper: http://arxiv.org/abs/2104.02922

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Mimicking part of a neural net …

Fahim Farook

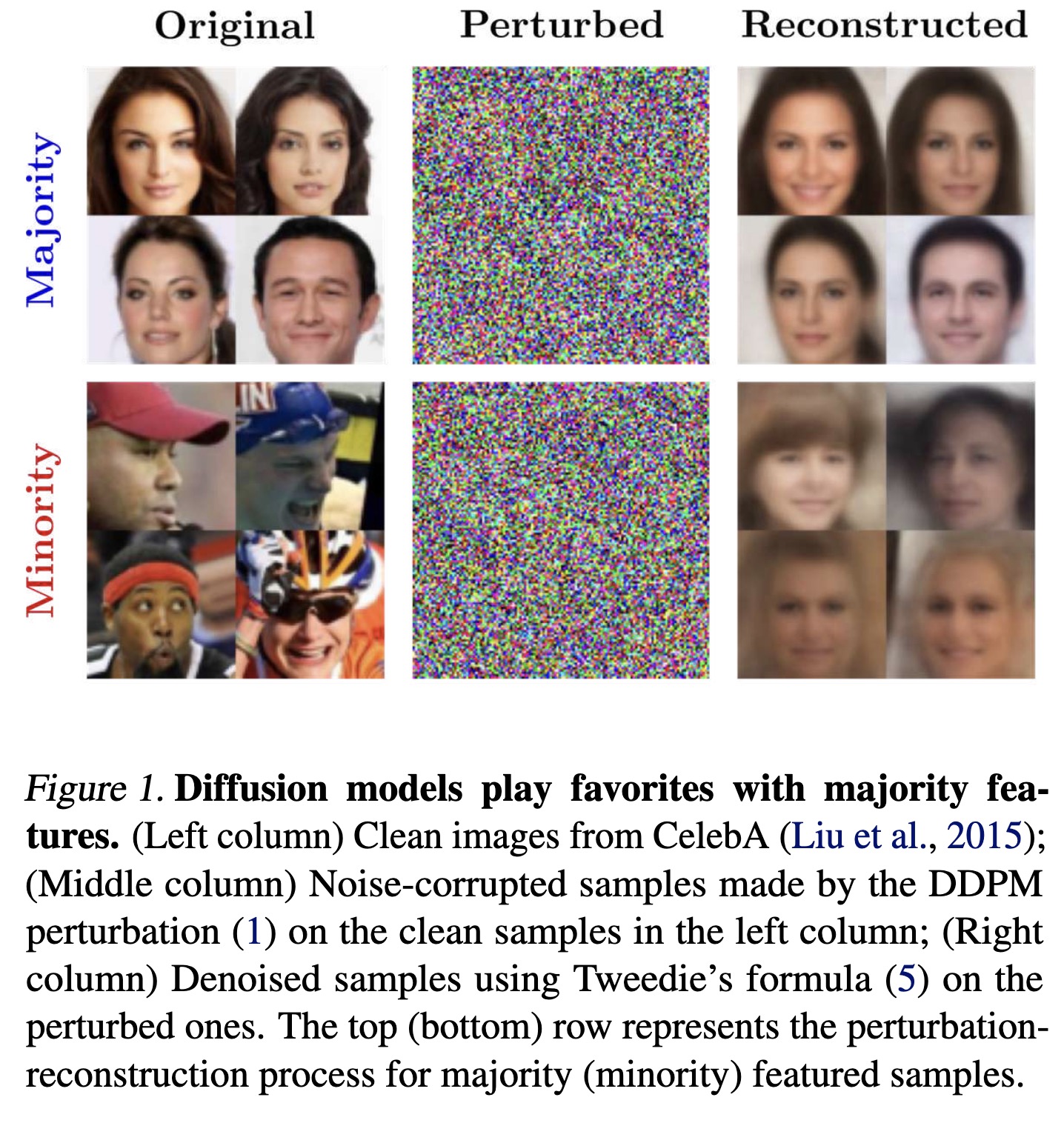

"Don't Play Favorites: Minority Guidance for Diffusion Models. (arXiv:2301.12334v1 [cs.LG])" — A framework that can make the generation process of the diffusion models focus on the minority samples, which are instances that lie on low-density regions of a data manifold.

Paper: http://arxiv.org/abs/2301.12334

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Diffusion models play favorites…

Fahim Farook

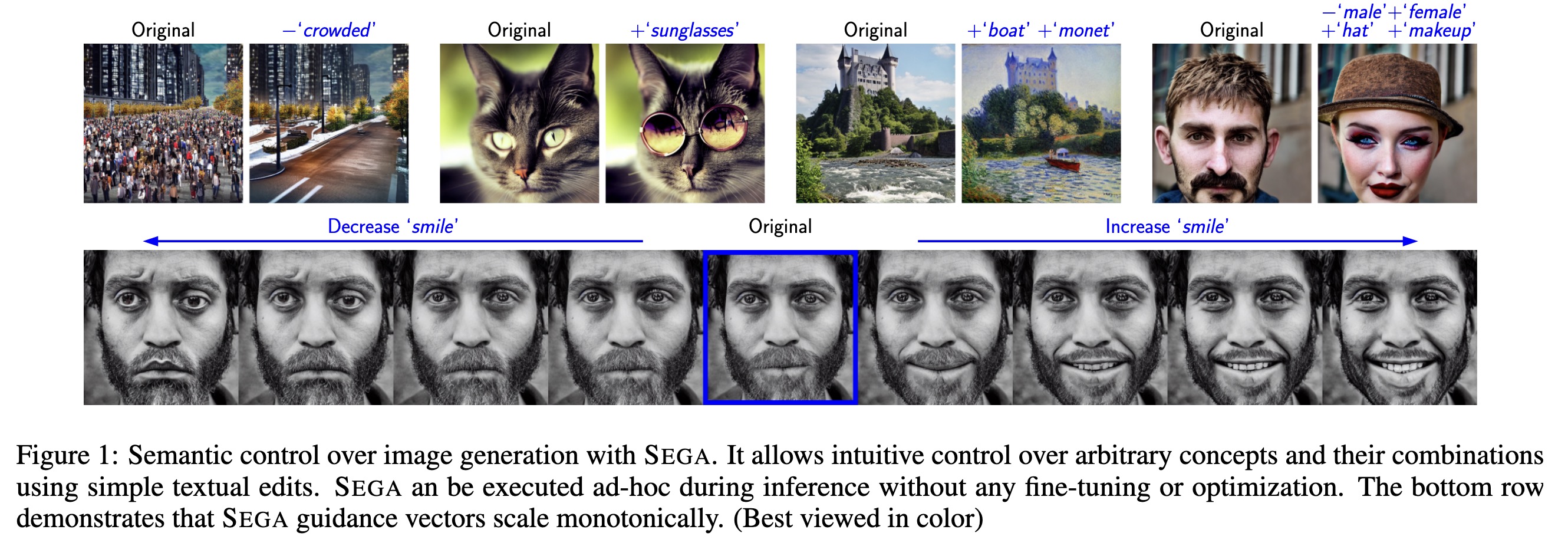

"SEGA: Instructing Diffusion using Semantic Dimensions. (arXiv:2301.12247v1 [cs.CV])" — A semantic guidance method for diffusion models that allows subtle and extensive edits, changes in composition and style, and optimization of the overall artistic conception.

Paper: http://arxiv.org/abs/2301.12247

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Semantic control over image gen…

Fahim Farook

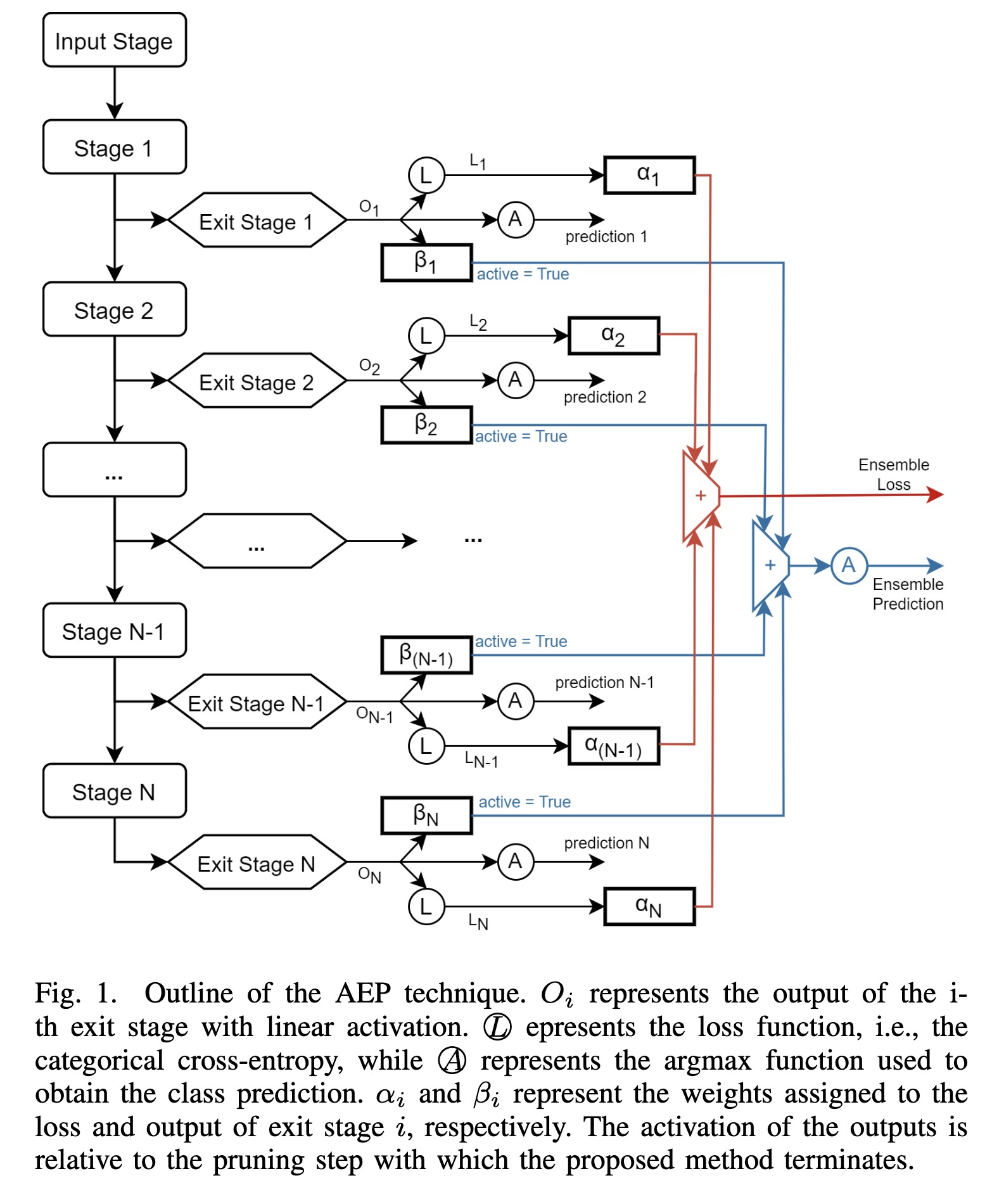

"Anticipate, Ensemble and Prune: Improving Convolutional Neural Networks via Aggregated Early Exits. (arXiv:2301.12168v1 [cs.LG])" — A new training technique based on weighted ensembles of early exits, which aims at exploiting the information in the structure of networks to maximise their performance.

Paper: http://arxiv.org/abs/2301.12168

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Outline of the AEP technique. O…
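
As a rough illustration of what a "weighted ensemble of early exits" can look like at prediction time, the sketch below averages the softmax outputs of several exits using normalized weights. It is only an assumption-laden toy, not the AEP training technique from the paper: the function names `softmax` and `ensemble_exits`, the example logits, and the weights are all hypothetical.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def ensemble_exits(exit_logits, weights):
    """Combine per-exit class probabilities with a weighted average.

    exit_logits: list of arrays, each of shape (batch, classes),
                 one per exit (hypothetical inputs).
    weights:     one non-negative weight per exit; normalized here.
    """
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    # Stack to shape (exits, batch, classes).
    probs = np.stack([softmax(np.asarray(l, dtype=float)) for l in exit_logits])
    # Contract over the exit axis, leaving shape (batch, classes).
    return np.tensordot(w, probs, axes=1)


if __name__ == "__main__":
    early = np.array([[2.0, 0.0, 0.0], [0.0, 1.0, 0.0]])  # shallow exit
    final = np.array([[0.0, 0.0, 3.0], [0.0, 2.0, 0.0]])  # last exit
    combined = ensemble_exits([early, final], weights=[1.0, 3.0])
    print(combined.argmax(axis=-1))
```

Giving later exits larger weights, as in this toy usage, is just one possible choice; the weighting and training procedure in the paper may differ.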

Fahim Farook

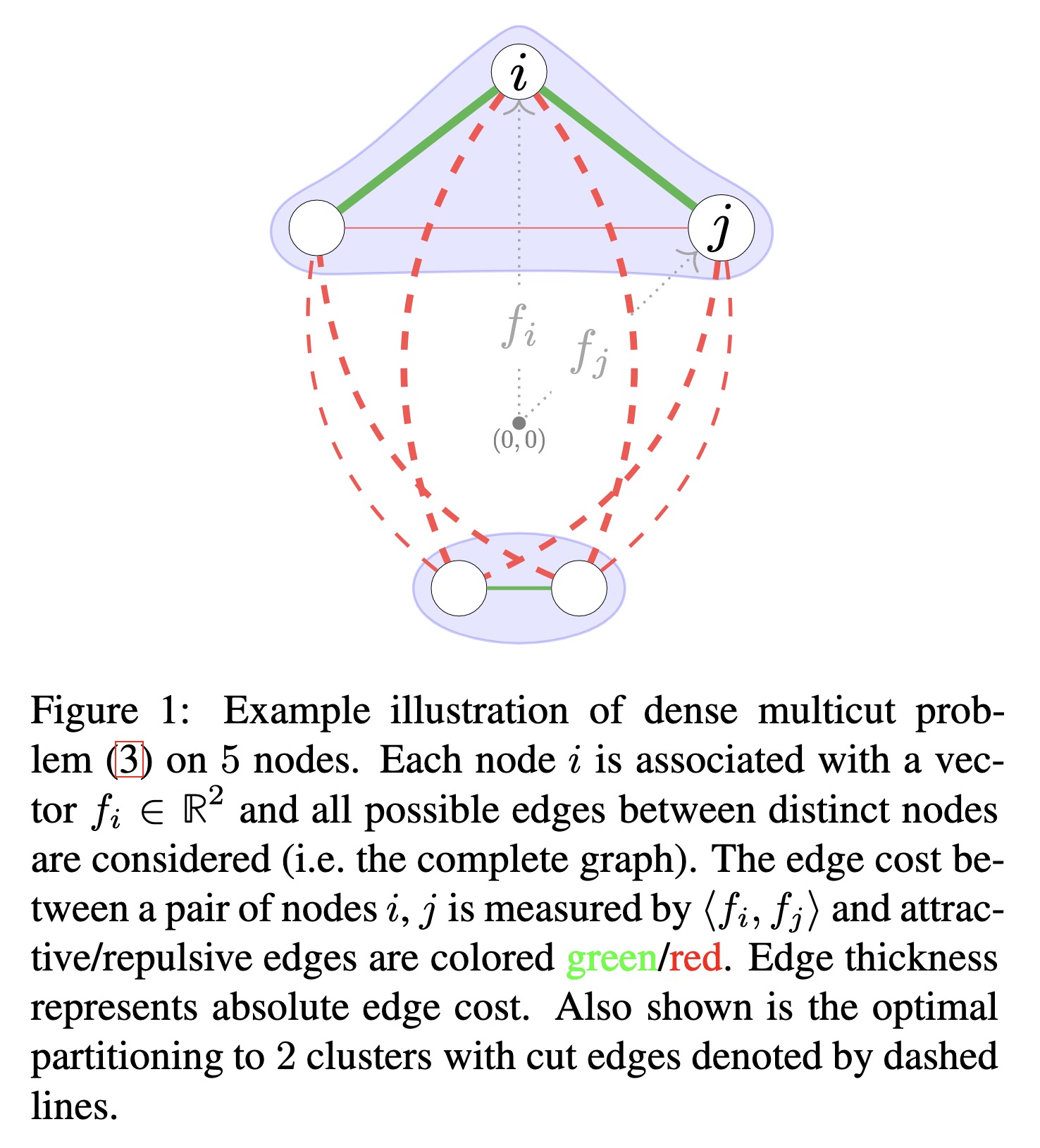

"ClusterFuG: Clustering Fully connected Graphs by Multicut. (arXiv:2301.12159v1 [cs.CV])" — A simpler and potentially better performing graph clustering formulation based on multicut (a.k.a. weighted correlation clustering) on the complete graph.

Paper: http://arxiv.org/abs/2301.12159

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Example illustration of dense m…

Fahim Farook

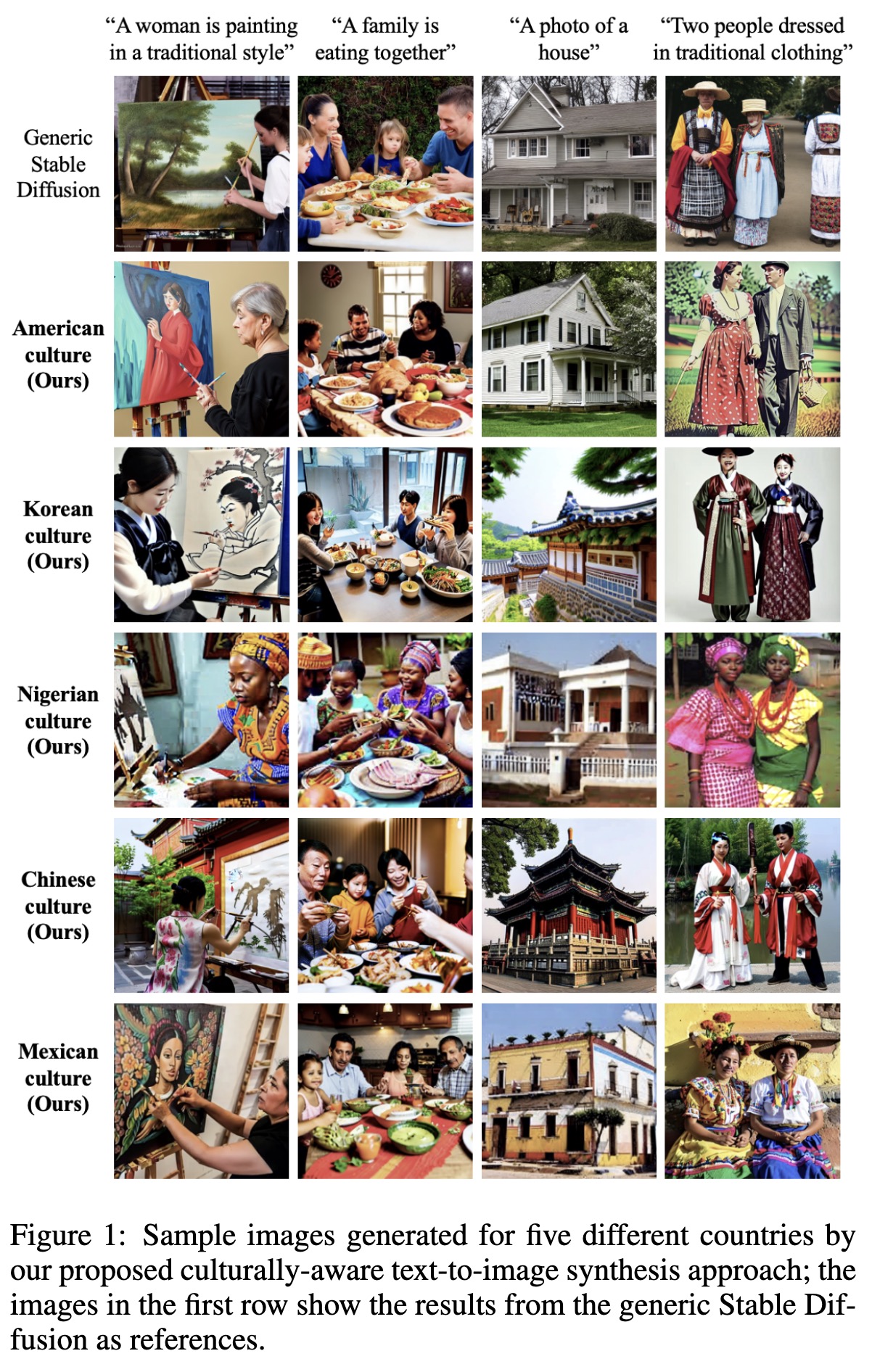

"Towards Equitable Representation in Text-to-Image Synthesis Models with the Cross-Cultural Understanding Benchmark (CCUB) Dataset. (arXiv:2301.12073v1 [cs.CV])" — A culturally-aware priming approach for text-to-image synthesis using a small but culturally curated dataset to fight the bias prevalent in giant datasets.

Paper: http://arxiv.org/abs/2301.12073

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Sample images generated for fiv…

Fahim Farook

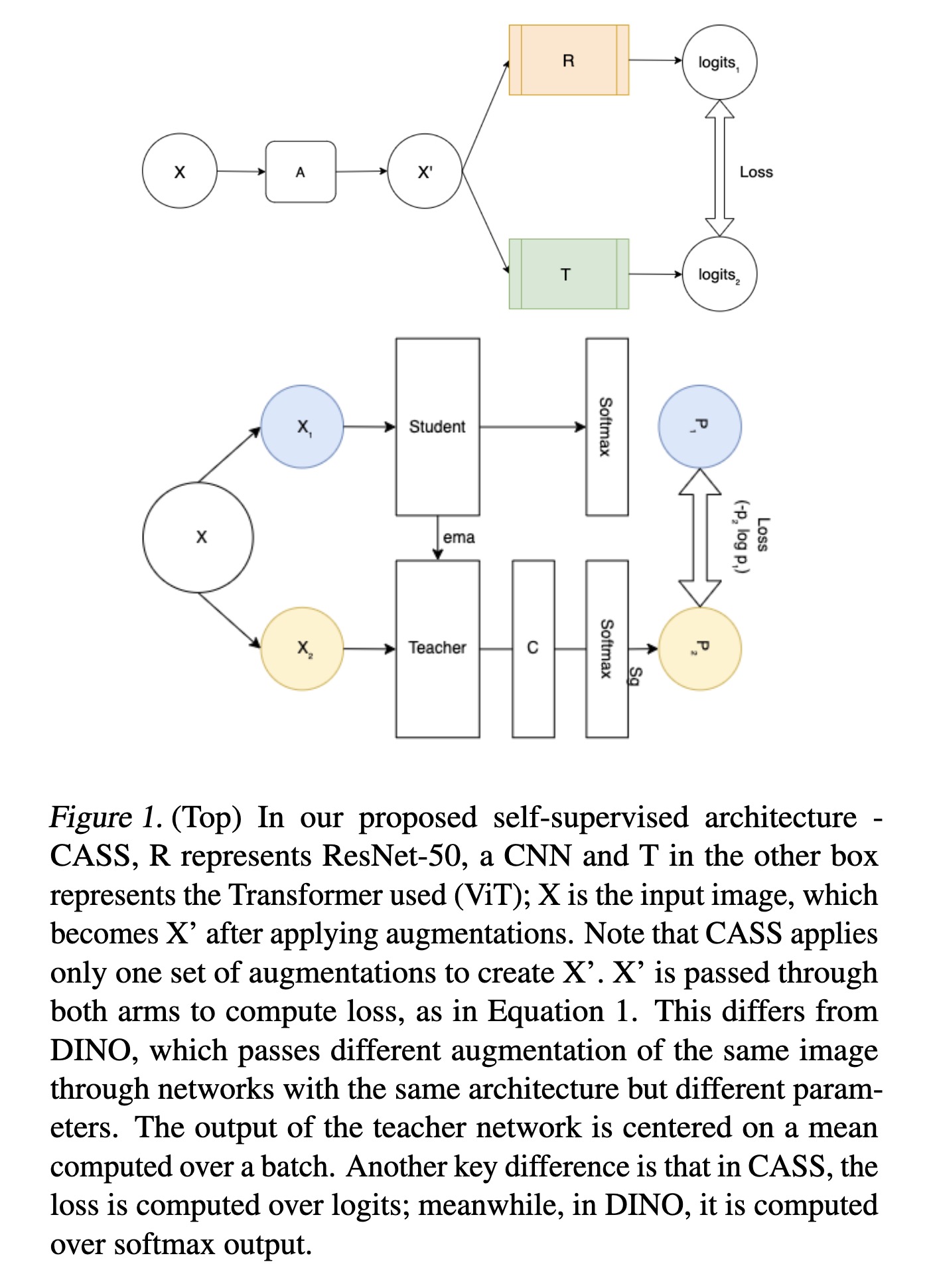

"Cross-Architectural Positive Pairs improve the effectiveness of Self-Supervised Learning. (arXiv:2301.12025v1 [cs.CV])" — A novel self-supervised learning approach that leverages Transformer and CNN simultaneously to overcome the issues with existing self-supervised techniques which have extreme computational requirements and suffer a substantial drop in performance with a reduction in batch size or pretraining epochs.

Paper: http://arxiv.org/abs/2301.12025

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

In our proposed self-supervised…

Fahim Farook

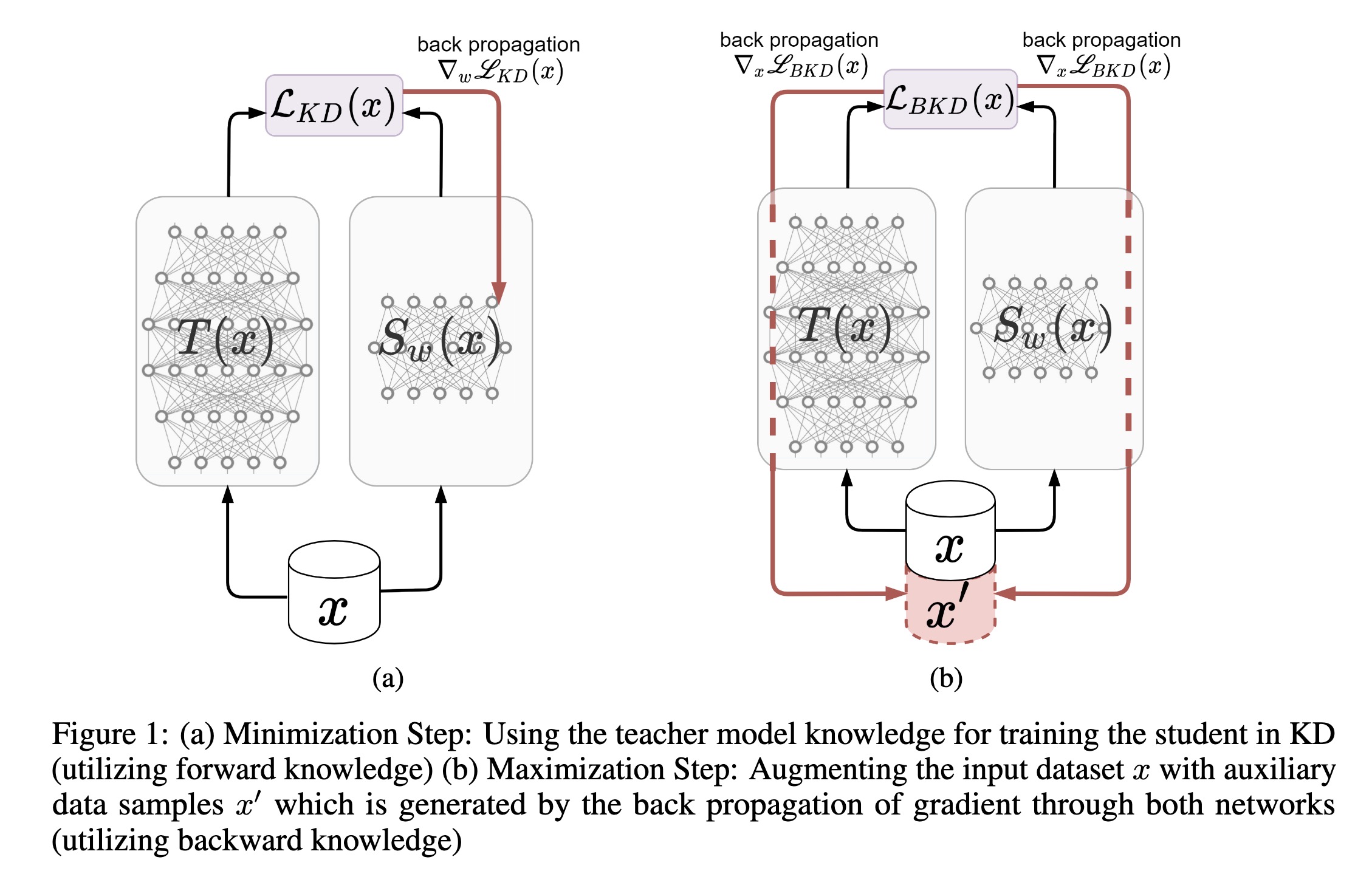

"Improved knowledge distillation by utilizing backward pass knowledge in neural networks. (arXiv:2301.12006v1 [cs.LG])" — Addressing the issue with Knowledge Distillation (KD) where there is no guarantee that the student model would match the teacher in areas for which there are not enough training samples, by generating new auxiliary training samples based on extracting knowledge from the backward pass of the teacher in the areas where the student diverges greatly from the teacher.

Paper: http://arxiv.org/abs/2301.12006

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

a) Minimization Step: Using the…
