Fahim Farook

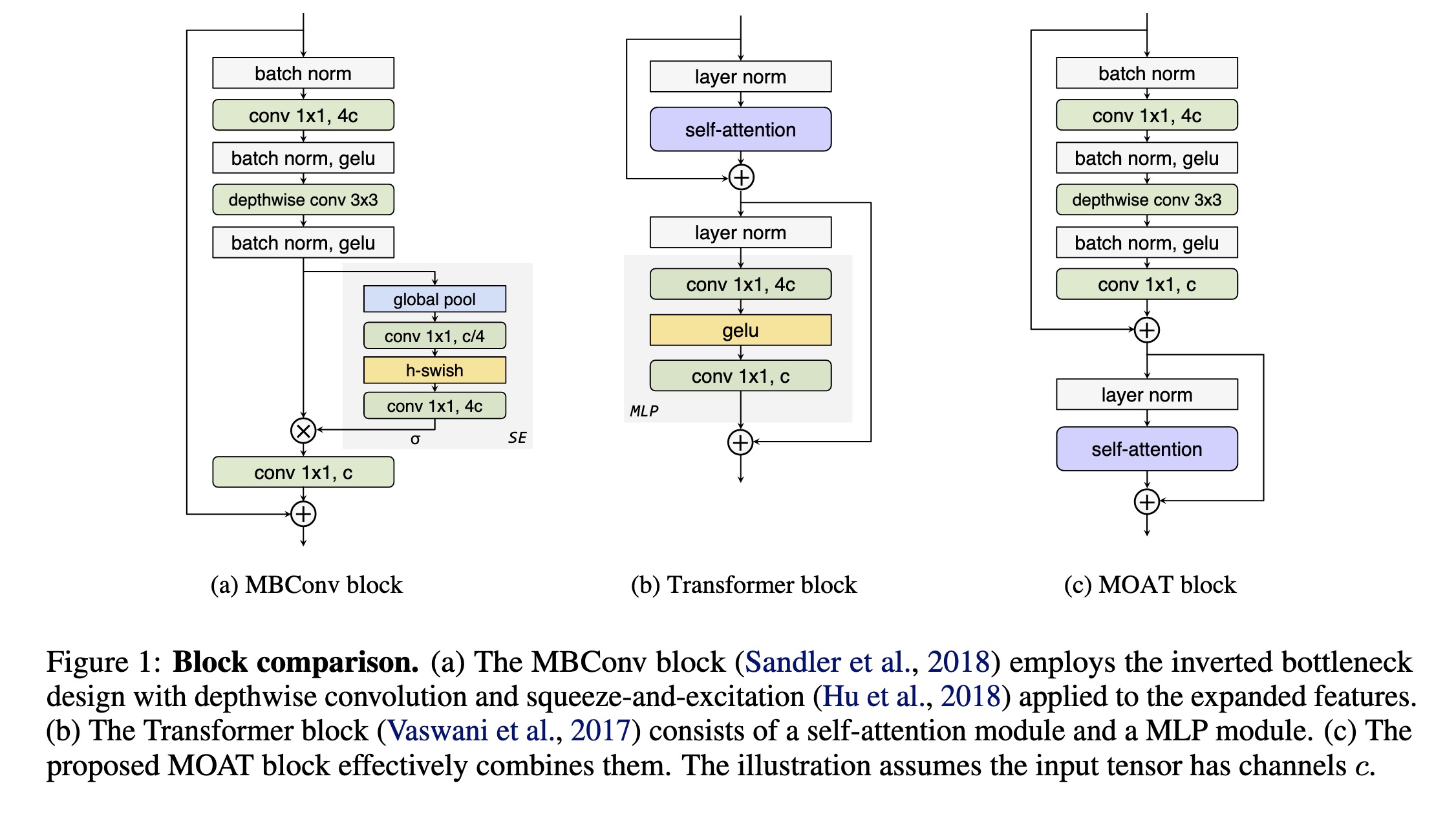

"MOAT: Alternating Mobile Convolution and Attention Brings Strong Vision Models. (arXiv:2210.01820v2 [cs.CV] UPDATED)": A family of neural networks built on mobile convolution (i.e., inverted residual blocks) and attention. The mobile convolution part of the block not only enhances the network's representation capacity but also produces better downsampled features.
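For a concrete picture, here is a minimal, hypothetical sketch in pure Python of a MOAT-style block. It assumes the block applies an inverted residual (MBConv: 1x1 expand, 3x3 depthwise conv, 1x1 project) followed by single-head global self-attention, each with a residual connection. All function and parameter names are illustrative, not the deeplab2 API, and the GELU activation is an assumption. Normalization, squeeze-and-excitation, multi-head splitting, position bias, and strided downsampling are omitted.

```python
import math
import random

# A feature map is stored as nested lists indexed [row][col][channel].


def pointwise(x, w):
    """1x1 convolution: mixes channels at each pixel. w has shape C_in x C_out."""
    c_out = len(w[0])
    return [[[sum(p[i] * w[i][o] for i in range(len(p))) for o in range(c_out)]
             for p in row] for row in x]


def depthwise3x3(x, k):
    """3x3 depthwise convolution, stride 1, zero padding. k has shape 3 x 3 x C."""
    h, w, c = len(x), len(x[0]), len(x[0][0])
    out = [[[0.0] * c for _ in range(w)] for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for ch in range(c):
                total = 0.0
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        ii, jj = i + di, j + dj
                        if 0 <= ii < h and 0 <= jj < w:
                            total += x[ii][jj][ch] * k[di + 1][dj + 1][ch]
                out[i][j][ch] = total
    return out


def gelu(v):
    """GELU activation (tanh approximation); the activation choice is an assumption."""
    return 0.5 * v * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (v + 0.044715 * v ** 3)))


def elementwise(f, x):
    return [[[f(v) for v in p] for p in row] for row in x]


def add(a, b):
    return [[[u + v for u, v in zip(pa, pb)] for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def mbconv(x, w_expand, k_dw, w_project):
    """Inverted residual: expand channels, depthwise conv, project back, add shortcut."""
    hidden = elementwise(gelu, pointwise(x, w_expand))
    hidden = elementwise(gelu, depthwise3x3(hidden, k_dw))
    return add(x, pointwise(hidden, w_project))


def self_attention(x, wq, wk, wv):
    """Single-head global self-attention over all H*W pixels, with a residual add."""
    h, w = len(x), len(x[0])
    tokens = [p for row in x for p in row]  # flatten to N x C

    def proj(m):
        return [[sum(t[i] * m[i][o] for i in range(len(t))) for o in range(len(m[0]))]
                for t in tokens]

    q, k, v = proj(wq), proj(wk), proj(wv)
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append([sum(wt * vj[c] for wt, vj in zip(weights, v)) / z for c in range(len(v[0]))])
    attended = [out[r * w:(r + 1) * w] for r in range(h)]  # reshape back to H x W x C
    return add(x, attended)


def moat_block(x, params):
    """Hypothetical MOAT-style block: MBConv first, then self-attention."""
    x = mbconv(x, params["w_expand"], params["k_dw"], params["w_project"])
    return self_attention(x, params["wq"], params["wk"], params["wv"])


def random_tensor(shape, rng):
    """Nested list of small Gaussian values with the given shape (illustrative weights)."""
    if len(shape) == 1:
        return [rng.gauss(0.0, 0.1) for _ in range(shape[0])]
    return [random_tensor(shape[1:], rng) for _ in range(shape[0])]


if __name__ == "__main__":
    rng = random.Random(0)
    H, W, C, E = 4, 4, 8, 4  # E is the MBConv expansion ratio
    params = {
        "w_expand": random_tensor((C, C * E), rng),
        "k_dw": random_tensor((3, 3, C * E), rng),
        "w_project": random_tensor((C * E, C), rng),
        "wq": random_tensor((C, C), rng),
        "wk": random_tensor((C, C), rng),
        "wv": random_tensor((C, C), rng),
    }
    y = moat_block(random_tensor((H, W, C), rng), params)
    print(len(y), len(y[0]), len(y[0][0]))  # 4 4 8
```

With random weights the block keeps the 4x4x8 shape of its input. Zeroing `w_project` and `wv` reduces it to the identity, which is a quick sanity check of the two residual paths.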

Paper: http://arxiv.org/abs/2210.01820

Code: https://github.com/google-research/deeplab2

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Block comparison. (a) The MBCon…