Fahim Farook

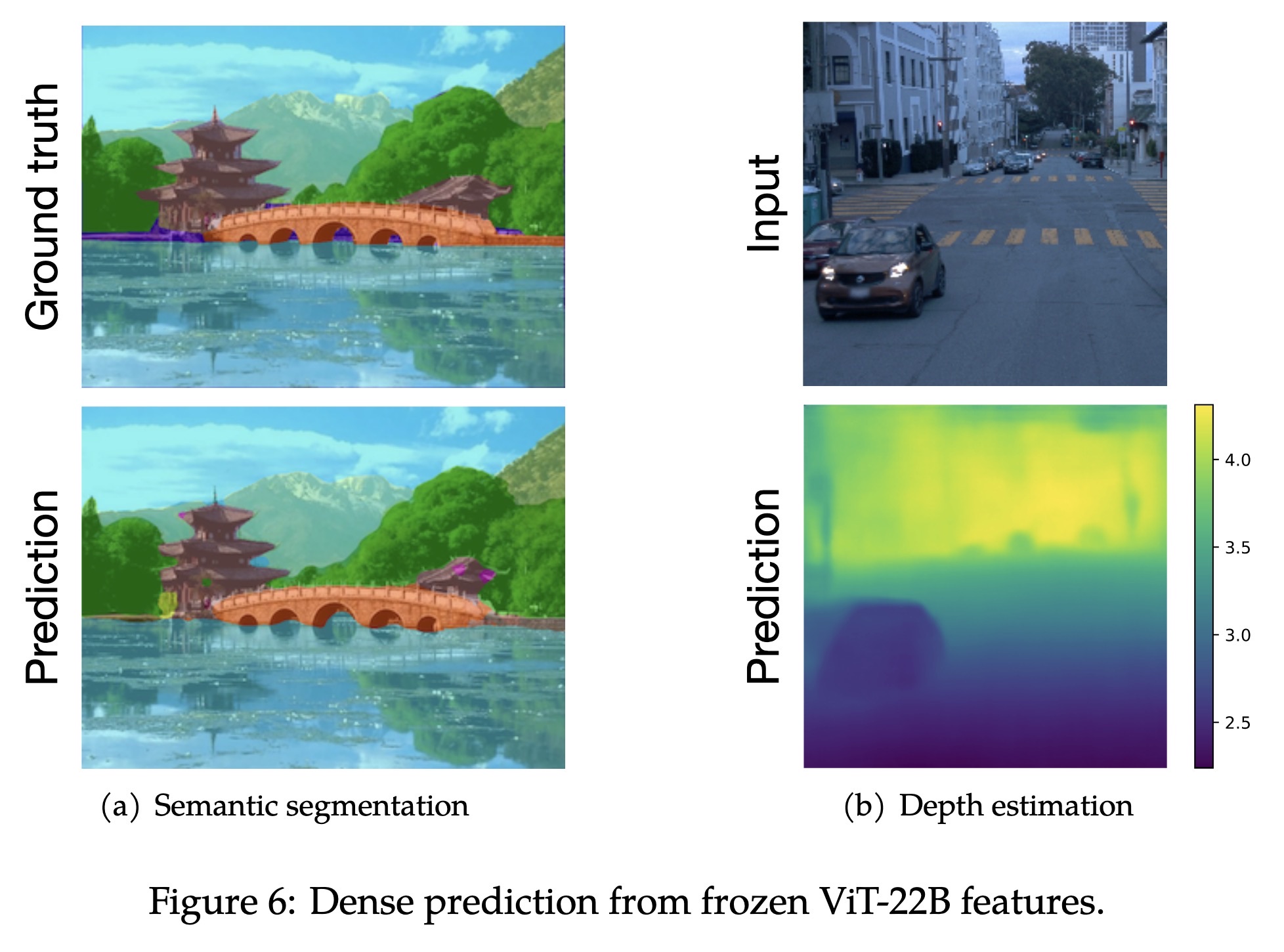

"Scaling Vision Transformers to 22 Billion Parameters. (arXiv:2302.05442v1 [cs.CV])": A recipe for highly efficient and stable training of a 22B-parameter Vision Transformer (ViT), overtaking the previously largest known 4B-parameter model.

Paper: http://arxiv.org/abs/2302.05442

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

Dense prediction from frozen Vi…
