
Fahim Farook


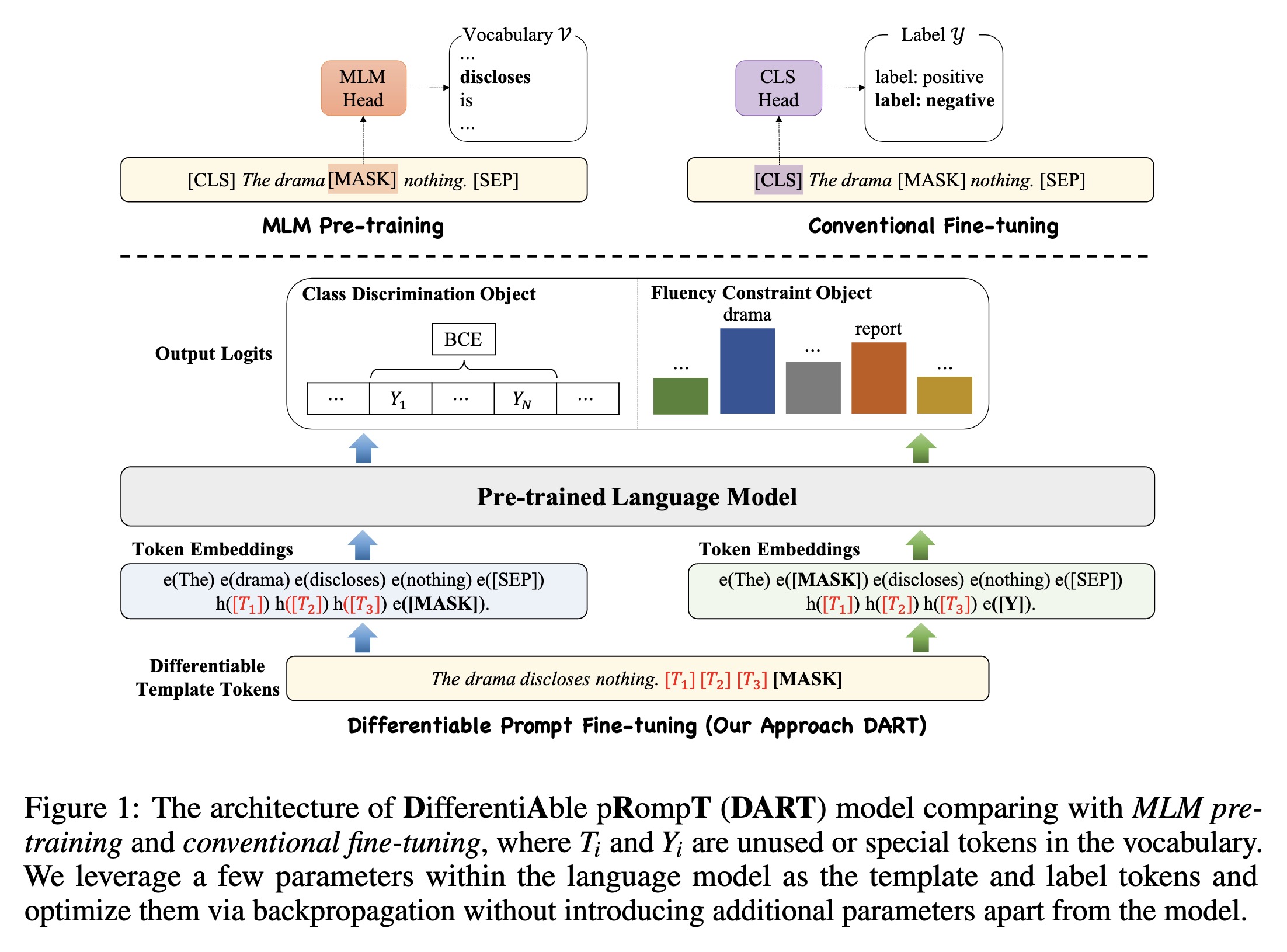

"Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners. (arXiv:2108.13161v7 [cs.CL] CROSS LISTED)": A novel pluggable, extensible, and efficient approach, DifferentiAble pRompT (DART), that can convert small pre-trained language models into better few-shot learners without any prompt engineering.

Paper: http://arxiv.org/abs/2108.13161

Code: https://github.com/zjunlp/DART

#AI #CV #NewPaper #DeepLearning #MachineLearning

<<Find this useful? Please boost so that others can benefit too 🙂>>

The architecture of DifferentiA…
